Find the pre-requisites for deploying DIGIT platform services on AWS

An AWS account with admin access to provision infrastructure. A paid AWS subscription is required.

Install kubectl (any version) on the local machine - it helps interact with the Kubernetes cluster.

Install Helm - this helps package the services, configurations, environments, secrets, etc. into Kubernetes manifests. Verify that the installed Helm version is 3.0 or higher.

Install Terraform through tfswitch: refer to the tfswitch documentation for your platform. Terraform version 0.14.10 can also be installed directly.

Run tfswitch to list the available Terraform versions, then scroll down and select version 0.14.10. Terraform is the Infra-as-code (IaC) tool used to provision cloud resources as code. It provides the desired resource graph and helps destroy the cluster in one go.

Install Golang

For Linux: Follow the instructions here to install Golang on Linux.

For Windows: Download the installer using the link here and follow the installation instructions.

For Mac: Download the installer using the link here and follow the installation instructions.

Install cURL - for making API calls

Install Visual Studio Code - for better code visualization/editing capabilities

Install Postman - to run digit bootstrap scripts

Install AWS CLI
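The tool list above can be checked from a terminal before going further. The sketch below is one possible way to do it, assuming the tools are installed under their usual binary names (kubectl, helm, terraform, go, curl, aws). The function names missing_tools and helm_ok are made up for this example and are not part of DIGIT.

```shell
#!/bin/sh
# Print the name of every tool in the argument list that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
  return 0
}

# Succeed only if the version string looks like Helm 3 or newer.
# "helm version --short" prints something like v3.12.0+g1234abc on Helm 3.
helm_ok() {
  case "$1" in
    v[3-9].*|v[1-9][0-9]*) return 0 ;;
    *) return 1 ;;
  esac
}

missing="$(missing_tools kubectl helm terraform go curl aws)"
if [ -n "$missing" ]; then
  echo "Missing tools:"
  echo "$missing"
fi

if command -v helm >/dev/null 2>&1; then
  helm_ok "$(helm version --short 2>/dev/null)" || echo "Helm 3.0 or higher is required"
fi
```

The script only reports what it finds; it does not install anything.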

Steps to setup the AWS account for deployment

Follow the details below to set up your AWS account before you proceed with the DIGIT deployment.

Once the command line access is configured, everything is set to proceed with the terraform to provision the DIGIT Infra-as-code.

If you have any questions please write to us.

Make sure to use the appropriate discussion category and labels to address the issues better.

The pre-requisites for deploying on Azure

Azure Kubernetes Service (AKS) is an Azure service for deploying, managing and scaling distributed, containerized workloads. Here, the AKS cluster is provisioned on Azure from the ground up using Terraform (infra-as-code), and the DIGIT platform services are then deployed as config-as-code using Helm.

Know about AKS: https://www.youtube.com/watch?v=i5aALhhXDwc&ab_channel=DevOpsCoach

Know what is terraform: https://youtu.be/h970ZBgKINg

Azure subscription: If you don't have an Azure subscription, create a free account before you begin.

Install Azure CLI

Configure Terraform: Follow the directions in the article, Terraform and configure access to Azure

Azure service principal: Follow the directions in the Create the service principal section in the article, Create an Azure service principal with Azure CLI. Take note of the values for the appId, displayName, password, and tenant.

Install kubectl on your local machine which helps you interact with the Kubernetes cluster.

Install Helm that helps you package the services along with the configurations, environments, secrets, etc into Kubernetes manifests.
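The service principal values noted above (appId, password, tenant) are commonly passed to Terraform through environment variables. The sketch below assumes the azurerm provider's ARM_* variables are used for authentication, which may not match how your Terraform setup is configured. The function name export_sp_env and the placeholder values are hypothetical.

```shell
#!/bin/sh
# Map service principal fields to the environment variables read by
# Terraform's azurerm provider.
#   $1 = appId, $2 = password, $3 = tenant, $4 = subscription ID
export_sp_env() {
  if [ "$#" -ne 4 ]; then
    echo "usage: export_sp_env <appId> <password> <tenant> <subscriptionId>" >&2
    return 1
  fi
  export ARM_CLIENT_ID="$1"
  export ARM_CLIENT_SECRET="$2"
  export ARM_TENANT_ID="$3"
  export ARM_SUBSCRIPTION_ID="$4"
}

# Placeholder values: substitute the ones printed when the service
# principal was created. Avoid committing real secrets to a file.
export_sp_env "<appId>" "<password>" "<tenant>" "<subscriptionId>"
```

Exporting the values in the current shell keeps them out of the Terraform files themselves.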

Pre-requisites for deployment on SDC

Check the hardware and software pre-requisites for deployment on SDC.

Kubernetes nodes

Ubuntu 18.04

SSH

Privileged user

Python

Bastion machine

Ansible

Git

Python

Steps to setup CI/CD on SDC

Kubespray is a composition of Ansible playbooks, inventory, provisioning tools, and domain knowledge for generic OS/Kubernetes cluster configuration management tasks. Kubespray provides:

a highly available cluster

composable attributes

support for most popular Linux distributions

continuous-integration tests

Fork the repos below to your GitHub Organization account

Go lang (version 1.13.X)

Install kubectl on your local machine to interact with the Kubernetes cluster.

Install Helm to help package the services along with the configurations, environment, secrets, etc into Kubernetes manifests.

One Bastion machine to run Kubespray

One HAProxy machine with a public IP that acts as a load balancer (CPU: 2 cores, memory: 4 GB)

One machine that acts as the master node (CPU: 2 cores, memory: 4 GB)

One machine that acts as a worker node (CPU: 8 cores, memory: 16 GB)

Two iSCSI volumes for persistent storage:

kaniko-cache-claim: 10 GB

Jenkins home: 100 GB

Kubernetes nodes

Ubuntu 18.04

SSH

Privileged user

Python

Bastion machine

Ansible

git

Python

Run and follow instructions on all nodes.

Ansible needs Python to be installed on all the machines.

apt-get update && apt-get install python3-pip -y

All the machines should be on the same network and run Ubuntu or CentOS.

Generate an SSH key on the Bastion machine and copy it to every server in your inventory.

Generate the SSH key: ssh-keygen -t rsa

Copy over the public key to all nodes.
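The two steps above (generate the key, copy it to the nodes) can be scripted from the Bastion machine. The following is a minimal sketch, assuming the nodes accept password login for the initial copy. The user name, the IP addresses and the function names are placeholders made up for this example. By default it only prints the commands; set DRY_RUN=0 to execute them.

```shell
#!/bin/sh
# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) a command.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Generate a key pair if none exists yet, then copy the public key to
# each node given as user@host.
distribute_key() {
  key="$HOME/.ssh/id_rsa"
  [ -f "$key" ] || run ssh-keygen -t rsa -b 4096 -N "" -f "$key"
  for node in "$@"; do
    run ssh-copy-id -i "$key.pub" "$node"
  done
}

# Hypothetical node addresses; replace with the hosts in your inventory.
distribute_key ubuntu@10.10.1.3 ubuntu@10.10.1.4
```

An empty passphrase (-N "") lets Ansible connect without prompting; use a passphrase and an SSH agent if your security policy requires it.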

Clone the official repository

Install dependencies from requirements.txt

Create Inventory

where mycluster is the custom configuration name. Replace with whatever name you would like to assign to the current cluster.

Create inventory using an inventory generator.

Once it runs, you can see an inventory file that looks like the below:

Review and change parameters under inventory/mycluster/group_vars

Deploy Kubespray with Ansible Playbook - run the playbook as Ubuntu

The --become option is required because the playbook performs privileged tasks such as writing SSL keys to /etc/, installing packages and interacting with various system daemons.

Note: Without --become - the playbook will fail to run!

Kubernetes cluster will be created with three masters and four nodes with the above process.

The kubeconfig is generated in the .kube folder, and the cluster can be accessed through it.

Install the haproxy package on the machine allocated as the proxy:

sudo apt-get install haproxy -y

IPs need to be whitelisted as per the requirements in the config.

sudo vim /etc/haproxy/haproxy.cfg

The SDC team provides iSCSI volumes as per the requisition. These can be used for StatefulSets.

Refer to the doc here.

Deploy DIGIT using Kubespray

Kubespray is a composition of Ansible playbooks, inventory, provisioning tools, and domain knowledge for generic OS/Kubernetes cluster configuration management tasks. Kubespray provides:

a highly available cluster

composable attributes

support for most popular Linux distributions

continuous-integration tests

Before we can get started, we need a few prerequisites to be in place. This is what we are going to need:

A host with Ansible installed. Click here to learn more about Ansible. Find the Ansible installation details here.

You should also set up an SSH key pair to authenticate to the Kubernetes nodes without using a password. This permits Ansible to perform optimally.

A few servers/hosts/VMs to serve as targets for the Kubernetes deployment. This example uses Ubuntu 18.04 servers with 4 GB RAM and 2 vCPUs each, which is sufficient for testing. You need to be able to SSH into each of these nodes as root using the SSH key pair mentioned above.

The above will do the following:

Create a new Linux User Account for use with Kubernetes on each node

Install Kubernetes and containers on each node

Configure the Master node

Join the Worker nodes to the new cluster

Ansible needs Python to be installed on all the machines.

apt-get update && apt-get install python3-pip -y

All the machines should be on the same network and run Ubuntu or CentOS.

Generate an SSH key on the Bastion machine and copy it to every server in your inventory.

Generate the SSH key: ssh-keygen -t rsa

Copy over the public key to all nodes.

Clone the official repository

Install dependencies from requirements.txt

Create Inventory

where mycluster is the custom configuration name. Replace with whatever name you would like to assign to the current cluster.

Create inventory using an inventory generator.

Once it runs, you can see an inventory file that looks like the below:

Review and change parameters under inventory/mycluster/group_vars

Deploy Kubespray with Ansible Playbook - run the playbook as Ubuntu

The --become option is required because the playbook performs privileged tasks such as writing SSL keys to /etc/, installing packages and interacting with various system daemons.

Note: Without --become - the playbook will fail to run!
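The Kubespray steps listed above can be laid out as a single script. This is only a sketch: it assumes the upstream kubernetes-sigs/kubespray repository and the inventory builder at contrib/inventory_builder/inventory.py, which shipped with older Kubespray releases and may be missing or located elsewhere in the version you use. The node IPs and function names are placeholders. By default the script prints the commands instead of running them.

```shell
#!/bin/sh
# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) a command.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# $1 = cluster name (for example mycluster); remaining arguments = node IPs.
# The body runs in a subshell so the cd does not affect the caller.
deploy_kubespray() (
  cluster="$1"
  shift
  inv="inventory/$cluster"
  run git clone https://github.com/kubernetes-sigs/kubespray.git
  [ "$DRY_RUN" = "1" ] || cd kubespray || exit 1
  run pip3 install -r requirements.txt
  run cp -rfp inventory/sample "$inv"
  # Inventory generator (assumed path, see the note above).
  run env CONFIG_FILE="$inv/hosts.yaml" python3 contrib/inventory_builder/inventory.py "$@"
  # Review inventory/<cluster>/group_vars before this step on a real run.
  # --become is required: the playbook writes to /etc and installs packages.
  run ansible-playbook -i "$inv/hosts.yaml" --become --become-user=root cluster.yml
)

# Hypothetical node IPs.
deploy_kubespray mycluster 10.10.1.3 10.10.1.4 10.10.1.5
```

On a real run it is safer to execute the steps one at a time, so that the generated inventory and group_vars can be reviewed before the playbook starts.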

Kubernetes cluster will be created with three masters and four nodes using the above process.

The kubeconfig is generated in the .kube folder, and the cluster can be accessed through it.

Install the haproxy package on the machine allocated as the proxy:

sudo apt-get install haproxy -y

IPs need to be whitelisted as per the requirements in the config.

sudo vim /etc/haproxy/haproxy.cfg

The SDC team provides iSCSI volumes as per the requisition. These can be used for StatefulSets.

Note: Please refer to the DIGIT deployment documentation to deploy DIGIT services.

Deployment on SDC

Running Kubernetes on-premise gives a cloud-native experience on SDC when it comes to deploying DIGIT.

Whether States have their own on-premise data centre or have decided to forego the various managed cloud solutions, there are a few things one should know when getting started with on-premise K8s.

One should be familiar with Kubernetes and its control plane, which consists of the kube-apiserver, kube-scheduler, kube-controller-manager and an etcd datastore. For managed cloud solutions like Google Kubernetes Engine (GKE) or Azure Kubernetes Service (AKS), it also includes the cloud-controller-manager. This is the component that connects the cluster to external cloud services to provide networking, storage, authentication, and other support features.

To successfully deploy a bespoke Kubernetes cluster and achieve a cloud-like experience on SDC, one needs to replicate all the same features you get with a managed solution. At a high level, this means that we probably want to:

Automate the deployment process

Choose a networking solution

Choose the right storage solution

Handle security and authentication

The subsequent sections look at each of these challenges individually and provide enough context to help you get started.

Using a tool like Ansible can make deploying Kubernetes clusters on-premise trivial.

When deciding to manage your own Kubernetes clusters, set up a few proof-of-concept (PoC) clusters first to learn how everything works, run performance and conformance tests, and try out different configuration options.

After this phase, automating the deployment process is an important if not necessary step to ensure consistency across any clusters you build. For this, you have a few options, but the most popular are:

kubeadm: a low-level tool that helps you bootstrap a minimum viable Kubernetes cluster that conforms to best practices

kubespray: an Ansible playbook that helps deploy production-ready clusters

If you are already using Ansible, Kubespray is a great option. Otherwise, we recommend writing automation around kubeadm with your preferred playbook tool after using it a few times. This will also increase your confidence and knowledge of Kubernetes.

Provision infra for DIGIT on AWS using Terraform

Amazon Elastic Kubernetes Service (EKS) is an AWS service for deploying, managing, and scaling distributed and containerized workloads. With EKS, you can easily provision a cluster on AWS using Terraform, which automates the process. Then, deploy the DIGIT services configuration using Helm.

Know about EKS: https://www.youtube.com/watch?v=SsUnPWp5ilc

Know what is terraform: https://youtu.be/h970ZBgKINg

Setup infrastructure required for deploying DIGIT

DIGIT can be deployed on a public cloud like AWS, Azure or a private cloud.

Learn the basics of Kubernetes: https://www.youtube.com/watch?v=PH-2FfFD2PU&t=3s

Learn the basics of kubectl commands

Note: To deploy DIGIT using Github Actions, refer to the document - DIGIT Deployment Using GithubActions. With this installation approach, there's no need to manually create the infrastructure as GitHub Actions will automatically handle the creation and deployment of DIGIT.

Choose your cloud and follow the instructions to set up a Kubernetes cluster before deploying.

The image below illustrates the multiple components deployed. These include the EKS, Worker Nodes, Postgres DB, EBS Volumes, and Load Balancer.

Clone the DIGIT-DevOps repository:

Navigate to the cloned repository and checkout the release-1.28-Kubernetes branch:

Check if the correct credentials are configured using the command below. Refer to the attached doc to setup AWS Account on the local machine.

Make sure that the output of the above command reflects the AWS credentials you have set. Proceed once the details are confirmed. (If the credentials are not set, follow Step 2: Setup AWS account.)

Choose either method below to generate SSH key pairs

a. Use an online website (not recommended for production; use it only for demo setups): https://8gwifi.org/sshfunctions.jsp

b. Use openssl:

Add the public key to your GitHub account.
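For the openssl route above, one possible sequence is to generate an RSA private key with openssl and derive the OpenSSH public key from it with ssh-keygen. This is a sketch rather than the exact commands of the original guide. The output directory, the file names and the function name gen_keypair are assumptions made for this example.

```shell
#!/bin/sh
# Generate an RSA private key with openssl and derive the matching
# OpenSSH public key. $1 = output directory (created if missing).
gen_keypair() {
  dir="$1"
  mkdir -p "$dir" || return 1
  openssl genpkey -algorithm RSA -pkeyopt rsa_keygen_bits:2048 \
    -out "$dir/private_key.pem" || return 1
  # ssh-keygen refuses private keys that other users can read.
  chmod 600 "$dir/private_key.pem" || return 1
  ssh-keygen -y -f "$dir/private_key.pem" > "$dir/ssh_public_key"
}

# Writes ./digit-ssh/private_key.pem and ./digit-ssh/ssh_public_key,
# then prints the public key so it can be pasted into GitHub.
if gen_keypair ./digit-ssh; then
  cat ./digit-ssh/ssh_public_key
fi
```

Keep private_key.pem out of version control; only the contents of ssh_public_key are meant to be shared.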

Open the input.yaml file in VS Code using the command below:

code infra-as-code/terraform/sample-aws/input.yaml

If the command does not work, open the file in VS Code manually, or use any editor of your choice. Once the file is open, fill in the inputs.

Fill in the inputs as per the regex mentioned in the comments.

Go to infra-as-code/terraform/sample-aws and run init.go script to enrich different files based on input.yaml.

Once all the resources are declared, begin deploying them.

Run the terraform scripts to provision infra required to Deploy DIGIT on AWS.

CD (change directory) to the following directory and run the below commands to create the remote state.

Once the remote state is created, it is time to provision DIGIT infra. Run the below commands:

Important:

The DB password is requested during the apply stage. Remember the password you provide. It must be at least 8 characters long, otherwise RDS provisioning will fail.

The output of the apply command will be displayed on the console. Store this in a file somewhere. Values from this file will be used in the next step of deployment.

2. Use this link to get the kubeconfig from EKS for the cluster. The region code is the default region given for the availability zones in variables.tf, for example ap-south-1. The EKS cluster name is also the one set in variables.tf.

3. Verify that you can connect to the cluster by running the following command

At this point, your basic infra has been provisioned.
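The kubeconfig and verification steps above can be combined in a small helper. The sketch below assumes the AWS CLI's eks update-kubeconfig command is used to write the kubeconfig. The function name connect_eks and the AWS/KUBECTL override variables are made up for this example.

```shell
#!/bin/sh
# Fetch the kubeconfig for an EKS cluster, then list its nodes.
#   $1 = region code (for example ap-south-1), $2 = EKS cluster name
# AWS and KUBECTL can be overridden, e.g. to point at a wrapper script.
connect_eks() {
  if [ "$#" -ne 2 ]; then
    echo "usage: connect_eks <region> <cluster-name>" >&2
    return 1
  fi
  "${AWS:-aws}" eks update-kubeconfig --region "$1" --name "$2" || return 1
  "${KUBECTL:-kubectl}" get nodes
}

# Example call (cluster name is a placeholder):
#   connect_eks ap-south-1 <cluster_name>
```

If the node list is printed without an authentication error, the local machine can reach the cluster.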

Note: Refer to the DIGIT deployment documentation to deploy DIGIT services.

To destroy the previously created infrastructure with Terraform, run the command below:

The ELB is not deployed via Terraform. It is created at deployment time by the Kubernetes Ingress setup and has to be removed manually by deleting the ingress service.

kubectl delete deployment nginx-ingress-controller -n <namespace>

kubectl delete svc nginx-ingress-controller -n <namespace>

Note: Namespace can be either egov or jenkins.

Delete the S3 buckets manually from the AWS console and verify that the ELB has been deleted.

If the ELB is not deleted, delete it from the AWS console.

Run terraform destroy.

Sometimes all artefacts associated with a deployment cannot be deleted through Terraform. For example, RDS instances might have to be deleted manually. It is recommended to log in to the AWS management console and look through the infra to delete any remnants.

Provision infra for DIGIT on Azure using Terraform

Azure Kubernetes Service (AKS) manages your hosted Kubernetes environment. AKS allows you to deploy and manage containerized applications without container orchestration expertise. AKS also enables you to do many common maintenance operations without taking your app offline. These operations include provisioning, upgrading, and scaling resources on demand.

This guide takes you through a step-by-step process for installing DIGIT Core. Installation consists of provisioning and configuring the hardware and network, installing backbone services such as PostgresDB, ElasticSearch and Apache Kafka, and installing DIGIT core services such as the API Gateway and User Service. We also install a sample product built on top of the DIGIT core called Public Grievance Redressal (PGR). DIGIT Core can be installed on a public cloud like AWS or Azure, or on a private cloud. Installing DIGIT on personal computers is not recommended.

Follow the steps outlined in the sections below to install DIGIT.

There are several ways to deploy the solution to the cloud. In this case, we use Terraform Infra-as-code.

Terraform is an open-source infrastructure as code (IaC) software tool that allows DevOps engineers to programmatically provision the physical resources an application requires to run.

Infrastructure as code is an IT practice that manages an application's underlying IT infrastructure through programming. This approach to resource allocation allows developers to logically manage, monitor and provision resources -- as opposed to requiring that an operations team manually configure each required resource.

Terraform users define and enforce infrastructure configurations by using a JSON-like configuration language called HCL (HashiCorp Configuration Language). HCL's simple syntax makes it easy for DevOps teams to provision and re-provision infrastructure across multiple clouds and on-premises data centres.

Before we provision the cloud resources, we need to understand and be sure about what resources need to be provisioned by Terraform to deploy DIGIT. The following picture shows the various key components. (AKS, Node Pools, Postgres DB, Volumes, Load Balancer)

Ideally, one would write the terraform script from scratch using this doc.

Here, the Terraform script is already written. It provisions the production-grade DIGIT infra and can be reused and customized with user-specific configuration.

Clone the DIGIT-DevOps repository, which holds all the sample Terraform scripts available for you to leverage.

2. Change the main.tf according to your requirements.

3. Declare the variables in variables.tf

Save the file and exit the editor.

4. Create a Terraform output file (output.tf) and paste the following code into the file.

Once you have finished declaring the resources, you can deploy all resources.

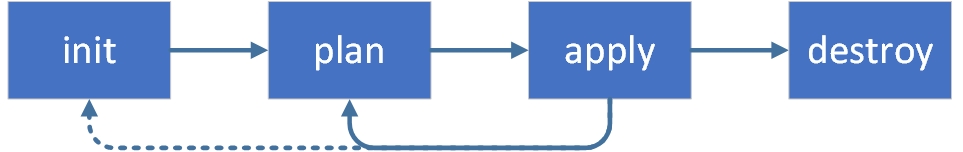

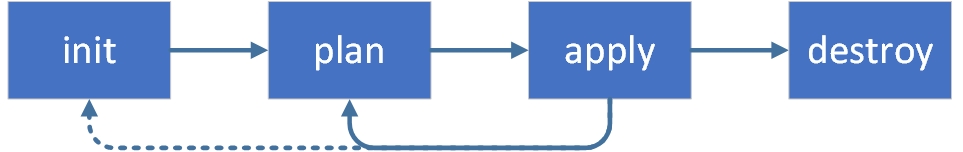

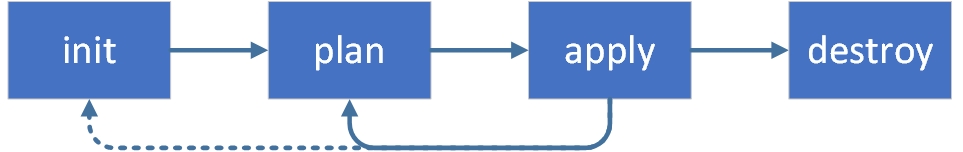

terraform init: command is used to initialize a working directory containing Terraform configuration files.

terraform plan: command creates an execution plan, which lets you preview the changes that Terraform plans to make to your infrastructure.

terraform apply: command executes the actions proposed in a Terraform plan to create or update infrastructure.
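The three commands described above are typically run in sequence from the directory that holds the .tf files. The following is a minimal sketch of that sequence; the function name provision and the TERRAFORM override variable are assumptions for this example, and the directory is whichever Terraform folder you are working in.

```shell
#!/bin/sh
# Run init, plan and apply in one Terraform directory.
#   $1 = directory holding the .tf files
# The plan is saved to a file so that apply executes exactly what was
# shown by plan. The body runs in a subshell, so the caller's working
# directory is left unchanged.
provision() (
  cd "$1" || exit 1
  tf="${TERRAFORM:-terraform}"
  "$tf" init || exit 1
  "$tf" plan -out=tfplan || exit 1
  "$tf" apply tfplan
)

# Example call (directory is a placeholder):
#   provision <terraform-directory>
```

Applying a saved plan does not ask for interactive approval, so review the plan output before the apply step when running the commands by hand.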

After the complete creation, you can see resources in your Azure account.

We now know what the Terraform script does, the resource graph that it provisions and which custom values must be given for your environment. The next step is to run the Terraform scripts to provision the infra required to deploy DIGIT on Azure.

Use the cd command to move into the following directory, run the following commands one by one and watch the output closely.

The Kubernetes tools can be used to verify the newly created cluster.

Once the terraform apply execution is complete, it generates the Kubernetes configuration file. You can also get it from the Terraform state.

Use the command below to get the kubeconfig. It is stored automatically in the .kube folder.

3. Verify the health of the cluster.

All worker nodes should report the status Ready.

Note: Please refer to the DIGIT deployment documentation to deploy DIGIT services.
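The Ready check above can be automated by parsing the node list. The sketch below assumes the usual column layout of kubectl get nodes --no-headers, where the second column is the node status. The function name all_nodes_ready is made up for this example.

```shell
#!/bin/sh
# Read "kubectl get nodes --no-headers" output on stdin. Succeed only if
# at least one node is listed and every node's STATUS is exactly "Ready".
# A status such as "Ready,SchedulingDisabled" is reported as not ready.
all_nodes_ready() {
  awk '
    { total++ }
    $2 != "Ready" { bad++; print "not ready: " $1 " (" $2 ")" }
    END { exit (total == 0 || bad > 0) ? 1 : 0 }
  '
}

if command -v kubectl >/dev/null 2>&1; then
  kubectl get nodes --no-headers | all_nodes_ready \
    && echo "All nodes are Ready" \
    || echo "Some nodes are not Ready, or the cluster is unreachable"
fi
```

Because the function reads from standard input, it can also be applied to saved output from an earlier run.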

This page details the steps to deploy the core platform services and reference applications.

The steps here can be used to deploy:

DIGIT core platform services

Public Grievance & Redressal module

Trade Licence module

Property Tax module

Water & Sewerage module etc.

DIGIT uses Golang (required v1.13.3) automated scripts to deploy the builds onto Kubernetes - Linux or Windows or Mac

All DIGIT services are packaged using Helm charts

kubectl is a CLI to connect to the Kubernetes cluster from your machine

Install cURL for making API calls

Install Visual Studio Code for better code/configuration editing capabilities

Install Postman to run DIGIT bootstrap scripts

Once all the deployments configs are ready, run the command given below. Input the necessary details as prompted on the screen and the interactive installer will take care of the rest.

All done. Wait for about 10 minutes; once the DIGIT setup is complete, the application will run on the URL.

Note:

If you do not have your domain yet, you can edit the host file entries and map the nginx-ingress-service load balancer id like below

When you find it, add the following lines to the host file, save and close it.

aws-load-balancer-id digit.try.com

You can now test the DIGIT application status in the command prompt/terminal using the command below.

Run the egov-deployer golang script from the .

If you have a GoDaddy account or similar, with access to edit DNS records, you can map the load balancer ID to the desired DNS. Create a record with the load balancer ID and domain.

Provision and Configure Infrastructure

Deploy DIGIT Backbone and Core Services

This documentation provides a detailed explanation of how to create a new helm chart using common templates and deploy it using helmfile.

helm

Clone the DIGIT-DevOps repository using the command below and check out the DIGIT-2.9LTS branch.

Navigate to the common_chart_template chart

Edit the chart.yaml with your service_name and update the dependency chart path.

Now, edit the values.yaml file to override the values in the common chart and provide the values required by your service. If your service does not depend on a database, disable the DB migration by setting enable to false. If you are using your own Docker account, also update the Docker container in the values.yaml file below.

After making these changes, add this chart configuration to the helmfile.yaml file.

Steps to prepare the deployment configuration file

It's important to prepare a global deployment configuration yaml file that contains all necessary user-specific custom values like URL, gateways, persistent storage ids, DB details etc.

Know the basics of Kubernetes: https://www.youtube.com/watch?v=PH-2FfFD2PU&t=3s

Know the basics of kubectl commands

Know kubernetes manifests: https://www.youtube.com/watch?v=ohSUtEfDefc

Know how to manage env values, secrets of any service deployed in kubernetes https://www.youtube.com/watch?v=OW244LxB4oI

Know how to port forward to a pod running inside k8s cluster and work locally https://www.youtube.com/watch?v=TT3nd5n5Yus

Know sops to secure your keys/creds: https://www.youtube.com/watch?v=DWzJ87KbwxA

Post-Kubernetes Cluster setup, the deployment consists of 2 stages. As part of this sample exercise, we can deploy PGR and show the required configurations. The deployment steps are similar for all other modules except that the prerequisites differ depending on required features like SMS Gateway, Payment Gateway, etc.

Navigate to the following file in your local machine from the previously cloned DevOps git repository.

Step 1: Clone the following DIGIT-DevOps repo (if not already done as part of the infra setup). You may need to install git first, then clone the repo to your machine.

$ git clone -b digit-lts-go https://github.com/egovernments/DIGIT-DevOps

Step 2: After cloning the repo, cd to the DIGIT-DevOps folder and type the "code" command to open the visual editor with all the files from the DIGIT-DevOps repo.

Step 3: Update the deployment config file with your details. You can use the egov-demo-sample template.

Replace the following as per the applicable values -

Important: Add the domain name that you want to use for accessing DIGIT. (Do not use the dummy domain.)

SMS gateway to receive OTP, transaction mobile notification, etc.

MDMS, Config repo URL, here is where you provide master data, tenants and various user/role access details.

GMap key for the location service

Payment gateway, in case you use PT, TL, etc

Step 4: Update your credentials and sensitive data in the secret file as per your details.

credentials, secrets (You need to encrypt using sops and create a <env>-secret.yaml separately)

SOPS expects an encryption key to encrypt/decrypt a specified plain text and keep the details secured. The following are the options to generate the encryption key -

Option 1: Generate PGP keys https://fedingo.com/how-to-generate-pgp-key-in-ubuntu

Option 2: Create AWS KMS keys when you want to use the AWS cloud provider.

Once you generate your encryption key, create a .sops.yaml configuration file under the /helm directory of the cloned repo to define the keys used for specific files. Refer to the SOPS doc for more details.

Note: For demo purposes, you can use the egov-demo-secrets.yaml as it is without SOPS configuration, but make sure you update your specific details like Git SSH, URL etc. When you decide to push these configurations into any git or public space, make sure you follow the SOPS configuration mentioned in this article to encrypt your secrets.
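As an illustration of the .sops.yaml file described above, the sketch below writes a minimal configuration with one creation rule for a PGP key. The path_regex value, the function name write_sops_config and the fingerprint placeholder are assumptions for this example; adjust the rule to match your own secret file names and key type.

```shell
#!/bin/sh
# Write a minimal .sops.yaml that applies one PGP key to every file
# whose name contains "secrets" and ends in .yaml.
#   $1 = target directory, $2 = PGP key fingerprint
write_sops_config() {
  if [ "$#" -ne 2 ]; then
    echo "usage: write_sops_config <dir> <pgp-fingerprint>" >&2
    return 1
  fi
  cat > "$1/.sops.yaml" <<EOF
creation_rules:
  - path_regex: .*secrets.*\.yaml\$
    pgp: "$2"
EOF
}

# Example call, run from the root of the cloned repo (placeholder value):
#   write_sops_config helm <pgp-fingerprint>
```

With the file in place, a command such as sops --encrypt --in-place <env>-secrets.yaml run from that directory should pick up the matching rule.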

Step 5: Important: Fork the following repos to your GitHub organization account. They contain the master data and default configs (master data, ULB, tenant details, users, etc.) that you will customize later for your specific implementation.

Once you fork the repos into your GitHub organization account, create a GitHub user account, generate a new SSH authentication key and add it to that user account.

The new GitHub user should be given access to the earlier forked repos.

Add the ssh private key that you generated in the previous step to egov-demo-secrets.yaml under the git-sync section.

Modify the services git-Sync repo and branch with your fork repo and branch in egov-demo.yaml.

Step 6: Update the deployment configs for the below as per your specification:

Number of replicas/scale of each service (depending on whether dev or prod load).

Update the SMS Gateway, Email Gateway, and Payment Gateway details for the notification and payment gateway services, etc.

Update the config, MDMS GitHub repos wherever marked

Update GMap key (In case you are using Google Map services in your PGR, PT, TL, etc)

Create one private S3 bucket for Filestore and one public bucket for logos. Add the respective bucket details and create an IAM user with access to the S3 buckets. Add the IAM user details to <env>-secrets.yaml.

URL/DNS on which the DIGIT will be exposed.

SSL certificate for the above URL.

Any specific endpoint configs (Internal/external).

This tutorial walks you through setting up CI/CD.

Terraform helps you build a graph of all your resources and parallelizes the creation or modification of any non-dependent resources. Thus, Terraform builds infrastructure as efficiently as possible while providing the operators with clear insight into the dependencies on the infrastructure.

Fork the repos below to your GitHub Organization account

Go lang (version 1.13.X)

An AWS account with admin access to provision the EKS service. You can subscribe to a free AWS account to learn the basics, but the free tier is limited and this demo requires a commercial subscription to the EKS service. The cost for a one- or two-day trial might range between Rs 500-1000. (Note: After the demo, eGov provides internal staff with time-bound access of 2-3 hours to eGov's AWS account, based on the request and the number of slots available per day.)

Install kubectl on your local machine to interact with the Kubernetes cluster.

Install Helm to help package the services along with the configurations, environment, secrets, etc. into Kubernetes manifests.

Install terraform version (0.14.10) for the Infra-as-code (IaC) to provision cloud resources as code and with desired resource graph. It also helps destroy the cluster in one go.

Install AWS CLI on your local machine so that you can use AWS CLI commands to provision and manage the cloud resources on your account.

Install AWS IAM Authenticator to help authenticate your connection from your local machine and deploy DIGIT services.

Use the AWS IAM User credentials provided for the Terraform (Infra-as-code) to connect to the AWS account and provision the cloud resources.

You will receive a Secret Access Key and Access Key ID. Save the keys.

Open the terminal and run the command given below, assuming the AWS CLI is already installed. Provide the credentials and leave the region and output format blank; the credentials are then saved.

The above creates the credentials file on your machine at ~/.aws/credentials (for example, /Users/<username>/.aws/credentials on macOS).

Before we provision the cloud resources, we need to understand and be sure about what resources need to be provisioned by terraform to deploy CI/CD.

The following is the resource graph that we are going to provision using terraform in a standard way so that every time and for every environment, the infra is the same.

EKS Control Plane (Kubernetes master)

Worker node group (VMs with the estimated number of vCPUs, Memory)

EBS Volumes (Persistent volumes)

VPCs (Private networks)

Users to access, deploy and read-only

Ideally, one would write the terraform script from scratch using this doc.

Here we have already written the terraform script that provisions the production-grade DIGIT Infra and can be customized with the specified configuration.

Clone the DIGIT-DevOps GitHub repo. The terraform script to provision the EKS cluster is available in this repo. The structure of the files is given below.

Here, you will find the main.tf under each of the modules that have the provisioning definition for resources like EKS cluster, storage, etc. All these are modularized and react as per the customized options provided.

Example:

VPC Resources -

VPC

Subnets

Internet Gateway

Route Table

EKS Cluster Resources -

IAM Role to allow EKS service to manage other AWS services

EC2 Security Group to allow networking traffic with the EKS cluster

EKS Cluster

EKS Worker Nodes Resources -

IAM role allowing Kubernetes actions to access other AWS services

EC2 Security Group to allow networking traffic

Data source to fetch the latest EKS worker AMI

AutoScaling launch configuration to configure worker instances

AutoScaling group to launch worker instances

Storage Module -

The configuration in this directory creates the EBS volumes and attaches them.

The following main.tf creates an S3 bucket that stores the state of each execution to keep track of it.

The following main.tf contains the detailed resource definitions that need to be provisioned.

Dir: DIGIT-DevOps/Infra-as-code/terraform/egov-cicd

Define your configurations in variables.tf and provide the environment-specific cloud requirements. The same terraform template can be used to customize the configurations.

The following values need to be set in the files below. Values left blank are prompted for during execution.

We have covered what the terraform script does, the resources graph that it provisions and what custom values should be given with respect to the selected environment.

Now, run the terraform scripts to provision the infra required to Deploy DIGIT on AWS.

Use the 'cd' command to change to the following directory and run the following commands. Check the output.

After successful execution, the following resources get created and can be verified by the command "terraform output".

s3 bucket: to store terraform state

Network: VPC, security groups

IAM user auth: uses Keybase to create the admin, deployer and user accounts

Use https://keybase.io/ to create your own PGP key. This creates both the public and the private key on your machine. Upload the public key to the Keybase account that you have just created, give it a name, and make sure that you mention that name in your terraform. This allows you to encrypt sensitive information.

Example: Create a Keybase user, which is "egovterraform" in the case of eGov. The uploaded public key is then available at https://keybase.io/egovterraform/pgp_keys.asc

Use this portal to decrypt the secret key. To decrypt the PGP message, upload the PGP Message, PGP Private Key and Passphrase.

EKS cluster: with master(s) & worker node(s).

Storage(s): for es-master, es-data-v1, es-master-infra, es-data-infra-v1, zookeeper, kafka, kafka-infra.

Use this link to fetch the kubeconfig file from EKS. This enables you to connect to the cluster from your local machine and deploy DIGIT services to the cluster.

Finally, verify that you are able to connect to the cluster by running the command below:

Voila! You are all set and can now deploy Jenkins.

Post infra setup (Kubernetes Cluster), we start with deploying the Jenkins and kaniko-cache-warmer.

Sub domain to expose CI/CD URL

GitHub Oauth App (this provides you with the clientId, clientSecret)

Under Authorization callback URL, enter the URL below (replace <domain_name> with your domain): https://<domain_name>/securityRealm/finishLogin

Generate a new ssh key for the above user (this provides the ssh public and private keys)

Add the earlier created ssh public key to GitHub user account

Add ssh private key to the gitReadSshPrivateKey

With previously created GitHub users generate a personal read-only access token

Docker hub account details (username and password)

SSL certificate for the sub-domain

Prepare a <ci.yaml> master config file and a <ci-secrets.yaml> file. You can name these files as desired. They hold the following configurations:

credentials, secrets (you need to encrypt these using sops and create the ci-secrets.yaml separately)

Add subdomain name in ci.yaml

Check and add your project specific ci-secrets.yaml details (like github Oauth app clientId, clientSecret, gitReadSshPrivateKey, gitReadAccessToken, dockerConfigJson, dockerUsername and dockerPassword)

To create a Jenkins namespace mark this flag true

Add your environment-specific kubeconfigs under kubConfigs, as in https://github.com/egovernments/DIGIT-DevOps/blob/release/config-as-code/environments/ci-demo-secrets.yaml#L50

KubeConfig environment name and deploymentJobs name from ci.yaml should be the same

Update the CIOps and DIGIT-DevOps repo ssh url with the forked repo's ssh url.

Make sure earlier created github users have read-only access to the forked DIGIT-DevOps and CIOps repos.

SSL certificate for the sub-domain.

Update the DOCKER_NAMESPACE with your docker hub organization name.

Update the repo name "egovio" with your docker hub organization name in buildPipeline.groovy

Remove the below env:

Jenkins is launched. You can access the same through your sub-domain configured in ci.yaml.

Set up DIGIT using HelmFile. You can still try it out and give us feedback.

This guide walks you through the steps required to set up DIGIT using helmfile.

git

Kubernetes Cluster

Helmfile is a declarative spec for deploying helm charts. It lets you…

Keep a directory of chart value files and maintain changes in version control.

Apply CI/CD to configuration changes.

Periodically sync to avoid skew in environments.

To avoid upgrades for each iteration of helm, the helmfile executable delegates to helm - as a result, helm must be installed.

Standardisation of Helm templates (Override specific parameters such as namespace)

To improve the Utilisation of Helm capabilities (Rollback)

Easy to add any open-source helm chart to your DIGIT stack

download one of the releases

run as a container

Archlinux: install via pacman -S helmfile

openSUSE: install via zypper in helmfile assuming you are on Tumbleweed; if you are on Leap you must add the kubic repo for your distribution version once before that command, e.g. zypper ar https://download.opensuse.org/repositories/devel:/kubic/openSUSE_Leap_\$releasever kubic

Windows (using scoop): scoop install helmfile

macOS (using homebrew): brew install helmfile

The Helmfile Docker images are available in GHCR. There is no latest tag, since the 0.x versions can contain breaking changes, so pick the right tag. Example using helmfile 0.156.0:

You can also use a shim to make calling the binary easier:

The helmfile init sub-command checks the dependencies required for helmfile operation, such as helm, helm diff plugin, helm secrets plugin, helm helm-git plugin, helm s3 plugin. When it does not exist or the version is too low, it can be installed automatically.

The helmfile sync sub-command syncs your cluster state as described in your helmfile. The default helmfile is helmfile.yaml, but any YAML file can be passed by specifying a --file path/to/your/yaml/file flag.

The helmfile apply sub-command begins by executing diff. If diff finds any changes, sync is executed. Adding --interactive instructs Helmfile to request your confirmation before sync.

The helmfile destroy sub-command uninstalls and purges all the releases defined in the manifests. helmfile --interactive destroy instructs Helmfile to request your confirmation before actually deleting releases.
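The sub-commands and flags described above can be assembled as in the following minimal Python sketch. It only builds the argument lists and does not run anything. The function name `helmfile_command` is hypothetical and chosen for illustration; the sub-commands and the `--file` and `--interactive` flags are the ones named in the text.

```python
from typing import List, Optional


def helmfile_command(subcommand: str,
                     helmfile_path: Optional[str] = None,
                     interactive: bool = False) -> List[str]:
    """Build the argument list for one helmfile invocation (nothing is executed)."""
    allowed = {"init", "sync", "apply", "destroy"}
    if subcommand not in allowed:
        raise ValueError(f"unsupported sub-command: {subcommand}")
    args = ["helmfile"]
    if helmfile_path is not None:
        # Without --file, helmfile falls back to the default helmfile.yaml.
        args += ["--file", helmfile_path]
    if interactive:
        # Ask for confirmation before syncing or deleting releases.
        args.append("--interactive")
    args.append(subcommand)
    return args


if __name__ == "__main__":
    print(" ".join(helmfile_command("sync", "path/to/your/yaml/file")))
    print(" ".join(helmfile_command("apply", interactive=True)))
    print(" ".join(helmfile_command("destroy", interactive=True)))
```

The returned list can be passed to `subprocess.run` once you have reviewed it. Keeping the command construction separate from execution makes it easy to check what will run before it touches the cluster.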

Update domain name in env.yaml

Update the db password, flywaypassword, loginusername, loginpassword and git-sync private key in env-secrets.yaml

Note: Make sure the db_password and flywaypassword are the same.

Note:

1. Generate SSH key pairs using the method below. Using the online website (not recommended in a production setup; use it only for demo setups): https://8gwifi.org/sshfunctions.jsp

2. Add the public key to your GitHub account - (reference: https://www.youtube.com/watch?v=9C7_jBn9XJ0&ab_channel=AOSNote )
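Before installing, it can help to check the secrets against the notes above. The following is a minimal Python sketch, assuming the values from env-secrets.yaml have already been loaded into a flat dictionary. The flat layout and the function name `check_env_secrets` are assumptions made for illustration; the real file may nest these keys differently.

```python
from typing import Dict, List

# Keys named in this guide; the flat layout is an assumption for illustration.
REQUIRED_KEYS = ["db_password", "flywaypassword", "loginusername",
                 "loginpassword", "ssh_private_key"]


def check_env_secrets(secrets: Dict[str, str]) -> List[str]:
    """Return a list of problems found in the secrets; empty means no problems."""
    problems = []
    for key in REQUIRED_KEYS:
        if not secrets.get(key):
            problems.append(f"{key} is missing or empty")
    # The guide requires the database and flyway passwords to match.
    if secrets.get("db_password") != secrets.get("flywaypassword"):
        problems.append("db_password and flywaypassword must be the same")
    return problems


if __name__ == "__main__":
    example = {
        "db_password": "example-password",
        "flywaypassword": "example-password",
        "loginusername": "example-user",
        "loginpassword": "example-login-password",
        "ssh_private_key": "-----BEGIN RSA PRIVATE KEY-----...",
    }
    for problem in check_env_secrets(example):
        print(problem)
```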

Run the below command to install DIGIT successfully.

This guide outlines a deployment strategy for running containerized applications on Kubernetes, focusing on seamless database integration. It's suitable for teams looking to simplify their database setup using in-cluster PostgreSQL or externally managed database services.

By updating the Kubernetes deployment configuration, teams can easily switch from an in-cluster PostgreSQL database to a managed service. This move enhances scalability and reliability while reducing the operational overhead of database management.

Scalability and Reliability: Managed services offer superior scalability and reliability compared to in-cluster databases.

Reduced Operational Overhead: Outsourcing database management allows teams to concentrate on application development.

To integrate a managed PostgreSQL service, modify the following parameters in the deploy-as-code/charts/environments/env.yaml configuration file:

db-host: Update with the database service host address.

db-name: Update with the specific database name.

db-url: Update with the complete database connection URL.

domain: Update domain name with your domain name

Update db password, db username, flyway username, flyway password, login username, login password and git-sync private key in env-secrets.yaml

Note:

1. Generate SSH key pairs using the method below. Using the online website (not recommended in a production setup; use it only for demo setups): https://8gwifi.org/sshfunctions.jsp

2. Add the public key to your GitHub account - (reference: https://www.youtube.com/watch?v=9C7_jBn9XJ0&ab_channel=AOSNote )

Run the below command to install DIGIT successfully.

Open the URL below to log in to the employee dashboard with SUPERUSER access

Log in with the user credentials which you have provided in the below file path

Tested Environment

This deployment approach has been thoroughly tested on an Amazon Web Services Elastic Kubernetes Service (AWS EKS) Cluster with Kubernetes version 1.28.

Release chart helps to deploy the product specific modules in one click

This section of the document walks you through the details of how to prepare a new release chart for existing products.

Git

Install Visual Studio Code for better code visualization/editing capabilities

Clone the DIGIT-DevOps repo, which holds all the release charts for your reference.

Create a new release version of the below products.

Select your product, copy the previous release version file, and rename it with your new version.

The above command copies dependancy_chart-digit-v2.6.yaml and names the copy after your new release version.

Note: replace <your_release_version> with your new release version.
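The copy-and-rename step can also be scripted. Below is a minimal Python sketch that copies the previous release chart to a file named after the new version. The function name `new_release_chart` and its parameters are hypothetical; the file naming follows the dependancy_chart-<product>-<version>.yaml pattern used in this guide. The sketch does not edit the file contents, so the version and image updates described in the next steps still need to be made by hand.

```python
import shutil
from pathlib import Path


def new_release_chart(product_dir: Path, product: str,
                      previous_version: str, new_version: str) -> Path:
    """Copy the previous release chart to a file named for the new version."""
    source = product_dir / f"dependancy_chart-{product}-{previous_version}.yaml"
    target = product_dir / f"dependancy_chart-{product}-{new_version}.yaml"
    if not source.is_file():
        raise FileNotFoundError(source)
    if target.exists():
        # Refuse to overwrite an existing release chart.
        raise FileExistsError(target)
    shutil.copyfile(source, target)
    return target
```

For example, `new_release_chart(Path("<path_to_product_folder>"), "digit", "v2.6", "<your_release_version>")` would create the new chart file next to the v2.6 one, where both placeholders are yours to fill in.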

Navigate to the release file on your local machine. Open the file using Visual Studio Code or any other file editor.

Update the release version "v2.6" with your new release version.

Update the service images of the modules (core, business, utilities, m_pgr, m_property-tax, etc.) with the new release service images.

Add new modules

name - add your module name in the "m_demo" format, where "m" stands for module and "demo" is your module name

dependencies - add your module dependencies (name of other modules)

services - add your module-specific new service images
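When adding a module, a common mistake is to list a dependency that is not defined anywhere in the chart. The following minimal Python sketch checks for that. It assumes the modules have been loaded into a list of dictionaries with the name, dependencies and services fields described above; the function name `missing_dependencies` and the sample image strings are hypothetical.

```python
from typing import Dict, List


def missing_dependencies(modules: List[Dict]) -> Dict[str, List[str]]:
    """Map each module name to the dependencies that no module defines."""
    known = {module["name"] for module in modules}
    missing: Dict[str, List[str]] = {}
    for module in modules:
        unknown = [dep for dep in module.get("dependencies", [])
                   if dep not in known]
        if unknown:
            missing[module["name"]] = unknown
    return missing


# Hypothetical sample data; the image strings are placeholders, not real images.
EXAMPLE_MODULES = [
    {"name": "core", "dependencies": [], "services": ["<core_image>:<tag>"]},
    {"name": "business", "dependencies": ["core"],
     "services": ["<business_image>:<tag>"]},
    {"name": "m_demo", "dependencies": ["business"],
     "services": ["<demo_image>:<tag>"]},
]

if __name__ == "__main__":
    print(missing_dependencies(EXAMPLE_MODULES))
```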

This section of the document walks you through the details of how to prepare a new release chart for new products.

Git

GitHub Organization Account

Install Visual Studio Code for better code visualization/editing capabilities

When you have a new product to introduce, you can follow the below steps to create the release chart for a new product.

eGov partners can follow the below steps:

Fork the DIGIT-DevOps repo to your GitHub organization account

Clone the forked DIGIT-DevOps repo to your local machine

git clone --branch release https://github.com/<your_organization_account_name>/DIGIT-DevOps.git

Note: replace this <your_organization_account_name> with your github organization account name.

Navigate to the product-release-charts folder and create a new folder with your product name.

cd DIGIT-DevOps/config-as-code/product-release-charts

mkdir <new_product_name>

Note: replace <new_product_name> with your new product name.

Create a new release chart file in the above-created product folder.

touch dependancy_chart-<new_product_name>-<release_version>.yaml

Open your release chart file dependancy_chart-<new_product_name>-<release_version>.yaml and start preparing it as mentioned in the below release template.

eGov users can follow the below steps:

Clone the DIGIT-DevOps repo to your local machine

git clone --branch release https://github.com/egovernments/DIGIT-DevOps.git

Navigate to the product-release-charts folder and create a new folder with your product name.

cd DIGIT-DevOps/config-as-code/product-release-charts

mkdir <new_product_name>

Note: replace <new_product_name> with your new product name.

Create a new release chart file in the above-created product folder.

touch dependancy_chart-<new_product_name>-<release_version>.yaml

Open your release chart file dependancy_chart-<new_product_name>-<release_version>.yaml and start preparing it as mentioned in the below release template.

Installation guide for quick deployment of DIGIT via GitHub Actions in AWS. (This setup is strictly for setting up dev and test environments)

Before you begin with the installation:

ap-south-1 is hardcoded in terraform script. It will be moved to input.yaml shortly.

Secrets should be encrypted using SOPS. Currently, a private repository is needed to restrict access to sensitive information.

This guide provides step-by-step instructions for installing DIGIT using GitHub Actions within an AWS environment.

AWS account with administrative privilege

GitHub account

Skip this step if you already have an access key and a secret key

Create an IAM User with administrative privilege in your AWS account

Generate ACCESS_KEY and SECRET_KEY for the IAM user.

Enable GitHub workflows by clicking "I understand my workflows, go ahead and enable them"

Navigate to the repository settings, under the security section go to Secrets and Variables, click on actions and add the following repository secrets one by one by clicking on New repository secret:

The following secrets need to be added:

Once all four secrets are added it will look like the below:

Switch the branch from master to DIGIT-2.9LTS using the below command.

Choose the following method to generate an SSH key pair:

Method a: Use an online website (Note: This is not recommended for production setups, only for demo purposes): https://8gwifi.org/sshfunctions.jsp

Store the generated private key and public key in separate files on your local machine.

The private key will look like:

-----BEGIN RSA PRIVATE KEY----- MIIEpAIBAAKCAQEAue4+1*********************K7mGXRIv6enEP4lN/y9i287wsNBpg+IDGjIV************************************************************************************ +zrt79wBgG5vlGMoT1hysRDpxNNlDdimE6G8OHaCj6e5cwhXrMt1swKFUwVsZaFx UMv1xVFU/OsrJ8v8***************************************************************** **********************Sd74a4d2h28pIEHNbrlvAVn7Zt9IDC kgske+VBY+X0D2en1l8bt3Vdnn5xgcDQsPmp6GdoRfE2luJ6lAe+mdkCgYEA0wUj tUHRH9sI3X86wZVREt*************************************************************** **********************************poTy6hNQr9IT2TsBckuN/qqockBR/j+iRap7lec3tJM vdmMVP0Ed7GjBiSBVeHeHVg+Dt6+AqayWqU0hPkCgYB6o+bof7XnnsmBjvLVFO15 LlDiIZQFBtr7CriRDD2Nx************************************************************* ************************************TCaHk8CGmA+TXSKM9q7cTtMb6ythUQhZrpq 0EEY5TgQKBgQ*************************************************************8/PD+mT 5jFvon5Q== -----END RSA PRIVATE KEY-----

And the public key will look like the below:

ssh-rsa AAAAB3NzaC1yc2EAAAADAQA*************************************HBFUNjyMLpFltqwbsA*************************************MaMhX7Ou3*************************************PWHKx*************************************oVTBWxloXFQy/XFU*************************************W/QVdgs5xp+P5hhZgm9WpdN3Cz*************************************clYmUHoPCPwKIqElX2DZzYGJc*************************************y4gR

In your editor go to DIGIT-DevOps/infra-as-code/terraform/sample-aws.

Open input.yaml and enter details such as domain_name, cluster_name, bucket_name, db_name and add public_ssh_key generated in the above step. (Fill in the inputs as per the regex mentioned in the comments). The following variables need to be set in the input.yaml

Go to deploy-as-code/charts/environments.

Open env-secrets.yaml.

Enter db_password and ssh_private_key (in git-sync section). (please make sure that the indentation is the same as the sample value given for ssh_private_key)

After entering all the details, push these changes to the remote GitHub repository (in the same DIGIT-2.9LTS branch). Open the Actions tab in your GitHub account to view the workflow. You should see that the workflow has started and that the pipelines complete successfully.

This indicates that your setup is correctly configured, and your application is ready to be deployed. Monitor the output of the workflow for any errors or success messages to ensure everything is functioning as expected.

If not, create the profile using:

Run the below command to export AWS Credentials

Proceed only after verifying the correct configuration of your credentials. For any uncertainties on how to set up the credentials, consult the AWS documentation for detailed instructions. To check if credentials are properly set run the command:

Run the following command to get Kubernetes configuration:

Verify that you can connect to the cluster by running the following command.

Once the deployment is done get the CNAME of the nginx-ingress-controller:

The output of this will be something like this:

Add the CNAME to your domain provider against your domain name.

As you wrap up your work with DIGIT, ensuring a smooth and error-free cleanup of the resources is crucial. Regular monitoring of the GitHub Actions workflow's output is essential during the destruction process. Watch out for any error messages or signs of issues. A successful job completion will be confirmed by a success message in the GitHub Actions window, indicating that the infrastructure has been effectively destroyed.

When you're ready to remove DIGIT and clean up the resources it created, proceed with executing the terraform_infra_destruction job. This action is designed to dismantle all setup resources, clearing the environment neatly.

We hope your experience with DIGIT was positive and that this guide makes the uninstallation process straightforward.

To initiate the destruction of a Terraform-managed infrastructure, follow these steps:

Navigate to Actions.

Click DIGIT-Install workflow.

Select Run workflow.

When prompted, type "destroy". This action starts the terraform_infra_destruction job.

You can observe the progress of the destruction job in the actions window.

Note: For DIGIT configurations created using the master branch.

If DIGIT is installed from a branch other than the main one, ensure that the branch name is correctly specified in the workflow file. For instance, if the installation is done from the digit-install branch, the following snippet should be updated to reflect that.

Since there are many DIGIT services and the development code is part of various git repos, one needs to understand the concept of cicd-as-service which is open-sourced. This page guides you through the process of creating a CI/CD pipeline.

The initial steps for integrating any new service/app to the CI/CD are discussed below.

Once the desired service is ready for integration: decide the service name, type of service, and if DB migration is required or not. While you commit the source code of the service to the git repository, the following file should be added with the relevant details which are mentioned below:

build-config.yml – It is present under the build directory in the repository

This file contains the below details used for creating the automated Jenkins pipeline job for the newly created service.

While integrating a new service/app, the above content needs to be added to the build-config.yml file of that app repository. For example: to onboard a new service called egov-test, the build-config.yml should be added as mentioned below.

If a job requires multiple images to be created (DB Migration), then it should be added as below:

Note - If a new repository is created then the build-config.yml is created under the build folder and the config values are added to it.

The git repository URL is then added to the Job Builder parameters

When the Jenkins Job => job builder is executed, the CI pipeline gets created automatically based on the above details in build-config.yml. For example, the egov-test job is created in the builds/DIGIT-OSS/core-services folder in Jenkins because its build-config entry was added under core-services, and the build-config must be on the "master" branch. Once the pipeline job is created, it can be executed for any feature branch with build parameters that specify the branch to be built (master or feature branch).

As a result of the pipeline execution, the respective app/service docker image is built and pushed to the Docker repository.

On the repo, provide read-only access to the GitHub user (created during the CI/CD deployment)

The Jenkins CI pipeline is configured and managed 'as code'.

Job Builder – Job Builder is a Generic Jenkins job which creates the Jenkins pipeline automatically which is then used to build the application, create the docker image of it and push the image to the Docker repository. The Job Builder job requires the git repository URL as a parameter. It clones the respective git repository and reads the build/build-config.yml file for each git repository and uses it to create the service build job.

Check and add your repo ssh URL in ci.yaml

If the git repository ssh URL is available, build the Job-Builder Job.

If the git repository URL is not available, check with the team and add it.

The services are deployed and managed on a Kubernetes cluster in cloud platforms like AWS, Azure, GCP, OpenStack, etc. Here, we use helm charts to manage and generate the Kubernetes manifest files and use them for further deployment to the respective Kubernetes cluster. Each service is created as charts which have the below-mentioned files.

Note: The steps below are only for the introduction and implementation of new services.

To deploy a new service, you need to create a new helm chart for it (refer to the above example). The chart should be created under the charts/helm directory in the DIGIT-DevOps repository.

If you are going to introduce a new module with the help of multiple services, we suggest you create a new Directory with your module name.

Example:

You can refer to the existing helm chart structure here

This chart can also be modified further based on user requirements.

Deploying manifests to the Kubernetes cluster is simple. There are environment-specific Jenkins jobs for each state. You need to provide the image name or the service name to the respective Jenkins deployment job.

The deployment Jenkins job internally performs the following operations:

Reads the image name or the service name given and finds the chart that is specific to it.

Generates the Kubernetes manifests files from the chart using the helm template engine.

Executes the deployment manifest with the specified docker image(s) to the Kubernetes cluster.
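The three operations above can be pictured with the following minimal Python sketch. It is an illustration under stated assumptions, not the actual Jenkins job: the names `split_image`, `plan_deployment` and `DeploymentPlan` are hypothetical, the chart lookup is a plain dictionary, and the commands are only planned, not executed. How the image tag is passed into a chart depends on the chart's own values, so the sketch returns the tag without wiring it in.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class DeploymentPlan:
    service: str
    tag: Optional[str]
    chart_dir: str
    commands: List[List[str]]


def split_image(image: str) -> Tuple[str, Optional[str]]:
    """Split 'org/name:tag' into (name, tag); tag is None when absent."""
    last = image.rsplit("/", 1)[-1]   # drop any registry/organisation prefix
    last = last.split("@", 1)[0]      # drop a digest suffix, if present
    name, sep, tag = last.partition(":")
    return name, (tag if sep else None)


def plan_deployment(image_or_service: str,
                    charts: Dict[str, str]) -> DeploymentPlan:
    """Find the chart for a service and list the commands to render and apply it."""
    service, tag = split_image(image_or_service)
    if service not in charts:
        raise KeyError(f"no chart found for service '{service}'")
    chart_dir = charts[service]
    commands = [
        # Render the Kubernetes manifests from the chart.
        ["helm", "template", service, chart_dir],
        # Apply the rendered manifests, read from standard input.
        ["kubectl", "apply", "-f", "-"],
    ]
    return DeploymentPlan(service, tag, chart_dir, commands)
```

In use, the output of the first command would be piped into the second, which is why the second command reads from standard input.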

High-level overview of DIGIT deployment

DIGIT is an open-source, customizable platform that lends itself to extensibility. New modules can be built on top of the platform to suit new use-cases or existing modules can be modified or replaced. To enable this, in addition to deploying DIGIT, a CI/CD pipeline should be set up. CI/CD pipelines enable the end user to automate and simplify the build/deploy process.

DIGIT comes with configurable "CI as code", "Deploy as code", etc., which can be utilized to set up the pipelines and deploy new modules. More on that in the steps below.

Note: Changing the DIGIT code has implications for upgrades. That is, you may not be able to upgrade to the latest version of DIGIT depending on the changes that have been made. New modules are generally not a problem for upgrades.

Find out more on kubernetes manifests: https://www.youtube.com/watch?v=ohSUtEfDefc

Learn how to manage env values, secrets of any service deployed in kubernetes https://www.youtube.com/watch?v=OW244LxB4oI

Explore how to port forward to a pod running inside k8s cluster and work locally https://www.youtube.com/watch?v=TT3nd5n5Yus

Learn how to use SOPS to secure your keys/creds: https://www.youtube.com/watch?v=DWzJ87KbwxA

This guide provides step-by-step instructions for installing DIGIT. This is the preferred method for setting up a production environment. You should be well versed in DevOps concepts.

Fork the repository into your account on GitHub (uncheck "Copy the master branch only" while forking). See the image below for where to find the Fork option in GitHub.

Clone the forked DIGIT-DevOps repository (using the git clone command) and open the repo in your code editor. Optionally, you can open it in the browser-based editor by replacing github.com with github.dev in the repo URL.

Add the public key to your GitHub account.

For guidance on setting up your AWS CLI, please follow the instructions provided in . Additionally, ensure your AWS CLI is correctly configured by referring to the official AWS documentation on Configuring the AWS CLI - AWS Command Line Interface. Confirm your AWS credentials are correctly set by executing:

Login to the employee dashboard with the username and password provided in file using the domain name provided in .

Login to https://<domain_name>/employee

This section contains the list of documents that explains the key concepts required for DIGIT deployment.

If you're deploying DIGIT for the first time, we recommend using the Helmfile documentation for guidance.

If you're already running DIGIT and want to deploy using Go exclusively, this document is your go-to reference.

Deployment using GitHub Actions

This document offers guidance on effortlessly setting up the infrastructure and deploying the DIGIT service with just a click.

AWS_ACCESS_KEY_ID: <GENERATED_ACCESS_KEY>

AWS_SECRET_ACCESS_KEY: <GENERATED_SECRET_KEY>

AWS_DEFAULT_REGION: <AWS_REGION>

AWS_REGION: <AWS_REGION>

cluster_name: Name of the EKS cluster. The cluster name can contain only lowercase alphanumeric characters and hyphens.

ssh_key_name: The name of the SSH key. It can contain any alphanumeric character.

public_ssh_key: The public SSH key generated in the section above (Generate SSH Key Pair).

db_name: Name of the database. It should contain only alphanumeric characters.

db_username: Username of the root user. It must contain only alphanumeric characters.

domain_name: The domain URL for the UI.

terraform_state_bucket_name: Name of the S3 bucket that terraform creates. This bucket stores the terraform state.
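The character rules listed for these variables can be checked before pushing input.yaml. Below is a minimal Python sketch, assuming the values have been loaded into a dictionary. The function name `validate_inputs` and the regular expressions are written for illustration from the descriptions above; the comments in input.yaml remain the authoritative source for the exact patterns.

```python
import re
from typing import Dict, List

# Patterns derived from the variable descriptions (an assumption, see lead-in).
RULES = {
    "cluster_name": r"[a-z0-9-]+",     # lowercase alphanumerics and hyphens
    "ssh_key_name": r"[A-Za-z0-9]+",   # alphanumeric characters
    "db_name": r"[A-Za-z0-9]+",        # alphanumeric characters
    "db_username": r"[A-Za-z0-9]+",    # alphanumeric characters
}
NON_EMPTY = ["public_ssh_key", "domain_name", "terraform_state_bucket_name"]


def validate_inputs(values: Dict[str, str]) -> List[str]:
    """Return a list of validation errors; an empty list means all checks pass."""
    errors = []
    for key, pattern in RULES.items():
        value = str(values.get(key, ""))
        if not re.fullmatch(pattern, value):
            errors.append(f"{key} has an invalid value: '{value}'")
    for key in NON_EMPTY:
        if not str(values.get(key, "")).strip():
            errors.append(f"{key} must not be empty")
    return errors
```

Calling `validate_inputs` with the values you plan to commit returns the failed checks, so a typo such as an uppercase letter in cluster_name is caught before the workflow starts.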