The Digital Urban Governance Platform

Building an urban governance platform to catalyze digital transformation across towns and cities - changing the way citizens interact with government bodies.

DIGIT Urban Stack is a set of open APIs, services, and reference implementations, set up as a public good, to allow government entities, businesses, startups, and civil society to use a unique digital infrastructure and build solutions for urban India at a large scale. It provides a set of open standards, specifications, and documentation to create a level playing field and enable ecosystem players to innovate on the stack. As a public good, the platform is provided without profit or restriction to all members of society.

The urban mission offers digital governance solutions that encapsulate the core platform principles. The apps provide

Secure & reliable governance tools

Simple reusable modules for effective integration

Scalable & standardized solutions

Open APIs to promote interoperability

DIGIT-Urban focuses on inclusion and is designed on the principle of enhancing both platform openness and choice for citizens. The platform uses open APIs and standards, creating a powerful framework to drive convergence across the multiple systems currently in use and to lower the barrier to entry for locally-developed solutions.

Keeping in mind that most Indians use the internet through their phones, we follow and advocate a “mobile-first” approach, while supporting multi-channel access to accommodate diverse needs and preferences.

Open Source - DIGIT is Open Source and has been built using the best in class Open Source technology stacks powering the most advanced companies in the world. DIGIT is able to provide the lowest total cost of ownership and helps ensure that governments retain strategic control of their systems and data.

Mobile-Enabled - DIGIT has adopted a mobile-first approach, with robust mobile applications enabling citizens to easily access government services through their phones. Government officials and field workers are also empowered with mobile applications that enable them to deliver 24×7 governance with ease.

Real-time Dashboards - DIGIT’s real-time dashboards provide governments with actionable insights on demand. Administrators and department heads are empowered with verified data that enables them to manage their work and budgets better. Field-level employee reports enable effective performance management and ensure efficient usage of government resources.

Multiple channel support

Configurable building blocks that support customization at each stage

New release features, enhancements, and fixes

The DIGIT 2.7 release includes new modules, a few functional changes, and non-functional changes.

Functional changes

National Dashboard for PT, TL, OBPS, PGR, W&S, mCollect, and Fire NOC;

State level DSS of OBPS, W&S, Fire NOC and mCollect;

PT UI/UX Audit Feedback, TL UI/UX Audit Feedback, PGR UI/UX Enhancements Audit Feedback;

PT common Search, PT lightweight create and integrate with TL;

Notification for different channels - W&S, PT, and TL;

Common UI/UX - Citizen Profile, Employee Profile;

Multi Tenancy Selection, Birth and Death module, State DSS and National Dashboard for Birth and Death

Non-functional changes

National Dashboard Ingest API and NUGP STQC Security fixes

National dashboard ingest API

NUGP STQC security fixes

Feature

Description

Common UI/UX - Citizen Profile, Employee Profile, and Multi Tenancy Selection

Edit User Profile - Citizen

Edit User Profile - Employee

Update Password - Employee

National Dashboard for PT, TL, OBPS, PGR, W&S, mCollect, and Fire NOC

Dashboard home screen shows module details

Dashboard overview screen shows revenue details and other common metric comparison for all modules

Individual dashboards offer comparative insights for multiple metrics for different states

Filter by date range, state, and city

Table drill-downs for State → City → Ward level information

State level DSS of OBPS, W&S, Fire NOC and mCollect

Updated dashboard home screen shows module details

Updated dashboard overview screen shows all module details

Individual dashboards created for W&S, OBPS, mCollect, and Fire NOC

Birth and Death module, State DSS and National Dashboard for Birth and Death

Register birth & death records

View existing records

Search and download birth/ death certificates and receipts

PT common Search, PT lightweight create and integrate with TL

Search and link the existing property from other module applications

Create a limited information property to link and integrate with other applications

TL module integrated with property module

Updated Feature

Description

PGR UI/UX Enhancements

PGR workflow timeline enhancements

Upload PDF as attachments and other attachments related issues resolved

Notification for different channels - W&S, PT, and TL

For any specific user action, SMS and email notifications are triggered as acknowledgment

SMS, event, and email notifications are triggered to different channels

The application allows different messages to be sent across channels based on the user's actions

PT UI/UX Audit Feedback

Search property by door number and owner name

Search application (employee)

My payments (citizen)

View payment history (from property details)

TL UI/UX Audit Feedback

Owner information card

Tag property while applying for trade license

Steppers in the citizen apply flow

UI/UX revamp for updating the mobile number

Update mobile numbers in property in both citizen and employee portal

Non Functional

All content on this page by eGov Foundation is licensed under a Creative Commons Attribution 4.0 International License.

Learn how to set up DIGIT master data.

This section contains documents and information required to configure the DIGIT platform

Learn how to configure the DIGIT Urban platform. Partner with us to enhance and integrate more into the platform.

Git Repos

Setting up Master Data

Configuring Services

Configuring Workflows

Setting Up eDCR Service

Configuration FAQs

Setting up a Language

Download the file below to view the DIGIT Rollout Program Governance structure and process details.

DIGIT Infra and architecture details

DIGIT is India’s largest open-source platform for digital governance. It is built on OpenAPI (OAS 2.0) and provides API-based access to a variety of urban/municipal services. This enables state governments and city administrators to offer citizens relevant new services and to integrate existing systems into the platform, which runs on any commercial or on-premise cloud infrastructure with scale and speed.

DIGIT is a microservices-based platform that is built to scale. Microservices are small, autonomous and developer-friendly services that work together.

A big software or system can be broken down into multiple small components or services. These components can be designed, developed & deployed independently without compromising the integrity of the application.

Parallelism in development: Microservices architectures are mainly business-centric.

Microservices have smart endpoints that process information and apply logic. They receive requests, process them, and generate a response accordingly.

A microservices architecture allows neighbouring services to keep functioning when one service goes out of service. The architecture also scales to cater to sudden spikes in client demand.

Microservices are ideal for evolutionary systems where it is difficult to anticipate the types of devices that may access the application.

DIGIT follows a multilayer, or n-tiered, distributed architecture pattern. As seen in the illustration above, there are different horizontal layers, each with a set of components, e.g. Data Access Layer, Infra Services, Business Services, different module layers, client apps, and some vertical adapters. Every layer consists of a set of microservices. Each layer of the layered architecture pattern has a specific role and responsibility within the application.

Layered architecture increases flexibility, maintainability, and scalability

Multiple applications can reuse the components

Parallelism

Different components of the application can be independently deployed, maintained, and updated, on different time schedules

Human Resource Management System (HRMS) is a key module, a combination of systems and processes that connect human resource management and information technology through HR software. The HRMS module can be used for candidate recruiting, payroll management, leave approval, succession planning, attendance tracking, career progression, performance reviews, and the overall maintenance of employee information within an organization.

HRMS module enables users to -

Create User Roles

Create System Users

Employee Information Report

Prometheus is an open-source system monitoring and alerting toolkit originally built at SoundCloud.

The chart includes multiple components and is suitable for a variety of use cases.

The default installation is intended for monitoring the Kubernetes cluster the chart is deployed onto. It closely matches the kube-prometheus project.

Service monitors to scrape internal Kubernetes components:

Layered architecture also makes it possible to configure different levels of security to different components

Layered architecture also helps users test the components independent of each other

kube-apiserver

kube-scheduler

kube-controller-manager

etcd

kube-dns/coredns

kube-proxy

With the installation, the chart also includes dashboards and alerts.

Deployment steps

Add environment variable to the respective env config file

Update the configs branch (for example, for qa.yaml, add the qa branch)

Add monitoring-dashboards folder to respective configs branch.

Enable the nginx-ingress monitoring and redeploy the nginx-ingress.

Add alertmanager secret in respective.secrets.yaml

If required, change the Slack channel and other details such as group_wait, group_interval, and repeat_interval to suit your values.

Deploy the prometheus-operator using go cmd or deploy using Jenkins.

To create a new panel in the existing dashboard

Log in to the dashboard and click on add panel

Set all required queries and apply the changes. Export the JSON file by clicking on the save dashboard option

Update the existing *-dashboard.json file from configs monitoring-dashboards folder with a newly exported JSON file.

State Data Centres with On-Premise Kubernetes Clusters

Running Kubernetes on-premise gives a cloud-native experience: the State Data Centre (SDC) becomes cloud-agnostic when it comes to deploying DIGIT.

Whether states have their own on-premise data centre or have decided to forgo the various managed cloud solutions, there are a few things one should know when getting started with on-premise K8s.

One should be familiar with Kubernetes and know that the control plane consists of the kube-apiserver, kube-scheduler, kube-controller-manager, and an etcd datastore. For managed cloud solutions like Google Kubernetes Engine (GKE) or Azure Kubernetes Service (AKS), it also includes the cloud-controller-manager. This is the component that connects the cluster to the external cloud services to provide networking, storage, authentication, and other feature support.

To successfully deploy a bespoke Kubernetes cluster and achieve a cloud-like experience on SDC, one needs to replicate all the features available with a managed solution. At a high level, this means that we probably want to:

Automate the deployment process

Choose a networking solution

Choose a storage solution

Handle security and authentication

Let us look at each of these challenges individually, and we’ll try to provide enough of an overview to aid you in getting started.

Using a tool like Ansible can make deploying Kubernetes clusters on-premise trivial.

When deciding to manage your own Kubernetes clusters, you need to set up a few proof-of-concept (PoC) clusters to learn how everything works, run performance and conformance tests, and try out different configuration options.

After this phase, automating the deployment process is an important, if not necessary, step to ensure consistency across any clusters you build. For this, you have a few options, but the most popular are:

kubeadm: a low-level tool that helps you bootstrap a minimum viable Kubernetes cluster that conforms to best practices

kubespray: an Ansible playbook that helps deploy production-ready clusters

If you are already using Ansible, kubespray is a great option. Otherwise, we recommend writing automation around kubeadm with your preferred playbook tool after using it a few times. This will also increase your confidence and knowledge in the tooling surrounding Kubernetes.

When designing clusters, choosing the right container networking interface (CNI) plugin can be the hardest part. This is because choosing a CNI that will work well with an existing network topology can be tough. Do you need BGP peering capabilities? Do you want an overlay network using vxlan? How close to bare-metal performance are you trying to get?

There are many articles comparing the various CNI provider solutions (Calico, Weave, Flannel, kube-router, etc.) that are must-reads. We usually recommend Project Calico for its maturity, continued support, and large feature set, or Flannel for its simplicity.

For ingress traffic, you’ll need to pick a load-balancer solution. For a simple configuration, you can use MetalLB, but if you’re lucky enough to have F5 hardware load-balancers available, we recommend checking out F5's controller for Kubernetes. The controller supports connecting your network plugin to the F5 through either VXLAN or BGP peering. This gives the controller full visibility into pod health and provides the best performance.

Kubernetes provides a number of storage volume options. If you’re going on-premise, you’ll probably want to use a network-attached storage (NAS) option to avoid forcing pods to be pinned to specific nodes.

For a cloud-like experience, you’ll need to add a plugin to dynamically create persistent volume objects that match the user’s persistent volume claims. You can use dynamic provisioning to reclaim these volume objects after a resource has been deleted.

Pure Storage has a great example Helm chart that provides smart provisioning, although it only works for Pure Storage products.

As anyone familiar with security knows, this is a rabbit-hole. You can always make your infrastructure more secure and should be investing in continual improvements.

Including different Kubernetes plugins can help build a secure, cloud-like experience for your users

When designing on-premise clusters you’ll have to decide where to draw the line. To really harden your cluster’s security you can add plugins like:

Istio: provides the underlying secure communication channel, and manages authentication, authorization, and encryption of service communication at scale

gVisor: a user-space kernel, written in Go, that implements a substantial portion of the Linux system surface

Vault: secures, stores, and tightly controls access to tokens, passwords, certificates, and encryption keys for protecting secrets and other sensitive data

For user authentication, we recommend checking out a tool that integrates with an existing authentication provider. If you’re already using GitHub teams, this could be a no-brainer.

We hope this has given you a good idea of deployment, networking, storage, and security so that you can take the leap into deploying your own on-premise Kubernetes clusters. As mentioned above, the team will want to build proof-of-concept clusters, run conformance and performance tests, and really become experts on Kubernetes if you’re going to use it to run DIGIT in production.

We’ll leave you with a few other things the team should be thinking of:

Externally backing up Kubernetes YAML, namespaces, and configuration files

Running applications across clusters in an active-active configuration to allow for zero-downtime updates

Running game days like deleting the CNI to measure and improve time-to-recovery

A good, meaningful logging system is one that everyone can use and understand. This page describes how DIGIT logging is configured.

Logging is one of the most complicated concerns in our microservices. Microservices should stay as pure as possible, so we should avoid library dependencies (such as logging, monitoring, or resilience libraries) wherever we can. Any dependency can change at any time, and the change usually has to be repeated in the other microservices, which is a lot of work. Instead, we need to handle these dependencies in a more generic way. For logging, that way is stdout logging. In most programming languages, logging to stdout is the default, and probably no additional change is required at the beginning.

In a microservices architecture (MSA), services interact with each other through HTTP endpoints. End users only know the API contract (request/response) and do not know exactly how the services work.

“A service” will call “B service” and “C service”. Once the request chain is complete, “X service” might be able to respond to the end user who initiated the request. Let’s say you already have a logging system that captures error logs for each service. If you find an error in “X service”, it would be better to know exactly whether the error was caused by “A service” or “C service”. If the error is informative enough, that may suffice. If it is not, the correct way to reproduce the error is to know all the requests and services involved. Once you implement a correlation ID, you only need to look for that ID in the logging system, and you will get all the logs from the services that were part of the main request to the system.
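The correlation ID idea above can be sketched in Python with the standard `logging` and `contextvars` modules. This is a minimal illustration, not DIGIT's implementation: the header name `X-Correlation-Id` and the helper names `build_logger` and `handle_request` are assumptions made for the example.

```python
import contextvars
import logging
import sys
import uuid

# Holds the correlation ID for the request currently being handled.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Copy the current correlation ID onto every log record."""

    def filter(self, record):
        record.correlation_id = correlation_id.get()
        return True


def build_logger(service_name, stream=sys.stdout):
    """Return a logger that prefixes each line with the correlation ID."""
    logger = logging.getLogger(service_name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(
        logging.Formatter("%(correlation_id)s %(name)s %(levelname)s %(message)s")
    )
    handler.addFilter(CorrelationIdFilter())
    logger.handlers = [handler]
    return logger


def handle_request(headers, logger):
    """Reuse the caller's ID if present, otherwise start a new chain."""
    # "X-Correlation-Id" is an assumed header name, not a DIGIT convention.
    cid = headers.get("X-Correlation-Id") or str(uuid.uuid4())
    correlation_id.set(cid)
    logger.info("request received")
    # Headers to forward to downstream services so they log the same ID.
    return {"X-Correlation-Id": cid}
```

Each service that receives the forwarded header logs the same ID, so a single search for that ID in the central logging system returns the whole request chain.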

An application usually gains more features as time goes by, and with them many new services are created (my project started with 12 services, and now we have 20). These services could be hosted on different servers. Imagine what happens if you store logs on different servers: you have to access each individual server to read the logs and then try to correlate problems. Instead, by centralizing logging data in one place, you have everything you need in one dashboard. It saves a lot of time.

Applying MSA allows you to use a different technology stack for each service. For example, you can use .NET Core for the Buy service, Java for the Shipping service, and Python for the Inventory service. However, this also affects the log format of each service. It is even more complicated because some logs need more fields than others.

Based on my experience, I’d like to suggest JSON as a standard format for logging data. JSON allows you to have multiple levels for your data so that, when necessary, you can get more semantic info in a single log event.

When we look at a log, we want to know everything: What? When? Where? Even who? This is not about knowing exactly which person caused the problem in order to blame them :) Contacting the right person helps you resolve issues more quickly. You can log all the data that you get. However, here are some specific fields that might help you figure out what really needs to be logged.

When? Time (with full date format): it does not have to be UTC, but the timezone has to be the same for everyone who needs to look at the logs.

What? Stack errors: all exception objects should be passed to the logging system.

Where? Besides the service name (as we are using MSA), we also need the function name and the class or file name where the error occurred. Don’t guess anything; it might waste your time.
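As a rough sketch of the JSON format and the When/What/Where fields listed above, the following Python formatter writes one JSON object per log line. The field names (`time`, `service`, `file`, `function`, `stack`) and the helper `build_json_logger` are illustrative assumptions, not a DIGIT standard.

```python
import json
import logging
import sys
import traceback
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def __init__(self, service_name):
        super().__init__()
        self.service_name = service_name

    def format(self, record):
        event = {
            # When: a full timestamp in a single, shared timezone (UTC here).
            "time": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            # Where: service, file, and function that emitted the record.
            "service": self.service_name,
            "file": record.filename,
            "function": record.funcName,
            "message": record.getMessage(),
        }
        # What: include the full stack trace when an exception was logged.
        if record.exc_info:
            event["stack"] = "".join(traceback.format_exception(*record.exc_info))
        return json.dumps(event)


def build_json_logger(service_name, stream=sys.stdout):
    """Return a logger that writes JSON events to the given stream."""
    logger = logging.getLogger(service_name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(service_name))
    logger.handlers = [handler]
    return logger
```

Calling `logger.exception("...")` inside an `except` block attaches the exception, so the `stack` field is filled in without any extra work by the caller.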

Bear in mind that the logging system is not only for developers. It is also used by others (system admins, testers, and so on), so you should log data that everyone can use and understand.

Sometimes you log requests from end users that contain PII. We need to be careful, as this might violate data-protection regulations.
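One way to reduce the risk of logging PII is to mask sensitive fields before a request payload reaches the logger. The sketch below is only an illustration: the field names in `PII_FIELDS` are hypothetical and would need to match the payloads your services actually log.

```python
# Hypothetical field names; adjust to the payloads your services log.
PII_FIELDS = frozenset({"mobileNumber", "emailId", "name"})


def mask_pii(payload, pii_fields=PII_FIELDS, mask="***"):
    """Return a copy of payload with the values of PII fields masked.

    Dictionaries and lists are walked recursively; the input is not modified.
    """
    if isinstance(payload, dict):
        return {
            key: mask if key in pii_fields else mask_pii(value, pii_fields, mask)
            for key, value in payload.items()
        }
    if isinstance(payload, list):
        return [mask_pii(item, pii_fields, mask) for item in payload]
    return payload
```

A service would then log `mask_pii(request_body)` rather than the raw body.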

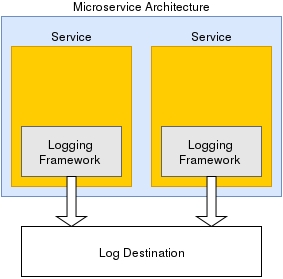

There are two techniques for logging in MSA. Each service will implement the logging mechanism by itself and using one logging service for all services. Both of them have Good and Not Good points. — I’m using both these approaches in my project.

Implement Logging in each service

With this approach, we can easily define the logging strategy/library for each service. For example, with service written by java we can use Log4j.

The problem with this approach is that it requires each service to implement its own logging methods. Not only is this redundant, but it also adds complexity and increases the difficulty of changing logging behaviour across multiple services.

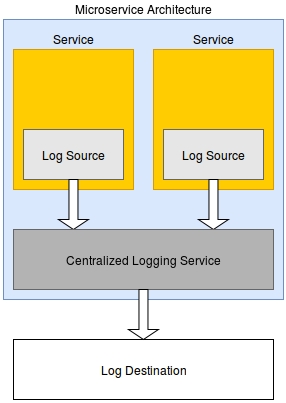

2. Implement central Logging service

If you don’t want to implement logging in each service separately. You can consider implementing a central service for logging. This service will help you with processing, formatting and storing log data.

This approach might help to reduce the complexity of your application. However, you might get lost your log data if that service is down.

At times, different modules need to capture the address of the user’s place of residence or of the place where the person carries on a trade. Entering the full address manually is tedious for the user. To simplify the process, we can use the Google Maps geolocation service, which helps us get the exact coordinates of the place on the map and identify the place.

This service is paid and the client has to purchase the below items:

Note:

Download the data template attached to this page.

Get a good understanding of all the headers in the template sheet, their data type, size, and definitions by referring to the ‘Data Definition’ section of this document.

In case of any doubt, please reach out to the person who has shared this template with you to discuss and clear your doubts.

Ask the clients to purchase the above-mentioned APIs in the Introduction section.

The checklist is a set of activities to be performed once the data is filled into a template to ensure data type, size, and format of data is as per the expectation. These activities have been divided into 2 groups as given below.

This checklist covers all the activities which are common across the entities.

Not Applicable

go run main.go deploy -e -c 'prometheus-operator,grafana,prometheues-kafka-exporter'

Alphanumeric

64

Yes

The key that Google provides after the API has been purchased

Get the details for the API URL and key from the client.

Verify the data once again by going through the checklist and making sure that each and every point mentioned in the checklist is covered.

1458-ASD785-987722

Google API URL

Alphanumeric

64

Yes

The URL of the API that is being purchased

2.

1

Make sure that each and every point in this reference list has been taken care of

API Key

Following is the table through which the information can be shared.

192.78.98.12

Since all state governments/clients prefer to host the websites on their servers, this activity is ideally done by them.

Domain Name

Alphanumeric

253

Yes

The name/address of the website being used to access the website/ module

Following are the steps which are to be followed:

Download the data template attached to this page.

Get a good understanding of all the headers in the template sheet, their data type, size, and definitions by referring to the ‘Data Definition’ section of this document.

In case of any doubt, please reach out to the person who has shared this template with you to discuss and clear your doubts.

If the state agrees to host the website on their server, provide them with the 2 columns mentioned in the attached template.

If the state does not agree to host on their server, a domain name has to be purchased from an external vendor and the EXTERNAL-IP address has to be mapped to it.

Verify the data once again by going through the checklist and making sure that each and every point mentioned in the checklist is covered.

This checklist covers all the activities which are common across the entities.

1

Make sure that each and every point in this reference list has been taken care of.

This checklist covers the activities which are specific to the entity:

The EXTERNAL-IP address must be provided without any mistakes

-

2.

Only one domain name and its corresponding IP address have to be provided

-
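The checklist above can be partly automated. The following Python sketch checks a filled-in template for the single-row rule, the 253-character limit on the domain name, and a well-formed IP address. The dictionary keys `domain_name` and `external_ip` are hypothetical names standing in for the two template columns.

```python
import ipaddress


def validate_domain_mapping(rows):
    """Check the filled-in domain template and return a list of problems.

    Each row is a dict with "domain_name" and "external_ip" keys
    (hypothetical names for the two template columns).
    """
    problems = []
    # Only one domain name and its corresponding IP address are expected.
    if len(rows) != 1:
        problems.append(f"expected exactly 1 row, found {len(rows)}")
    for row in rows:
        domain = row.get("domain_name", "")
        ip = row.get("external_ip", "")
        # Domain Name: mandatory, at most 253 characters.
        if not domain or len(domain) > 253:
            problems.append(f"invalid domain name: {domain!r}")
        # EXTERNAL-IP: mandatory and must parse as an IP address.
        try:
            ipaddress.ip_address(ip)
        except ValueError:
            problems.append(f"invalid EXTERNAL-IP: {ip!r}")
    return problems
```

An empty list means the row passed these checks. It does not confirm that the IP address is the right one for the environment, which still needs a manual check.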

A user role defines permissions for users to perform a group of tasks. In a default application installation, there are some predefined roles with a predefined set of permissions. Each role has a set of tasks it is allowed to perform. These roles include Super Admin, Trade License Approver, Data Entry Admin, and Trade License Document Verifier.

1

TL_APPROVER

TL Approver

Trade License Approver

2

GRO

Grievance Routing Officer

Grievance Routing Officer

3

1

Code

Alphanumeric

64

Yes

A unique code that identifies the user role name.

2

Download the data template attached to this page.

Open it, go through all the headers, and understand their meaning by referring to the 'Data Definition' section.

Make sure all the headers, their data types, field sizes, and definitions/descriptions are properly understood. In case of any doubt, please reach out to the person who has shared this document with you to discuss and clear your doubts.

Identify all the different types of user roles on the basis of the ULB’s functions.

Fill in the data starting from the serial number, completing one record at a time. Repeat this exercise until all the data is filled into the template.

Verify the data once again by going through the checklist and taking care of each and every point mentioned in the checklist.

The checklist is a set of activities to be performed once the data is filled into a template to ensure data type, size, and format of data is as per the expectation. These activities have been divided into 2 groups as given below.

This checklist covers all the activities which are common across the entities.

1

Make sure that each and every point in this reference list has been taken care of

This checklist covers the activities which are specific to the entity.

1

The Code should be alphanumeric and unique

TL_APPROVER, GRO

2

The Name should not contain any special characters

TL Approver : [Allowed]

#TL Approver! : [Not allowed]
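The entity-specific checks above, together with the field sizes from the data definition (Code 64, Name 256, Description 256), can be sketched as a small Python validator. Two assumptions are made here and flagged in the code: underscores count as valid in a code (because the sample `TL_APPROVER` uses one), and names may contain only letters, digits, and spaces.

```python
import re

# Assumption: underscores are allowed in codes, as in TL_APPROVER.
CODE_PATTERN = re.compile(r"[A-Za-z0-9_]+")
# Assumption: names may contain letters, digits, and spaces only.
NAME_PATTERN = re.compile(r"[A-Za-z0-9 ]+")


def validate_roles(roles):
    """Return a list of checklist violations for the user role records."""
    problems = []
    seen_codes = set()
    for role in roles:
        code = role.get("code", "")
        name = role.get("name", "")
        description = role.get("description", "")
        if not CODE_PATTERN.fullmatch(code) or len(code) > 64:
            problems.append(f"{code!r}: code must be alphanumeric, max 64 characters")
        if code in seen_codes:
            problems.append(f"{code!r}: code must be unique")
        seen_codes.add(code)
        if not NAME_PATTERN.fullmatch(name) or len(name) > 256:
            problems.append(f"{name!r}: name must not contain special characters")
        if len(description) > 256:
            problems.append(f"{code!r}: description exceeds 256 characters")
    return problems
```

Running the validator on the template rows before sharing them back catches the two "Not allowed" cases shown in the checklist.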

Knowledge of JSON and how to write a JSON file is required.

Knowledge of MDMS is required.

User with permission to edit the git repository where MDMS data is configured.

City name selection is required on the login page. A tenant added in MDMS is shown in the city drop-down of the login page.

In reports and on the employee inbox page, the ULB details displayed are fetched from the ULB data added in MDMS.

Modules such as TL, PT, and MCS can be enabled for the tenant based on the requirement.

After adding the new tenant, the MDMS service needs to be restarted to read the newly added data.

A tenant is added in tenant.json. In MDMS, the tenant.json file under the tenant folder holds the details of the state and the ULBs to be added in that state.

Localization should be pushed for ULB grade and ULB name. The format is given below.

Localization for ULB Grade

Localization for ULB Name

Format of the localization code for a tenant name: <MDMS_State_Tenant_Folder_Name>_<Tenants_File_Name>_<Tenant_Code> (replace dot with underscore)
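The format above can be expressed as a short Python helper. This is a sketch under stated assumptions: the upper-casing and the `ULBGRADE_` prefix are inferred from the sample localization codes on this page (`TENANT_TENANTS_UK_HALDWANI` and `ULBGRADE_MUNICIPAL_CORPORATION`), and the function names are hypothetical.

```python
def tenant_localization_code(folder_name, file_name, tenant_code):
    """Build the localization code for a tenant name.

    Joins the MDMS tenant folder name, the tenants file name, and the
    tenant code with underscores, then replaces dots with underscores.
    Upper-casing is inferred from the sample code, not from a stated rule.
    """
    raw = f"{folder_name}_{file_name}_{tenant_code}"
    return raw.replace(".", "_").upper()


def ulb_grade_localization_code(ulb_grade):
    """Build the localization code for a ULB grade.

    The ULBGRADE_ prefix and space-to-underscore rule are inferred from
    the sample code ULBGRADE_MUNICIPAL_CORPORATION.
    """
    return "ULBGRADE_" + ulb_grade.strip().replace(" ", "_").upper()
```

For example, the folder `tenant`, the file `tenants`, and the tenant code `uk.haldwani` produce the sample code `TENANT_TENANTS_UK_HALDWANI`.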

Boundary data should be added for the new tenant.

tenant json file

content

EXTERNAL-IP

Alphanumeric

32

Yes

It is the IP address that has to be mapped to the domain name

{
  "tenantId": "uk", //<ReplaceWithDesiredTenantId>
  "moduleName": "tenant",
  "tenants": [
    {
      "code": "uk.citya", //<state.ulbname>
      "name": "City A", //<name of the ulb>
      "description": "City A", //<ulb description>
      "logoId": "https://s3.ap-south-1.amazonaws.com/uk-egov-assets/uk.citya/logo.png", //<ulb logo path - To display ulb logo on login>
      "imageId": null,
      "domainUrl": "", //<ulb website url>
      "type": "CITY",
      "twitterUrl": null,
      "facebookUrl": null,
      "emailId": "[email protected]", //<ulb email id>
      "OfficeTimings": {
        "Mon - Sat": "10.00 AM - 5.00 PM"
      },
      "city": {
        "name": "City A",
        "localName": null,
        "districtCode": "CITYA",
        "districtName": null,
        "regionName": null,
        "ulbGrade": "Municipal Corporation",
        "longitude": 78.0322,
        "latitude": 30.3165,
        "shapeFileLocation": null,
        "captcha": null,
        "code": "248430"
      },
      "address": "City A Municipal Corporation Address",
      "contactNumber": "91 (135) 2653572"
    }
  ]
}

{
  "code": "ULBGRADE_MUNICIPAL_CORPORATION",
  "message": "MUNICIPAL CORPORATION",
  "module": "rainmaker-common",
  "locale": "en_IN"
}

{
  "code": "TENANT_TENANTS_UK_HALDWANI",
  "message": "Haldwani",
  "module": "rainmaker-tl",
  "locale": "en_IN"
}

CSR

Customer Support Representative

An employee who files and follows up complaints on behalf of the citizen

Name

Text

256

Yes

The name of the user role. While creating an employee, a role can be assigned to an individual employee

3

Description

Text

256

No

A short description of the user role

Key configurations at the state level include -

This page provides information on how to deploy DIGIT services on Kubernetes and prepare deployment manifests for various services along with their configurations, secrets, etc. It also discusses the maintenance of environment-specific changes.

This section addresses the key areas of concern and their potential remedial steps.

Summary of the DIGIT open-source Git repos and their purpose. If you are a partner/contributor, you may choose to fork or clone depending on need and capacity.

DIGIT environment setup is conducted at two levels.

FSM 1.1 is a release that has a few functional changes.

Functional: Pre-pay and post-pay service, multi-trip, capturing gender Information, desludging request flow enhancements, FSTPO vehicle log flow enhancements, DSO flow enhancements, and FSM generic enhancements.

A workflow process is a series of sequential tasks that are carried out based on user-defined rules or conditions, to execute a business process. It is a collection of data, rules, and tasks that need to be completed to achieve a certain business outcome.

In DIGIT, workflow for a business process is divided into three units out of which two are completely configurable while the remaining is fixed and lays the foundation of the other two.

The first unit defines the actions, and the nature of each action, that workflow actors execute during the workflow process. It lays the foundation and is configurable as per ground needs.

The second unit defines the number of steps a workflow process may have and then triggers the creation of a role for each step, with appropriate rights to perform a set of actions at that step. It is completely configurable.

The third unit defines the workflow process itself, including the steps, the roles with their actions, and the present, next and previous state of each step/level of the workflow process. It is completely configurable.
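The three units described above can be pictured as a small state machine. The following is only an illustrative sketch, not DIGIT's workflow service: the state names, action names, role names, and the `perform` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transition:
    action: str        # unit 1: the action an actor performs
    roles: frozenset   # unit 2: roles allowed to perform it at this step
    next_state: str    # unit 3: the state the application moves to


# Hypothetical workflow definition: current state -> allowed transitions.
WORKFLOW = {
    "INITIATED": [Transition("APPLY", frozenset({"CITIZEN"}), "APPLIED")],
    "APPLIED": [
        Transition("APPROVE", frozenset({"APPROVER"}), "APPROVED"),
        Transition("REJECT", frozenset({"APPROVER"}), "REJECTED"),
    ],
}


def perform(state, action, role):
    """Return the next state, or raise if the role may not take the action."""
    for transition in WORKFLOW.get(state, []):
        if transition.action == action and role in transition.roles:
            return transition.next_state
    raise ValueError(f"{role} cannot {action} in state {state}")
```

Keeping the definition as plain data, separate from the `perform` function, mirrors the idea that steps, roles, and actions are configured rather than coded.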

The Decision Support System in DIGIT platform can be configured to provide customized insights and statistics on the dashboard. This section offers information on how to configure the DSS parameters for maximized efficiency.

DSO flow enhancements

DSO can edit pit and property usage details

Show validation message for DSO phone number on login

FSM generic enhancements

Add owner attribute for vehicle

Add ULB contact details in the FSM application flow

Citizen gives feedback on the number of trips - Configuration

Number of trips in FSM price calculation

N/A

Pre pay and Post pay service

Add citizen's choice for payment

Workflow changes (Desludging Application and Vehicle Trip)

Employee Flow Enhancements

Post Pay service: DSO creates trip

Multi-trip

Multi-trip facility

Add payment selection for DSO

FSTPO flow for multi-trip

Number of trips in FSM price calculation

Capturing gender information

Capture citizen gender on application

Capture DSO and FSTPO gender

Show citizen gender on FSM DSS

Desludging request flow enhancements

Select vehicle capacity instead of vehicle make.

Citizen Notifications | Payment Options | Timeline Enhancements

FSTPO vehicle log flow enhancements

FSTPO Vehicle Log Inbox Enhancements

FSTPO can decline the vehicle trip

FSTPO flow for Multi trip

National Informatica Cloud

Details coming soon...

An Urban Local Body (ULB) is defined as a tenant. The information which describes the various attributes of a ULB is known as tenant information. This detail is required to add the ULB into the system.

Download the data template attached to this page.

Open the template, go through all the headers, and understand their meaning as given in this document under the section 'Data Definition'.

Make sure each header, its data type, field size, and definition/description are properly understood.

In case of any doubt, reach out to the person who shared this document with you to clear it up.

The checklist is a set of activities to be performed once the data is filled into a template to ensure data type, size, and format of data is as per the expectation. These activities have been divided into 2 groups as given below.

This checklist covers all the activities which are common across the entities.

For the common checklist, refer to the page that lists all the activities to be followed to ensure completeness and quality of data.

This checklist covers the activities which are specific to the entity. There are no checklist activities specific to this entity.

A Point of Sale (POS) machine helps in handling transaction processing. It accepts and verifies the payments made by citizens for availing the services of DIGIT.

POS relies on a middleware app developed to verify the payment process between the DIGIT module and the payment.

In this case, no data is required from the state team.

Not applicable.

Not applicable.

Not applicable.

Not applicable.

Master data templates allow users to configure the key parameters and details required for the effective functioning of the modules. This section offers comprehensive information on how to configure the master data templates for each module.

The individual master data templates for specific modules are available in the Product & Modules section of our docs. Click on the links given below to view the specific module setup details.

Property Tax Master Data Templates

Trade License Master Data Templates

Water Charges Master Data Templates

Sewerage Charges Master Data Templates

mCollect Master Data Templates

This section contains a list of documents elaborating on the key concepts aiding the deployment of the DIGIT platform.

The Birth and Death module provides a digital interface, allowing citizens to search for and download the Birth and Death certificate. Also, the correctness of the certificate can be verified by scanning the QR code. The module enables the following:

Reduced physical touchpoints as citizens are not required to visit offices

Sonepur

Banka

BN47

Bihar

BBD47

24.8874° N

86.9198° E

Alphanumeric

64

Yes

It is a unique identifier which is assigned to each ULB. LGD (Local Government Directory) has already assigned a code to urban local bodies and the same is used here

3

ULB Grade

Alphanumeric

64

Yes

Grade of ULB. e.g. Corporation, Municipality, Nagar Panchayat etc

4

City Name

Text

256

Yes

Name of city/ town which is covered by the ULB. E.g. Kannur/ Saptarishi

5

City Local Name

Text

256

No

Name of the city in the local language. e.g Telugu, Hindi etc

6

District Name

Text

256

Yes

Name of the District where the city is situated

7

District Code

Alphanumeric

64

Yes

It is a unique identifier which is assigned to each district. LGD (Local Government Directory) has already assigned codes to districts and the same is used here

8

Region Name

Text

256

No

Name of the region the listed district belongs to

9

Region Code

Alphanumeric

64

No

Unique code of the region to uniquely identify it

10

Contact Number

Alphanumeric

10

Yes

Contact person phone no. of ULB

11

Address

Text

256

Yes

Postal address of the ULB for the correspondence

12

ULB Website

Alphanumeric

256

Yes

URL address of the website for the ULB

13

Email Address

Alphanumeric

64

No

Email address of the ULB where emails from citizens can be received

14

Latitude

Alphanumeric

64

No

Latitude part of coordinates of the centroid of the city

15

Longitude

Alphanumeric

64

No

Longitude part of coordinates of the centroid of the city

16

GIS Location Link

Text

NA

No

GIS Location link of the ULB

17

Call Center No

Alphanumeric

10

No

Call centre contact number of ULB

18

Facebook Link

Text

NA

No

Facebook page link of the ULB

19

Twitter Link

Text

NA

No

Twitter page link of the ULB

20

Logo file Path

Document

NA

Yes

URL of logo file path to download the logo of ULB

Start filling in the data from the serial no. and complete one record at a time. Repeat this exercise until the entire data is filled into the template.

Verify the data once again by going through the checklist by taking care of each and every point mentioned in the checklist.

1

Sonepur Nagar Panchayat

47

Corp

98362532657

Main Hall, Sonepur

1

ULB Name

Text

256

Yes

Name of ULB. E.g. Kannur Municipal Corporation/ Saptarishi Municipal Council

2

Sonepur

ULB Code

Reduced time with the instantaneous download of certificates

Verification of the correctness of the certificate by scanning the QR Code

The Birth & Death module also enables ULB employees to create new registrations and search for any previous applications through the employee side interface of the module.

In addition to the module, the DSS (Decision Support System) at the state level and national level instances enables administrators to view the records and have an aggregate view of the certificate downloads and other metrics for research and analysis purposes. The demographic data of the birth and death records also helps administrators to detect anomalies and make data-driven decisions.

In this release of the Birth & Death module, the following modules were developed

Birth & Death Module

Employee side interface

Citizen side interface

User access for

Birth record creator - Can only create new applications

Death record creator - Can only create new applications

Birth record viewer - Can only search and view applications

Death record viewer - Can only search and view applications

Birth & Death State DSS

Birth & Death National DSS

For an employee, the B&D module gives access to create birth records and death applications based on the registration details that they receive from the hospital, morgue, or from concerned authorities. They can also search the existing records of birth and death registrations based on date ranges, view them in tabular forms, and take printouts.

For a citizen, the Birth & Death module offers the convenience of downloading birth and death certificates using a simple search interface. They can also make payments if required for downloading the certificates, view past records and payments, and also download payment receipts.

The Birth & Death module shows the aggregated values for the certificate downloads and payment collections on the overview page of state DSS. Furthermore, in the individual module page, it shows

Distinct birth and death tabs that display metrics and charts for each segment

Number of certificate downloads and collection from payments

Certificate download trend across time

Chart showing certificate downloads by channel

Number of delayed registrations where the date of registration and date of birth/death differs by more than a year

Chart showing births and deaths by gender

Chart showing deaths by age category

The module also has the capability to show the ULB and ward-wise drill down for the delayed registrations and downloads by channel

The Birth & Death module shows the aggregated values of the certificate downloads and payment collections on the overview page of the National DSS. The application numbers and collection amounts are also aggregated and shown in the Total Collection and Total applications metrics on the Landing Page. Furthermore, in the individual module page, it shows

Distinct birth and death tabs that display metrics and charts for each segment

Number of certificate downloads and collection from payments

Certificate download trend across time

Chart showing certificate downloads by channel

Number of delayed registrations where the date of registration and date of birth/death differs by more than a year

Chart showing births and deaths by gender

Chart showing deaths by age category

The module also has the capability to show the ULB and ward-wise drill down for the delayed registrations and downloads by channel

None.

Birth & Death Report

User roles for - Birth Report viewer, Death Report Viewer

Doc Links

Description

This page is intended to help stakeholders as given below on data gathering activities.

State Team

eGov Onsite Team/ Implementation Team

ULB Team (Nodal and DEO)

Implementation Partners

The artefacts of this document are the data template of a configurable entity, a page with content defining the entity template and helping on how to fill the template with required data.

States and ULBs can configure their web portal to deploy the DIGIT portal effectively. State-level and ULB level web portal configuration details are covered in this section.

State Portal

ULB Portal

Looking at these requirements for a DevOps engineer, it is pretty clear that one should have a variety of skills to manage DIGIT DevOps.

Anyone involved in hiring DevOps engineers will realize that it is hard to find prospective candidates who have all the skills listed in this section.

Ultimately, the skill set needed for an incoming DevOps engineer would depend on the current and short-term focus of the operations team. A brand new team that is rolling out a new software service would require someone with good experience in infrastructure provisioning, deployment automation, and monitoring. A team that supports a stable product might require the service of an expert who could migrate home-grown automation projects to tools and processes around standard configuration management and continuous integration tools.

DevOps practice is a glue between engineering disciplines. An experienced DevOps engineer would end up working in a very broad swath of technology landscapes that overlaps with software development, system integration, and operations engineering.

SSL (Secure Sockets Layer) is an encryption-based network security protocol developed for the assurance of privacy, authenticity and data integrity in internet communications.

Ideally, both the domain name configuration and the SSL certificate are obtained from the state’s IT team, one after the other.

No data is needed from the state team for this.

eGovernments Foundation transforms urban governance with the use of scalable and replicable technology solutions that enable efficient and effective municipal operations, better decision making, and contact-less urban service delivery.

Our comprehensive software products enable Governments to put their resources to efficient use by minimising overheads. We also help bring in transparency, accountability and citizen centricity in the delivery of Government services.

eGovernments Foundation has been at the forefront of implementing eGovernance solutions since 2003. Our products have been serving over 325 ULBs across the country. Our time-tested products have impacted the ULBs in a large way. We have also been involved in several eGovernance initiatives in the country.

Our primary business motivator is to increase the footprint of eGovernance across the country and help adoption in as many ULBs as possible. Going opensource with our products is a measure in this direction. It also gives us the ability to tap into the immense talent pool in India for strengthening and improving our cities. Open source also blends well with our ethical fabric of being open and transparent in our business.

Birth record editor - Can edit existing applications

Death record editor - Can edit existing applications

DSS viewer - View DSS

An experienced DevOps engineer would be able to describe most of the technologies that are described in the following sections. This is a comprehensive list of DevOps skills for comparing one’s expertise and a reference template for acquiring new skills.

In theory, a template like this should be used only for assessing the current experience of a prospective hire. The needed skills can be picked up on the jobs that demand deep knowledge in certain areas. Therefore, the focus should be to hire smart engineers who have a track record of picking up new skills, rolling out innovative projects at work, and contributing to reputed open-source projects.

A DevOps engineer should have a good understanding of both classic (data centre-based) and cloud infrastructure components, even if the team has a dedicated infrastructure team.

This involves how real hardware (servers and storage devices) is racked, networked, and accessed from both the corporate network and the internet. It also involves the provisioning of shared storage to be used across multiple servers and the methods available for that, as well as infrastructure and methods for load balancing.

Hypervisors.

Virtual machines.

Object storage.

Running virtual machines on PC and Mac (Vagrant, VMWare, etc.).

Cloud infrastructure has to do with core cloud computing and storage components as they are implemented in one of the popular virtualization technologies (VMWare or OpenStack). It also involves the idea of elastic infrastructure and options available to implement it.

Network layers

Routers, domain controllers, etc.

Networks and subnets

IP address

VPN

DNS

Firewall

IP tables

Network access between applications (ACL)

Networking in the cloud (e.g., Amazon AWS)

Load balancing infrastructure and methods

Geographical load balancing

Understanding of CDN

Load balancing in the cloud

A DevOps engineer should have experience using specialized tools for implementing various DevOps processes. While Jenkins, Docker, Kubernetes, Terraform, Ansible, and the like are known to most DevOps engineers, other tools might be obscure or their importance not very obvious (such as the importance of knowing one major monitoring tool in and out). Some tools, like source code control systems, are shared with development teams.

The list here has only examples of basic tools. An experienced DevOps engineer would have used some application or tool from all or most of these categories.

Expert-level knowledge of an SCM system such as Git or Subversion.

Knowledge of code branching best practices, such as Git-Flow.

Knowledge of the importance of checking in Ops code to the SCM system.

Experience using GitHub.

Experience using a major bug management system such as Bugzilla or Jira.

Ability to have a workflow related to the bug filing and resolution process.

Experience integrating SCM systems with the bug resolution process and using triggers or REST APIs.

Knowledge of Wiki basics.

Experience using MediaWiki, Confluence, etc.

Knowledge of why DevOps projects have to be documented.

Knowledge of how documents were organized on a Wiki-based system.

Experience building on Jenkins standalone, or dockerized.

Experience using Jenkins as a Continuous Integration (CI) platform.

CI/CD pipeline scripting using groovy

Experience with CI platform features such as:

Integration with SCM systems.

Secret management and SSH-based access management.

Scheduling and chaining of build jobs.

Source-code change based triggers.

Should know what artefacts are and why they have to be managed.

Experience using a standard artefacts management system such as Artifactory.

Experience caching third-party tools and dependencies in-house.

Should be able to explain configuration management.

Experience using any Configuration Management Database (CMDB) system.

Experience using open-source tools such as Cobbler for inventory management.

Ability to do both agent-less and agent-driven enforcement of configuration.

Experience using Ansible, Puppet, Chef, Cobbler, etc.

Knowledge of the workflow of released code getting into production.

Ability to push code to production with the use of SSH-based tools such as Ansible.

Ability to perform on-demand or Continuous Delivery (CD) of code from Jenkins.

Ability to perform agent-driven code pull to update the production environment.

Knowledge of deployment strategies, with or without an impact on the software service.

Knowledge of code deployment in the cloud (using auto-scaling groups, machine images, etc.).

Knowledge of all monitoring categories: system, platform, application, business, last-mile, log management, and meta-monitoring.

Status-based monitoring with Nagios.

Data-driven monitoring with Zabbix.

Experience with last-mile monitoring, as done by Pingdom or Catchpoint.

Experience doing log management with ELK.

Experience with SaaS monitoring solutions (e.g., Datadog and Loggly).

To get an automation project up and running, a DevOps engineer builds new things such as configuration objects in an application and code snippets or full-blown programs. However, a major part of the work is glueing many things together at the system level on the given infrastructure. Such efforts are not different from traditional system integration work and, in my opinion, the ingenuity of an engineer at this level determines his or her real value on the team. It is easy to find cookbooks, recipes, and best practices for vendor-supported tools, but it would take experience working on diverse projects to gain the necessary skill set to implement robust integrations that have to work reliably in production.

Important system-level tools and techniques are listed here. The engineer should have knowledge about the following.

Users and groups on Linux.

Use of service accounts for automation.

Sudo commands, /etc/sudoers files, and passwordless access.

Using LDAP and AD for access management.

Remote access using SSH.

SSH keys and related topics.

SCP, SFTP, and related tools.

SSH key formats.

Managing access using configuration management tools.

Use of GPG for password encryption.

Tools for password management such as KeePass.

MD5 for hashing; KMS for encryption/decryption.

Remote access with authentication from automation scripts.

Managing API keys.

Jenkins plugins for password management.

Basics of compilers and runtimes such as javac and Node.js.

Make and Makefile, npm, Maven, Gradle, etc.

Code libraries in Node, Java, Python, React etc.

Build artefacts such as JAR, WAR and node modules.

Running builds from Jenkins.

Packaging files: ZIP, TAR, GZIP, etc.

Packaging for deployment: RPM, Debian, DNF, Zypper, etc.

Packaging for the cloud: AWS AMI, VMWare template, etc.

Use of Packer.

Docker and containers for microservices.

Use of artefacts repository: Distribution and release of builds; meeting build and deployment dependencies

Serving artefacts from a shared storage volume

Mounting locations from cloud storage services such as AWS S3

Artifactory as artefacts server

SCP, Rsync, FTP, and SSL counterparts

Via shared storage

File transfer with cloud storage services such as AWS S3

Code pushing using system-level file transfer tools.

Scripting using SSH libraries such as Paramiko.

Orchestrating code pushes using configuration management tools.

Use of crontab.

Running jobs in the background; use of Nohup.

Use of screen to launch long-running jobs.

Jenkins as a process manager.

Typical uses of find, df, du, etc.

A comparison of popular distributions.

Checking OS release and system info.

Package management differences.

OS Internals and Commands

Typical uses of sed, awk, grep, tr, etc.

Scripting using Perl, Python.

Regular expressions.

Support for regular expressions in Perl and Python.
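As a small illustration of regular expression support in Python, the sketch below pulls fields out of a log line with named groups. The log line layout (`LEVEL service: message`) and the `parse_line` helper are assumptions made for the example, not a format used by any particular tool.

```python
import re

# Assumed layout: "<LEVEL> <service-name>: <free-text message>"
LOG_LINE = re.compile(
    r"^(?P<level>[A-Z]+)\s+(?P<service>[\w-]+):\s+(?P<message>.*)$"
)


def parse_line(line):
    """Return the named fields as a dict, or None if the line does not match."""
    match = LOG_LINE.match(line)
    return match.groupdict() if match else None
```

Named groups keep the calling code readable, because fields are addressed by name rather than by position.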

Sample usages and steps to install these tools:

NC

Netstat

Traceroute

VMStat

LSOF

Top

NSLookup

Ping

TCPDump

Dig

Sar

Uptime

IFConfig

Route

One of the attributes that helps differentiate a DevOps engineer from other members in the operations team, like sysadmins, DBAs, and operations support staff, is his or her ability to write code. The coding and scripting skill is just one of the tools in the DevOps toolbox, but it's a powerful one that a DevOps engineer would maintain as part of practising his or her trade.

Coding is the last resort when things cannot be integrated by configuring and tweaking the applications and tools that are used in an automation project.

Many times, a few lines of Bash script could be the best glue code integrating two components in the whole software system. A DevOps engineer should have basic shell scripting skills, and Bash is the most popular shell right now.

If a script has to deal with external systems and components, or it is more than a few command lines and involves fairly complex logic, it might be better to write that script in an advanced scripting language like Python, Perl, or Ruby.

Knowledge of Python would make your life easier when dealing with DevOps applications such as Ansible, which is written in Python and uses Python-style expressions in its conditionals for defining configurations.

One of the categories of projects a DevOps engineer would end up doing is building dashboards. Though dashboarding features are found with most of the DevOps tools, those are specific to the application, and there will be a time when you may require to have a general-purpose dashboard with more dynamic content than just static links and text.

Another requirement is to build web UI for provisioning tools to present those as self-service tools to user groups.

In both these cases, deep web programming skills are not required. Knowledge of a web programming friendly language such as PHP and a JavaScript/CSS/HTML library like Composer would be enough to get things started. It is also important for the DevOps engineer to know the full-stack, in this case, LAMP, for building and running the web apps.

Almost every application and tool that is used for building, deploying, and maintaining software systems use configuration files. While manual reading of these files might not require any expertise, a DevOps engineer should know how config files in such formats are created and parsed programmatically.

A DevOps engineer should have a good understanding of these formats:

INI.

XML.

JSON.

YAML.

The engineer should also know how these formats are parsed in his/her favourite scripting language.
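A minimal sketch of parsing three of these formats with Python's standard library follows. The sample documents, the `database`/`port` keys, and the function names are made up for illustration. YAML is left out because the standard library has no YAML parser; a third-party package such as PyYAML is typically used for that.

```python
import configparser
import json
import xml.etree.ElementTree as ET

# Illustrative inputs carrying the same setting in three formats.
INI_TEXT = "[database]\nhost = localhost\nport = 5432\n"
JSON_TEXT = '{"database": {"host": "localhost", "port": 5432}}'
XML_TEXT = '<config><database host="localhost" port="5432"/></config>'


def port_from_ini(text):
    parser = configparser.ConfigParser()
    parser.read_string(text)
    # INI values are strings; getint converts explicitly.
    return parser.getint("database", "port")


def port_from_json(text):
    # JSON numbers are already typed after parsing.
    return json.loads(text)["database"]["port"]


def port_from_xml(text):
    root = ET.fromstring(text)
    # XML attributes are strings, so convert by hand.
    return int(root.find("database").get("port"))
```

The point to notice is typing: JSON carries a number, while INI and XML hand back strings that the script has to convert.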

The wide acceptance of REST APIs as a standard way to expose features that other applications can use for system integration has made them a feature requirement for any application that wants to be taken seriously. Knowledge of using REST APIs has become an important skill for a DevOps engineer.

HTTP/HTTPS: REST APIs are based on the HTTP/HTTPS protocol, and a solid understanding of how it works is required, including knowledge of HTTP headers, status codes, and the main verbs GET, POST, and PUT.

REST API basics: Normal layout of APIs defined for an application.

Curl and Wget: Command-line tools to access REST API and HTTP URLs. Some knowledge of the support available for HTTP protocol in scripting languages will be useful and that would be an indication of working with REST APIs.

Authentication methods: Cookie-based and OAuth authentication; API keys; use of If-Match and If-None-Match set of HTTP headers for updates.

API management tools: If the application you support provides an API for the users, most probably, its usage will be managed by some API Gateway tool. Though not an essential skill, experience in this area would be good if one works on the API provider side.
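To make the verbs and headers above concrete, here is a hedged sketch that builds (but does not send) a conditional update request using Python's standard `urllib`. The URL, payload, ETag value, and the choice of a bearer token for the API key are all assumptions for illustration; a real API documents its own authentication scheme.

```python
import json
import urllib.request


def build_update_request(url, payload, etag, api_key):
    """Build a PUT request that only applies if the resource still has `etag`."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={
            "Content-Type": "application/json",
            # If-Match makes the update conditional on the current version.
            "If-Match": etag,
            # Assumed scheme: API key sent as a bearer token.
            "Authorization": f"Bearer {api_key}",
        },
    )


request = build_update_request(
    "https://api.example.com/items/42",  # hypothetical endpoint
    {"name": "updated"},
    '"abc123"',
    "secret-key",
)
```

Passing `request` to `urllib.request.urlopen` would send it. A server that honours `If-Match` responds with status 412 (Precondition Failed) when the resource has changed since the ETag was read.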

There was a time when mere knowledge of programming with RDBMS was enough for an application developer and system integrator to manage application data. Now, with the wide adoption of Big Data platforms like Hadoop and NoSQL systems to process and store data, a DevOps engineer faces requirements that vary from one project to another. Core skills are the following:

RDBMS: Knowledge of one or more of MySQL, PostgreSQL, etc. is important.

Setting up and configuring PostgreSQL: As an open-source database used with many other tools in the DevOps toolchain, consider this as a basic requirement for a DevOps engineer. If one hasn’t done this, he or she might not have done enough yet.

Running queries from a Bash script: How to run a database query via a database client from a Bash script and use the output. MySQL is a good example.

Database access from Perl/PHP/Python: All the major scripting languages provide modules to access databases and that can be used to write robust automation scripts. Examples are Perl DBI and Python’s MySQLdb module.

DB Backups: Migration, Logging, monitoring and cleanup.
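The sketch below shows the general shape of database access from a Python script. It deliberately swaps in the standard-library `sqlite3` module with an in-memory database so that it runs anywhere; the text above names MySQLdb, whose connection setup differs. The `applications` table and the `count_by_status` helper are hypothetical.

```python
import sqlite3


def count_by_status(conn):
    """Return {status: row count} for the hypothetical applications table."""
    cursor = conn.execute(
        "SELECT status, COUNT(*) FROM applications GROUP BY status ORDER BY status"
    )
    return dict(cursor.fetchall())


# In-memory database with a few sample rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE applications (id INTEGER PRIMARY KEY, status TEXT)")
conn.executemany(
    # Parameterised inserts avoid building SQL through string formatting.
    "INSERT INTO applications (status) VALUES (?)",
    [("APPROVED",), ("PENDING",), ("APPROVED",)],
)
conn.commit()
```

The same pattern of connecting, executing a parameterised query, and fetching rows carries over to other Python database drivers, although placeholder syntax varies between them.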

Those who have built cloud infrastructure with a focus on automation and versioning should know some of these (or similar) tools:

cloud-init: Cloud-init can be used to configure a virtual machine when it is spun up. This is very useful when a node is spun up from a machine image with baseline or even application software already baked in.

AWS/Azure/GCloud CLI: If the application runs on Commercial cloud, knowledge of CLI is needed, which would be handy to put together simple automation scripts.

Terraform: HashiCorp’s Terraform is an important tool if the focus is on provisioning infrastructure as code (IaC). Using this, infrastructure can be configured independently of the target cloud or virtualization platform.

Ansible: It can be used to build machine images for a variety of virtualization technologies and cloud platforms, which is useful if the infrastructure is provisioned in a mixed or hybrid cloud environment.

In a rush to get things rolled out, one of the things left half-done is adding enough error handling in scripts. Automation scripts that are not robust can cause major production issues, which could impact the credibility of the DevOps efforts themselves. A DevOps engineer should be aware of the following best practices in error handling and logging:

The importance of error handling in automated scripts.

Error handling in Bash.

Error handling in Python.

Logging errors in application and system logs.
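One way these practices can look in a Python automation script is sketched below. The logger name, log format, and the `run_step` helper are illustrative choices, not a prescribed convention.

```python
import logging
import subprocess
import sys

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(message)s",
)
log = logging.getLogger("automation")  # hypothetical logger name


def run_step(command):
    """Run one command; log the outcome and return True only on success."""
    try:
        # check=True turns a non-zero exit status into an exception.
        subprocess.run(command, check=True, capture_output=True, text=True)
    except FileNotFoundError:
        log.error("command not found: %s", command[0])
        return False
    except subprocess.CalledProcessError as exc:
        log.error("command failed (exit %d): %s", exc.returncode, exc.stderr.strip())
        return False
    log.info("command succeeded: %s", " ".join(command))
    return True


# Example: run the current Python interpreter with a no-op program.
succeeded = run_step([sys.executable, "-c", "pass"])
```

Catching specific exceptions, rather than a bare `except`, keeps unexpected failures visible instead of silently swallowing them.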

Gateway (Zuul, nginx-ingress-controller)

Understanding of VM Instances, LoadBalancers, SecurityGroups/Firewalls, nginx, DB Instance, Data Volumes.

Experience of Kubernetes, Docker, Jenkins, helm, golang, Infra-as-code.

Deploy configuration and deployment backbone services:

Clone the git repo https://github.com/egovernments/eGov-infraOps . Copy existing dev.yaml and dev-secrets.yaml with new environment name (eg..yaml and-secrets.yaml)

Modify the global domain and set namespaces create to true

Make the changes mentioned below for each backbone service:

E.g. for Kafka-v2, if you are using AWS as the cloud provider, change the respective volume IDs and zones.

(You will get the volume IDs and zone details from either the remote state bucket or the AWS portal.)

E.g. for Kafka-v2, if you are using Azure as the cloud provider, change the diskName and diskUri.

(You will get the volume IDs and zone details from either the remote state bucket or the Azure portal.)

E.g. for Kafka-v2, if you are using iSCSI, change the targetPortal and iqn.

Deploy the backbone services using go command

Modify the “dev” environment name with your respective environment name.

Flags:

e --- Environment name

p --- Print the manifest

c --- Enable Cluster Configs

Check the Status of pods

Before you proceed with the configuration, make sure the following pre-requisites are met -

Prior Knowledge of Java/J2EE.

Prior Knowledge of Spring Boot.

Prior Knowledge of REST APIs and related concepts like path parameters, headers, JSON, etc.

Prior knowledge of Git.

Advanced knowledge of how to operate JSON data would be an added advantage to understand the service.

State Level Masters are maintained in a common folder.

ULB Level Masters are maintained in separate folders named after the ULB.

Module-specific State Level Masters are maintained in a folder named after the module, placed outside the common folder.

To deploy changes in MDMS (adding new data, updating existing data, or deleting data), the MDMS service needs to be restarted.

Master data that is common across all ULBs and modules, such as department and designation, is placed in the common-masters folder, which sits under the tenant folder of the MDMS repository.

ex: egov-mdms-data/data/pb/common-masters/ Here “pb” is the tenant folder name.

Master data that is common across all ULBs but specific to a module is placed in a folder named after that module. These folders are placed directly under the tenant folder.

ex: egov-mdms-data/data/pb/TradeLicense/ Here “pb” is the tenant folder name and “TradeLicense“ is the module name.

Module data that is specific to each ULB, such as boundary data, interest, and penalty, is configured at the ULB level. There is one folder per ULB under the tenant folder, and all of that ULB’s module-specific data is placed under it.

ex: egov-mdms-data/data/pb/amritsar/TradeLicense/ Here “amritsar“ is the ULB name and “TradeLicense“ is the module name. All the data specific to this module for the ULB are configured inside this folder.
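The three folder conventions above can be summarised in a small path-building sketch. The function name `mdms_folder` is hypothetical and is not part of the MDMS service; the sketch only assembles paths in the layout shown in the examples (`egov-mdms-data/data/<tenant>/...`).

```python
from pathlib import PurePosixPath
from typing import Optional


def mdms_folder(tenant: str, module: Optional[str] = None,
                ulb: Optional[str] = None) -> str:
    """Return the repository folder that holds a given kind of master data.

    - tenant only           -> state-level common masters
    - tenant + module       -> state-level, module-specific masters
    - tenant + module + ulb -> ULB-level, module-specific data
    """
    base = PurePosixPath("egov-mdms-data/data") / tenant
    if ulb is not None:
        if module is None:
            raise ValueError("ULB-level data is always stored per module")
        folder = base / ulb / module
    elif module is not None:
        folder = base / module
    else:
        folder = base / "common-masters"
    return str(folder) + "/"


if __name__ == "__main__":
    print(mdms_folder("pb"))
    print(mdms_folder("pb", module="TradeLicense"))
    print(mdms_folder("pb", module="TradeLicense", ulb="amritsar"))
```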

State Level Common-Master Data

State Level Module Specific Common-Master Data

ULB Specific Data

API Contract Reference


Feature

Description

Citizen Profile: Citizens can now edit their name, email, password, and other details in the profile details section.

Employee Profile: Employees can now edit their name, email, password, and other details in the profile details section.

Employee City Switch: Employees can switch between the multiple tenants that are mapped to their profile.

Common UI/UX: Common UI/UX

Document Title

Document Link

Citizen Profile

Employee Profile

Employee City Change

Not Applicable

Not Applicable

Not Applicable

Not Applicable

Not Applicable

Not Applicable


Generate bills

Search bills

Update bills

ULB Level

None


Report issues via the eGov Opensource JIRA.

The eGov suite is released under version 3.0 of the GPL.


Kubernetes has changed the way organizations deploy and run their applications, and it has created a significant shift in mindsets. While it has already gained a lot of popularity and more and more organizations are embracing the change, running Kubernetes in production requires care.

Although Kubernetes is open source and does have its share of vulnerabilities, making the right architectural decisions can prevent a disaster from happening.

You need to have a deep level of understanding of how Kubernetes works and how to enforce the best practices so that you can run a secure, highly available, production-ready Kubernetes cluster.

Although Kubernetes is a robust container orchestration platform, its sheer complexity and many moving parts can overwhelm administrators.

That is the reason why Kubernetes has a large attack surface, and, therefore, hardening of the cluster is an absolute must if you are to run Kubernetes in production.

There are a massive number of configurations in K8s, and while you can configure a few things correctly, the chances are that you might misconfigure a few things.

I will describe a few best practices that you can adopt if you are running Kubernetes in production. Let’s find out.

If you are running your Kubernetes cluster in the cloud, consider using a managed Kubernetes cluster offered by your cloud provider.

A managed cluster comes with some level of hardening already in place, and, therefore, there are fewer chances to misconfigure things. A managed cluster also makes upgrades easy, and sometimes automatic. It helps you manage your cluster with ease and provides monitoring and alerting out of the box.

Since Kubernetes is open source, vulnerabilities appear quickly and security patches are released regularly. You need to ensure that your cluster is up to date with the latest security patches and for that, add an upgrade schedule in your standard operating procedure.

Having a CI/CD pipeline that runs periodically for executing rolling updates for your cluster is a plus. You would not need to check for upgrades manually, and rolling updates would cause minimal disruption and downtime; also, there would be fewer chances to make mistakes.

That would make upgrades less of a pain. If you are using a managed Kubernetes cluster, your cloud provider can cover this aspect for you.

It goes without saying that you should patch and harden the operating system of your Kubernetes nodes. This would ensure that an attacker would have the least attack surface possible.

You should upgrade your OS regularly and ensure that it is up to date.

Kubernetes post version 1.6 has role-based access control (RBAC) enabled by default. Ensure that your cluster has this enabled.

You also need to ensure that legacy attribute-based access control (ABAC) is disabled. Enforcing RBAC gives you several advantages as you can now control who can access your cluster and ensure that the right people have the right set of permissions.

RBAC does not stop at securing access to the cluster by kubectl clients. It also governs access by pods running within the cluster, nodes, proxies, the scheduler, and volume plugins.

Only provide the required access to service accounts and ensure that the API server authenticates and authorizes them every time they make a request.

Running your API server on plain HTTP in production is a terrible idea. It exposes your cluster to man-in-the-middle attacks and opens up multiple security holes.

Always use transport layer security (TLS) to ensure that communication between kubectl clients and the API server is secure and encrypted.

Be aware of any non-TLS ports you expose for managing your cluster. Also ensure that internal clients such as pods running within the cluster, nodes, proxies, scheduler, and volume plugins use TLS to interact with the API server.
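As a sketch of what a client-side TLS setup looks like, the function below builds a Python `ssl` context that verifies the server certificate, enforces hostname checking, and refuses protocol versions older than TLS 1.2. The function name and the optional file-path parameters are assumptions for illustration; real clusters supply their own CA bundle and client credentials.

```python
import ssl
from typing import Optional


def api_server_tls_context(ca_file: Optional[str] = None,
                           client_cert: Optional[str] = None,
                           client_key: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS context suitable for talking to an HTTPS API endpoint."""
    # create_default_context enables certificate verification and
    # hostname checking; ca_file (if given) is the trusted CA bundle.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Refuse legacy protocol versions.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if client_cert:
        # Present a client certificate for mutual TLS authentication.
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return ctx


if __name__ == "__main__":
    context = api_server_tls_context()
    print(context.verify_mode, context.check_hostname, context.minimum_version)
```

The resulting context can be passed to standard-library clients such as `urllib.request.urlopen(url, context=context)`.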

While it might be tempting to create all resources within the default namespace, using namespaces gives you several advantages. Namespaces not only segregate your resources into logical groups, they also let you define security boundaries around the resources in each namespace.

A namespace logically behaves like a separate cluster within Kubernetes. You might want to create namespaces based on teams, types of resources, projects, or customers, depending on your use case.

After that, you can do clever stuff like defining resource quotas, limit ranges, user permissions, and RBAC on the namespace layer.
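To make the idea of namespace-level quotas concrete, the sketch below assembles a Namespace and a matching ResourceQuota as plain dictionaries and prints them as JSON, which `kubectl apply -f -` accepts. The function name, the `team-a` namespace, and the quota values are illustrative assumptions; pick limits that suit your own workloads.

```python
import json


def namespace_with_quota(name: str, cpu: str, memory: str, pods: int) -> dict:
    """Return a v1 List holding a Namespace and a ResourceQuota for it."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{name}-quota", "namespace": name},
        "spec": {
            "hard": {
                "requests.cpu": cpu,        # total CPU all pods may request
                "requests.memory": memory,  # total memory all pods may request
                "pods": str(pods),          # maximum number of pods
            }
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [namespace, quota]}


if __name__ == "__main__":
    # Hypothetical values for a team namespace.
    print(json.dumps(namespace_with_quota("team-a", "4", "8Gi", 20), indent=2))
```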

Avoid binding ClusterRoles to users and service accounts. Instead, give them namespace-scoped roles so that users have access only to their own namespace and do not unintentionally misconfigure someone else’s resources.

Cluster Role and Namespace Role Bindings
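A namespace-scoped grant consists of a Role, which lists the permitted actions, and a RoleBinding, which attaches that Role to a subject. The sketch below builds both as dictionaries that can be serialised to JSON for `kubectl apply`. The function name, the user `jane`, the namespace `team-a`, and the chosen resources and verbs are assumptions for illustration.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def namespaced_access(user: str, namespace: str, role_name: str) -> list:
    """Return a Role and a RoleBinding limited to a single namespace."""
    role = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",  # Role (not ClusterRole) is scoped to one namespace
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": ["", "apps"],  # "" is the core API group
                "resources": ["pods", "pods/log", "services", "deployments"],
                "verbs": ["get", "list", "watch", "create", "update", "delete"],
            }
        ],
    }
    binding = {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }
    return [role, binding]


if __name__ == "__main__":
    for manifest in namespaced_access("jane", "team-a", "app-developer"):
        print(json.dumps(manifest, indent=2))
```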

You can use Kubernetes network policies that work as firewalls within your cluster. That would ensure that an attacker who gained access to a pod (especially the ones exposed externally) would not be able to access other pods from it.

You can create Ingress and Egress rules to allow traffic from the desired source to the desired target and deny everything else.

Kubernetes Network Policy
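The "allow the desired traffic, deny everything else" pattern usually takes two policies: a default deny that selects every pod in the namespace, and a narrow allow rule. The sketch below builds both as dictionaries. The function names, labels, namespace, and port are illustrative assumptions, and network policies only take effect when the cluster's network plugin enforces them.

```python
import json


def default_deny(namespace: str) -> dict:
    """Deny all ingress and egress for every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        # An empty podSelector matches all pods; listing both policy types
        # without any rules blocks traffic in both directions.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
    }


def allow_ingress(namespace: str, target: dict, source: dict, port: int) -> dict:
    """Allow TCP traffic on one port from pods labelled source to target."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-selected-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": source}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(default_deny("team-a"), indent=2))
    print(json.dumps(
        allow_ingress("team-a", {"app": "api"}, {"app": "web"}, 8080), indent=2))
```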

By default, when you bootstrap your cluster, you get access to the kubernetes-admin config file, which grants superuser access for performing all activities within your cluster.

Do not share this file within your team. Instead, create a separate user account for every user and grant each one only the access they need. Bear in mind that Kubernetes does not maintain an internal user directory, so you need to have the right solution in place to create and manage your users.

Once you create the user, you can generate a private key and a certificate signing request (CSR) for the user. The cluster’s certificate authority (CA) then signs the request and issues a client certificate for the user.

You can then securely share the signed certificate with the user. The user can then use the certificate within kubectl to authenticate with the API server securely.

Configuring User Accounts
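The signing step can be submitted to the cluster as a CertificateSigningRequest object. The sketch below wraps an already generated PEM-encoded CSR in such a manifest; it assumes the private key and CSR were created beforehand with a tool such as openssl, and the function name, user name, and one-day lifetime are illustrative choices. The request still has to be approved by a cluster administrator before a certificate is issued.

```python
import base64
import json


def client_csr_manifest(username: str, csr_pem: bytes,
                        expiration_seconds: int = 86400) -> dict:
    """Wrap a PEM CSR in a certificates.k8s.io/v1 CertificateSigningRequest."""
    return {
        "apiVersion": "certificates.k8s.io/v1",
        "kind": "CertificateSigningRequest",
        "metadata": {"name": username},
        "spec": {
            # The API expects the PEM document base64-encoded.
            "request": base64.b64encode(csr_pem).decode("ascii"),
            # Signer for certificates used to authenticate to the API server.
            "signerName": "kubernetes.io/kube-apiserver-client",
            # Short lifetimes limit the damage of a leaked certificate.
            "expirationSeconds": expiration_seconds,
            "usages": ["client auth"],
        },
    }


if __name__ == "__main__":
    # Placeholder bytes; a real run would read the CSR file from disk.
    placeholder = b"-----BEGIN CERTIFICATE REQUEST-----\n...\n-----END CERTIFICATE REQUEST-----\n"
    print(json.dumps(client_csr_manifest("jane", placeholder), indent=2))
```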

You can provide granular access to user and service accounts with RBAC. Let us consider a typical organization where you can have multiple roles, such as:

Application developers: They need access only to a namespace, not to the entire cluster. Give them access only to deploy and troubleshoot their applications within their own namespace. You might allow application developers to create only ClusterIP services and grant permission to define ingresses only to network administrators.

Network administrators: You can give network admins access to networking features such as ingresses, and the privileges to create externally exposed services.

Cluster administrators: These are sysadmins whose main job is to administer the entire cluster. They are the only people who should have cluster-admin access, and only to the extent necessary to do their job.

The above is not etched in stone, and you can have a different organization policy and different roles, but the only thing to keep in mind here is that you need to enforce the principle of least privilege.

That means that individuals and teams should have only the right amount of access they need to perform their job, nothing less and nothing more.
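One simple way to spot grants that go beyond least privilege is to look for wildcards in role rules. The sketch below scans a Role or ClusterRole manifest, given as a dictionary, and reports every rule field that contains `*`. The function name and the sample role are hypothetical, and a wildcard check is only a first-pass heuristic, not a full RBAC audit.

```python
def overly_broad_rules(role: dict) -> list:
    """Return (rule_index, field) pairs for every wildcard in a role's rules."""
    findings = []
    for index, rule in enumerate(role.get("rules", [])):
        for field in ("apiGroups", "resources", "verbs"):
            if "*" in rule.get(field, []):
                findings.append((index, field))
    return findings


if __name__ == "__main__":
    # Hypothetical role: the second rule grants every verb on secrets.
    sample_role = {
        "kind": "Role",
        "rules": [
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list"]},
            {"apiGroups": [""], "resources": ["secrets"], "verbs": ["*"]},
        ],
    }
    for rule_index, field in overly_broad_rules(sample_role):
        print(f"rule {rule_index}: wildcard in {field}")
```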

It does not stop with issuing separate user accounts and using TLS to authenticate with the API server. You must also rotate and reissue your users’ credentials frequently.

Set up an automated system that periodically revokes old TLS certificates and issues new ones to your users. This prevents an attacker who gets hold of a TLS certificate or a token from using it indefinitely.

A bootstrap token, for example, needs to be revoked as soon as you finish your activity. You can also use a credential management system that issues credentials when you need them and revokes them when you finish your work.
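The scheduling side of rotation can be reduced to a single age check that an automated job runs for each credential. The sketch below is a minimal illustration; the function name, the 30-day maximum age, and the sample dates are assumptions, and the actual revocation and reissue steps depend on the tooling you use.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def rotation_due(issued_at: datetime, max_age: timedelta,
                 now: Optional[datetime] = None) -> bool:
    """Return True when a credential has reached its maximum allowed age."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - issued_at >= max_age


if __name__ == "__main__":
    # Hypothetical credential issued on 1 January, checked on 15 February.
    issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
    checked = datetime(2024, 2, 15, tzinfo=timezone.utc)
    if rotation_due(issued, timedelta(days=30), now=checked):
        print("credential is older than 30 days: revoke and reissue")
```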

Imagine a scenario where an externally exposed web application is compromised, and someone has gained access to the pod. In that scenario, they would be able to access the secrets (such as private keys) and target the entire system.