Here are the test cases for Program Service, Digit Exchange, Mukta-ifix-adapter.

Solution design architecture

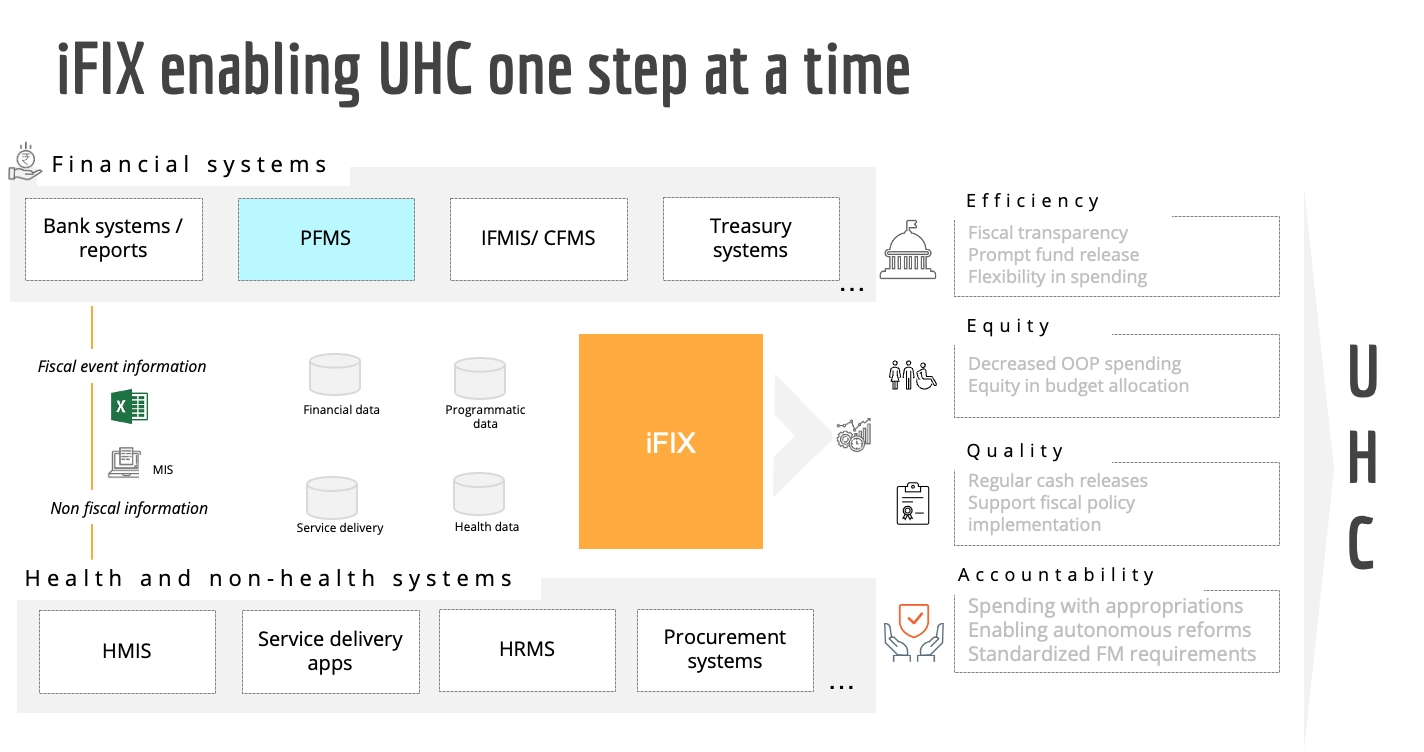

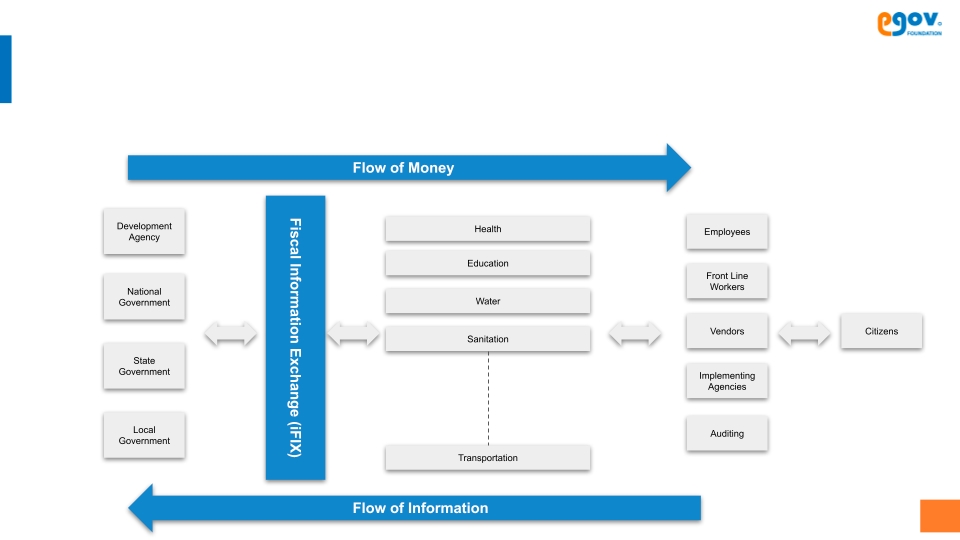

The Fiscal Information Exchange (iFIX) platform is an open-source solution designed to standardize and streamline the exchange of fiscal data among various government agencies, funding bodies, and implementing organizations. Built on the DIGIT platform, iFIX facilitates real-time, seamless communication of financial information, enhancing transparency, efficiency, and accountability in public finance management.

Key Architecture Highlights

Distributed Reference Data Management: Each department or agency can host its own instance of the iFIX platform, maintaining autonomy over its fiscal data while adhering to standardized protocols for data exchange. This distributed approach ensures that departments manage their own data, reducing bottlenecks and enhancing data accuracy.

Microservices-Based Architecture: Leveraging a microservices design, iFIX ensures that each component operates independently, promoting scalability, flexibility, and ease of maintenance. This modular approach allows for the seamless addition or modification of services without disrupting the entire system.

Transforming and leveraging fiscal service events

Public finance management, or PFM, is how governments manage public expenses and revenues. It is a crucial means of ensuring the effective allocation and utilisation of public resources. The objective of a robust PFM system is to enhance fiscal transparency, resulting in improved accountability and effective resource allocation. Such a system strengthens the government's ability to deliver essential public services and maintain economic stability, leading to long-term benefits in public welfare and economic growth outcomes.

iFIX or integrated Fiscal Exchange solution is designed to address the challenges facing public finance management. iFIX is a set of open-source specifications that establishes a standardised language for finance and line departments to interoperate. iFIX is a digital public infrastructure (DPI) that aims to facilitate seamless electronic information exchange for effective digital public financial management. The solution enables connected applications to exchange standardised fiscal events e.g. Demand, Receipts, Bills, and Payments. The fiscal event consists of attributes explaining the details of why, who, what, where and when it happened.

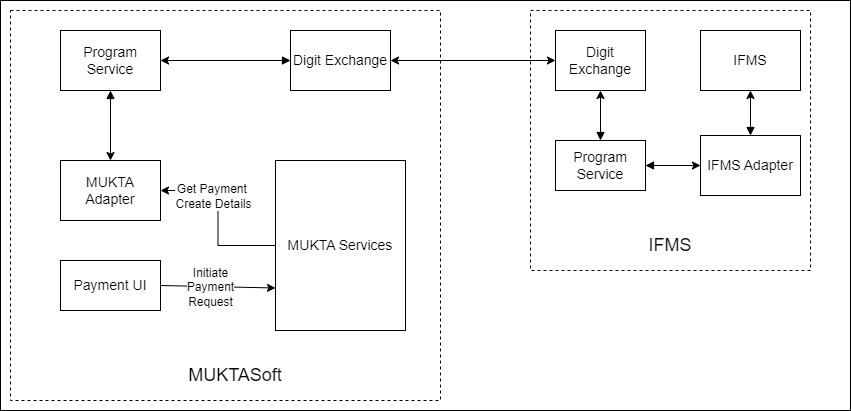

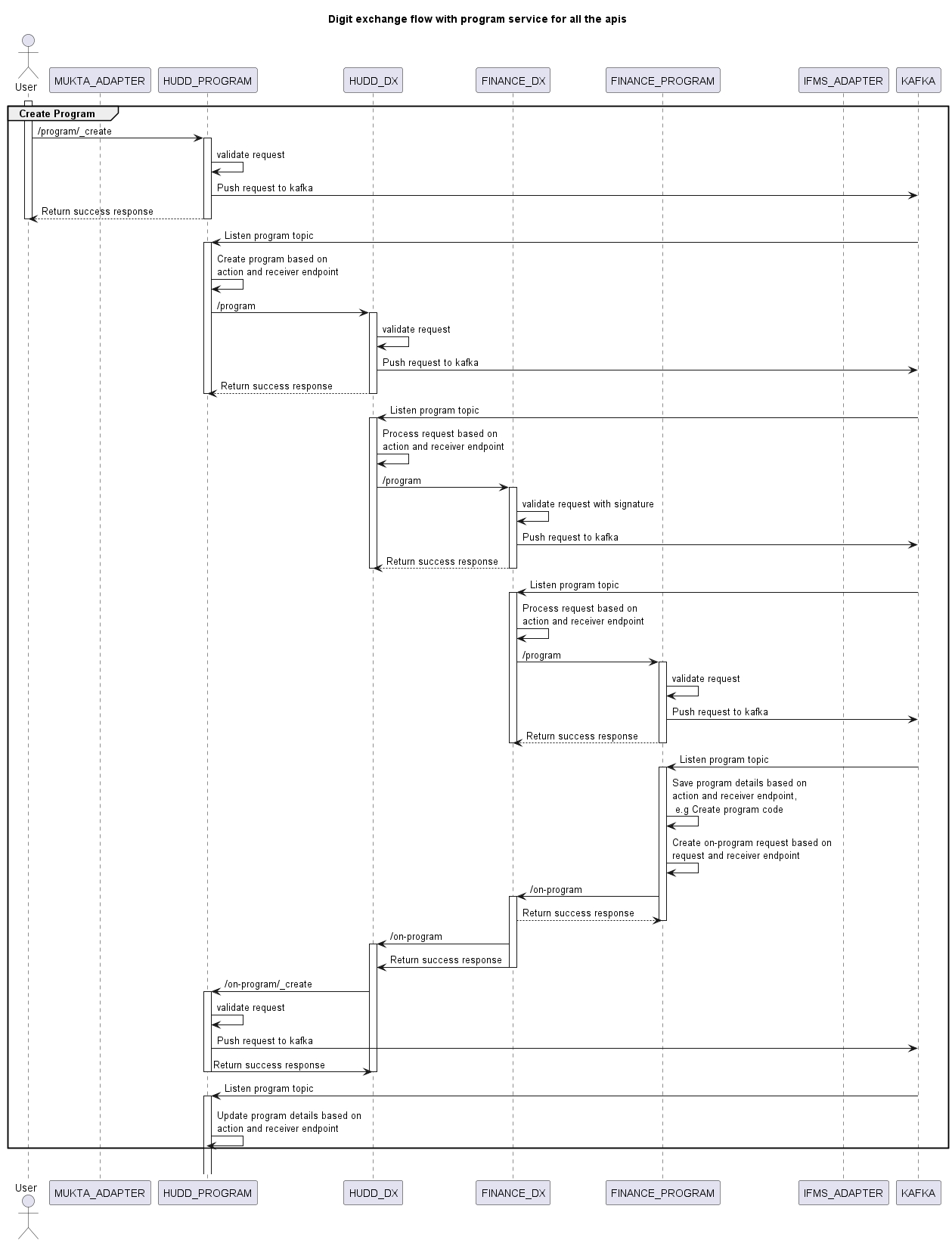

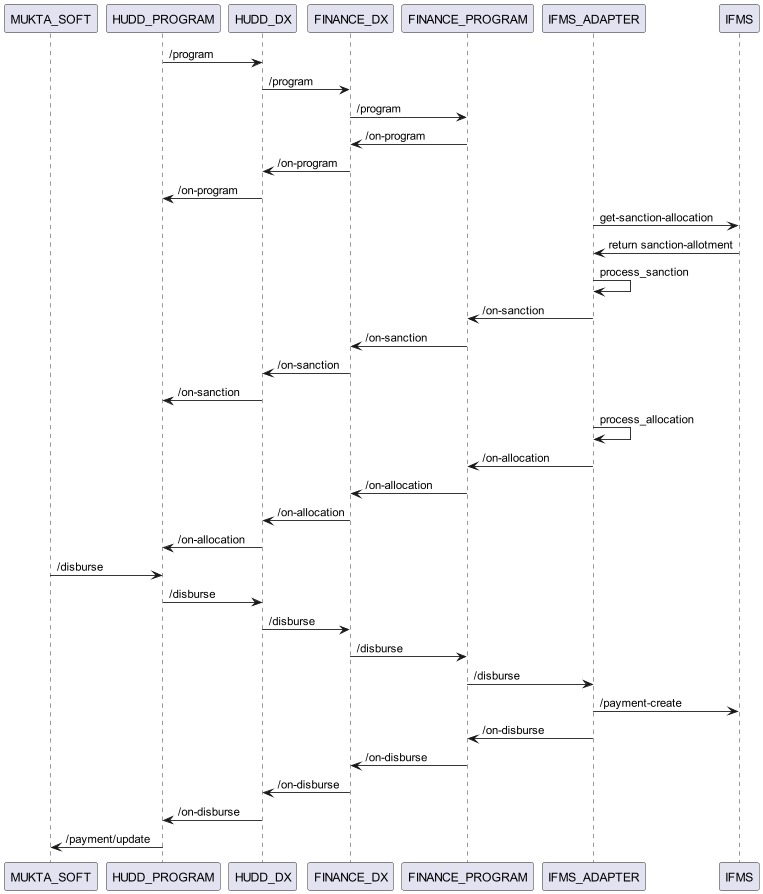

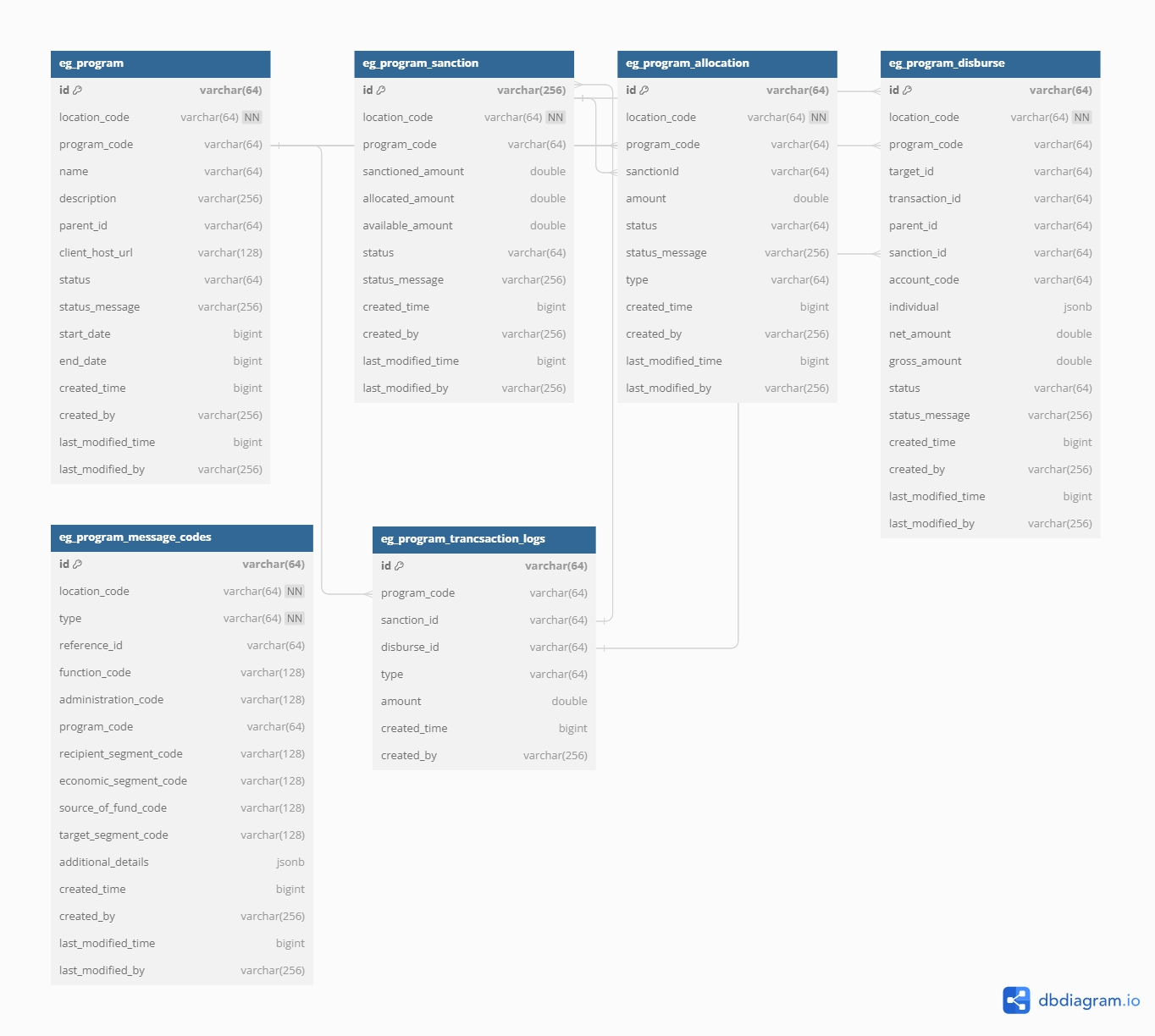

Program service handles all the financial transactions like sanction management, fund allocation, and disbursement of funds.

Users can establish programs within which these transactions take place. The service receives messages from the adapter service, validates them, and forwards them to DIGIT Exchange, which sends them to the IFMS system. If validation fails, it responds with an error status and message. Additionally, the service maintains records of sanctioned, allocated, and available amounts for disbursement.

Delivering frictionless and timely payments under the MUKTA Scheme

MUKTASoft is an exemplar built on the Works platform. MUKTASoft aims to improve the overall efficiency of the MUKTA scheme by identifying and providing equal job opportunities to the urban poor, constructing environment-friendly projects, developing local communities and slums, and supporting better planning in the coming years.

Click the link below to explore the application features, configuration details and user guides.

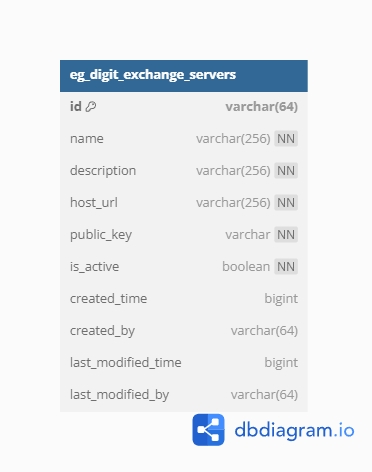

DIGIT Exchange - This service offers the capability to exchange data while ensuring it is signed.

Program Service - This facilitates the creation of programs, sanctions, allocations, and disbursements.

MUKTA iFIX Adapter - This transforms data from Mukta payment to program disbursement.

IFMS Adapter - Integration to program disburse and on-disburse APIs.

NA

DIGIT Exchange

This service offers the capability to exchange data while ensuring it is signed.

Program

This facilitates the creation of programs, sanctions, allocations, and disbursements.

MUKTA iFIX Adapter

This transforms data from Mukta payment to program disbursement.

IFMS Adapter

Integration to program disburse and on-disburse APIs.

If Kafka malfunctions, data will be directed to the error queue, necessitating manual processing until an error queue handler is developed.

Once the disbursement status is SUCCESSFUL, it must not be changed back to INITIATED for the same disbursement ID.

As per IFMS guidelines, if a transaction fails, the amount is deducted and if a revised payment is not generated within 90 days, the amount should be deducted again.

Establishing alert mechanisms for critical errors, particularly in the context of billing, is required.

Performance testing and benchmarking of services.
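One of the constraints above is that a SUCCESSFUL disbursement must never return to INITIATED. A minimal sketch of how such a guard might look is shown here. The function name and the idea of a set of terminal statuses are assumptions made for illustration; only the INITIATED and SUCCESSFUL status names come from the text.

```python
# Hypothetical transition guard. Only INITIATED and SUCCESSFUL appear in the
# documentation; treating SUCCESSFUL as "terminal" is an assumption.
TERMINAL_STATUSES = {"SUCCESSFUL"}


def next_status(current: str, requested: str) -> str:
    """Return the status to store, refusing to reopen a terminal disbursement."""
    if current in TERMINAL_STATUSES and requested != current:
        raise ValueError(f"cannot move disbursement from {current} to {requested}")
    return requested
```

A caller would apply this check before persisting any status received for a given disbursement ID, so that a late or replayed INITIATED message cannot overwrite a completed payment.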

Public Finance involves the efficient management of public resources, ensuring transparency, accountability, and the effective use of funds. The iFIX solution offers multiple public finance use cases listed below:

Revenue collection - The solution offers features designed to streamline the collection of various municipal taxes including property tax, water charges, trade license fees, miscellaneous user charges and fee collection. mGramSeva launched by the Punjab government exemplifies the digitalisation of revenue and expenditure management at the rural governance level.

Benefit deliveries - The iFIX solution is capable of managing programs designed for social or economic benefits, wage disbursal, empowering Self-Help Groups or SHGs, and activities linked to community building or development. MUKTASoft is one such program launched by the Odisha government to employ the urban poor through various schemes.

Fund transfer and payments - Another important use case involves managing cash flow between different government departments, ministries, and projects, ensuring timely payments. The solution offers the scope to utilise the Just In Time (JIT) funding approach to ensure funds are disbursed only when required. The results are visible in improved fiscal discipline, better accountability, and a streamlined fiscal approach leading to better governance outcomes.

Procurement and contract management - iFIX enables the implementation of procurement policies that ensure competitive bidding and streamline the disbursal and utilisation of public funds. It also supports features to manage contracts with private vendors for government projects to ensure timely and cost-effective completion.

Budgeting and expenditure management - The iFIX solution offers the capability to prepare project estimates, forecast financials, and manage resource allocation details.

iFix Infra Setup & Deployment

Follow our blog section to get insights on public finance management systems, innovations, and impact.

Core service configuration and promotion docs

Seamless Integration with External Systems: The platform employs adapters to connect with various external agency systems, facilitating the smooth transmission and reception of fiscal data. These adapters ensure compatibility with existing systems, minimizing the need for extensive overhauls.

Enhanced Security and Reliability: iFIX incorporates multiple layers of security—ensuring data integrity, authentication, and secure data transmission—while its redundant design and failover mechanisms guarantee continuous operation in mission-critical environments.

Flexible Deployment Options: Whether deployed on-premises, within a private cloud, or via a hybrid model, iFIX is designed to meet various infrastructure and performance requirements. This flexibility allows organizations to choose deployment strategies that align with their technical capabilities and policy requirements.

iFIX adopts a multi-layered, distributed architecture pattern, ensuring flexibility, maintainability, and scalability. Each layer comprises a set of microservices with specific roles and responsibilities, facilitating independent deployment, maintenance, and updates. This design enhances interoperability and allows for parallel development and testing.

Flexibility and Maintainability: Components can be updated or replaced independently, reducing system downtime and simplifying maintenance.

Scalability: Each layer can be scaled independently to accommodate increasing loads or additional functionalities.

Reusability: Components can be reused across different applications, promoting consistency and reducing development time.

Parallel Development: Teams can work on different layers simultaneously, accelerating development and deployment cycles.

Security: Different security levels can be configured for each layer, enhancing overall system security.

Independent Testing: Components can be tested independently, ensuring robustness and reliability before integration.

This multi-layer architecture ensures that iFIX serves as a robust and adaptable platform for modernizing public finance management, aligning with strategic goals of transparency, efficiency, and accountability.

DIGIT Exchange

MDMS Service

IdGen Service

Creates Program for enabling further financial transactions.

Creates on-sanction when a sanction is received from the IFMS system. Maintains allocated and available amount for disbursal for a particular sanction. Forwards the sanction to the client-server.

Creates on-allocation when allocations are received from the IFMS system. Updates the allocated and available amount for the given sanction. Forwards the allocation to the client-server.

Creates disbursement, deducts available amount and forwards it to the IFMS for disbursement. On failure increases the available amount in sanction.
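The bookkeeping described above (allocations raise the available amount, disbursements lower it, failures restore it) can be sketched as a small in-memory ledger. This is not the Program Service implementation: the class, method names, integer amounts, and the insufficient-funds check are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Sanction:
    """Hypothetical running totals for one sanction (amounts as integers)."""
    sanctioned: int
    allocated: int = 0
    available: int = 0

    def on_allocation(self, amount: int) -> None:
        # An allocation received from IFMS raises allocated and available amounts.
        self.allocated += amount
        self.available += amount

    def disburse(self, amount: int) -> None:
        # Assumed validation: a disbursement cannot exceed the available amount.
        if amount > self.available:
            raise ValueError("insufficient available amount")
        self.available -= amount

    def on_disbursement_failed(self, amount: int) -> None:
        # On failure the deducted amount is returned to the sanction.
        self.available += amount
```

For example, after `on_allocation(600)` and `disburse(250)` the available amount is 350; if that disbursement later fails, `on_disbursement_failed(250)` brings it back to 600.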

/program-service/

In Odisha, a lack of reliable and timely fiscal data led to delayed payments under an Urban Employment Scheme.

The integration facilitates the electronic exchange of fiscal and service data. The outcome is evident in enhanced trust and reliability in data exchange processes.

The IFMS-iFIX integration streamlines financial operations by enabling Smart Contracting and Smart Payments, offering significant benefits:

Timely Payouts

Facilitates quick and accurate payments to Wage Seekers and Community-Based Organizations (CBOs), ensuring no delays in disbursing funds.

Functional Services for Citizens

Ensures that essential services are maintained without disruption, contributing to improved citizen satisfaction and governance.

Better Fund Management

Implements Just-in-Time Payments, allowing the Finance Department to optimize cash flow, reduce idle funds, and ensure resources are available when needed.

This integration bridges the gap between financial planning and execution, making payments more transparent, efficient, and aligned with service delivery goals.

Before the iFIX platform can be used, the master data must be configured directly into the respective collections.

(only if indexer service is used)

With the iFIX v2.4-alpha update, some of the DIGIT core services also need to be deployed. The builds of the same are also listed below. These have been picked from DIGIT-v2.8.

The iFIX platform has demonstrated its transformative potential through multiple reference applications designed to address critical challenges in public finance management (PFM). These applications, or exemplars, enhance fiscal management capabilities by providing real-time visibility and resolving inefficiencies in project-related financial processes.

In Punjab, the mGramSeva exemplar streamlines the information exchange across multiple agencies, fostering improved coordination and efficiency.

Odisha faced significant challenges in implementing schemes, particularly delayed payments to Community-Based Organizations (CBOs) and wage seekers. The delays primarily stemmed from labour-intensive, paper-based compliance, billing, and verification processes. MUKTASoft, an iFIX-based exemplar, aims to digitize and streamline the end-to-end process, enhancing efficiency, transparency, and timely payments.

A digital solution for governing bodies to manage public finance.

The iFIX solution provides a standardized framework allowing central governance bodies, state finance departments, and private institutions to institutionalize fiscal events, ensuring interoperability across multiple government tiers.

Onboard state or federal units and create their instances for streamlined management of public finance initiatives.

Access to real-time data on the system results in effective and faster decision-making.

Technology tools used for the solution design

Java 17

Apache Kafka

Postgres

Establish benchmarks for efficient implementation and improvement in service delivery.

Integrate the iFIX solution to expand capabilities in managing fiscal events at various levels, and reduce processing time and administrative burden linked to public finance transactions.

Develop new models that can address various concerns, including -

fiscal discipline and sustainability at all levels of government,

effective resourcing for responding to emergencies and disasters,

optimal strategies for raising credit/debt by the government,

effective plans for improvements in the efficiency of public goods creation/maintenance and delivery of public services

factors in making direct benefits more inclusive

These exemplars showcase the versatility and impact of the iFIX platform in transforming public finance management for improved governance and service delivery.

Solution design principles

The iFIX solution design is built as a Digital Public Good and follows a key set of design principles listed below.

Single Source of Truth - Data resides in multiple systems across departments and getting an integrated, consistent and disaggregated view of data is imperative.

Federated - Central, State and Local Governments represent the federated structure of government. This federated structure must be considered when designing systems that enable intergovernmental information exchange.

Unbundled - Break down complex systems into smaller manageable and reusable parts that are evolvable and scale independently.

Minimum - Minimum data attributes that have a well-defined purpose is mandatory; everything else is optional.

Privacy and Security - Ensuring the privacy of citizens, employees and users while ensuring the system's security.

Performance at Scale - The system is designed to perform at scale.

Open Standards - The system is based on open standards, making it easy to discover, comprehend, integrate, operate and evolve.

This is a new service implemented with iFIX specs.

Adapters

ifms-adapter

Connects iFIX to the IFMS system.

iFix-mukta-adapter

Transforms Works platform bills to disbursements.

Core Services

egov-mdms-service

egov-mdms-service:v1.3.2-44558a0-3

egov-indexer

egov-indexer:v1.1.7-f52184e6ba-25

egov-idgen

egov-idgen:v1.2.3-72f8a8f87b-7

IFIX Domain Services

Digit Exchange

digit-exchange:develop-1b9d9a2-23

This is a new service for exchanging data.

Program Service

The DIGIT Exchange Service is a robust data interchange platform designed to facilitate the seamless and secure exchange of digital information between two endpoints. Built with a fixed schema for headers and dynamic messaging capabilities, this service ensures reliable communication while prioritizing data integrity and confidentiality.

DIGIT-exchange can implement any service if it has the same request structure as the program.

Base Path: /digit-exchange/

API spec Click below to view it in Swagger Editor.

After installation of all required services, port-forward the program service and create programs for each ULB. A sample curl is added below.

Configure all program codes that you created for each ULB to the MDMS.

IFMS adapter data migration for mukta-adapter and program service

Use the specific branch for the iFIX migration.

Update environment variables according to the environment.

Build the Python migration script and deploy it in the environment.

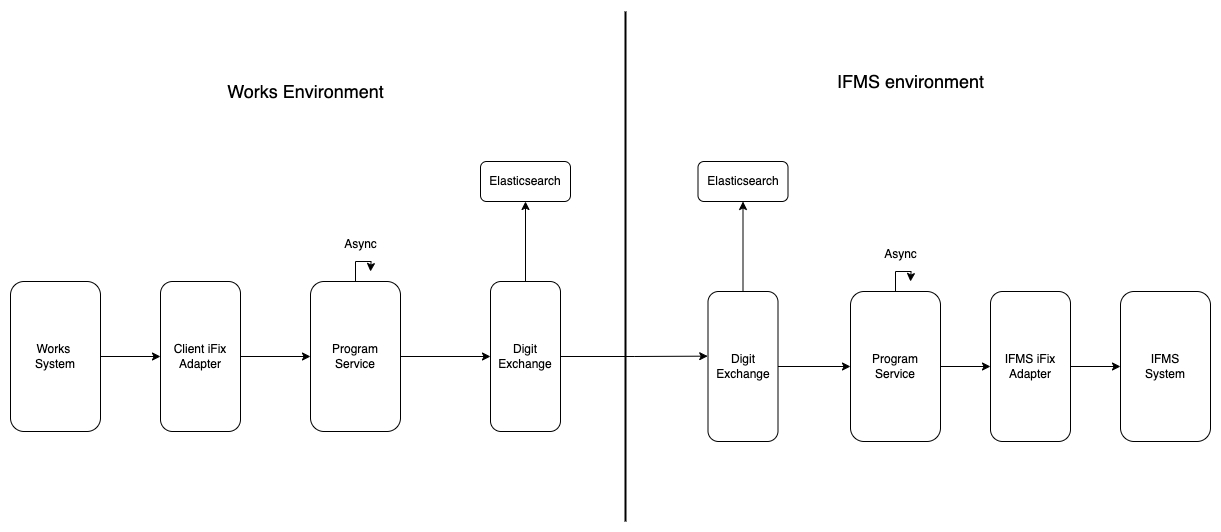

DIGIT Exchange functions as a connector bridging services deployed across diverse domains. Its primary role involves signing and verifying exchange messages and generating events for ingestion into Elasticsearch for dashboard visualisation.

Signs the exchange messages and sends them to respective systems according to receiver ID and current domain.

Verifies data received from other domains, converts it to JSON object and forwards it to the program service.

Pushes the data to Kafka for dashboarding and to make the calls asynchronous.

In case of any exception, sends a reply to the service that initiated the call.
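The sign-and-verify responsibility listed above can be illustrated with a short sketch. The envelope keys (`signature`, `header`, `message`) follow the sample request shown elsewhere in this documentation, but everything else is an assumption: the text does not say which algorithm DIGIT Exchange uses, so HMAC-SHA256 with a shared key is used here purely as a stand-in, and the function names are hypothetical.

```python
import hashlib
import hmac
import json


def _canonical(envelope: dict) -> bytes:
    # Serialise header and message deterministically so both sides sign the same bytes.
    body = {"header": envelope["header"], "message": envelope["message"]}
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign(envelope: dict, key: bytes) -> dict:
    """Return a copy of the envelope with its signature field filled in."""
    signed = dict(envelope)
    signed["signature"] = hmac.new(key, _canonical(envelope), hashlib.sha256).hexdigest()
    return signed


def verify(envelope: dict, key: bytes) -> bool:
    """Recompute the signature and compare it in constant time."""
    expected = hmac.new(key, _canonical(envelope), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope.get("signature") or "")
```

The sender would call `sign` before dispatching to the receiver's domain, and the receiving exchange would call `verify` before forwarding the message to its program service. A production deployment across organisations would more likely rely on asymmetric keys, which this sketch does not cover.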

/digit-exchange/

Delayed payments to CBOs and wage seekers were a key scheme implementation challenge. Beneficiaries faced lengthy wait times, with a baseline study across two pilot urban local bodies (ULBs) finding that over 50% of completed tasks were not processed for payment, and the rest encountered delays exceeding one month. Wage seekers come from low-income households, and such delays undermine the scheme’s welfare objectives.

Such delays arose largely from cumbersome, paper-based compliance, billing, and verification processes. Every step, from attendance tracking and bill submission to verification, approvals, and payment instructions, relied on manual processes. This increased the administrative burden on local government personnel, who are often already overburdened. These long-drawn processes also resulted in underutilisation of sanctioned funding, with funds parked idle in banks and limited transparency.

Objective: Launched in April 2020 by HUDD Odisha

The scheme aims to generate sustainable livelihoods for the urban poor, while creating and maintaining climate-resilient community assets, nurturing inclusive and equitable urban development.

Community-Centric Implementation: Bottom-up scheme design ensures community participation in identifying and prioritising public works.

iFIX specification details

iFIX Adapters & Dashboards is a fiscal data exchange solution that enables the exchange of standardized fiscal data between various agencies and ensures the visibility of that data. iFIX makes it possible to chain fiscal data together and establish a chain of custody for the entire lifecycle, from budgeting to accounting.

From the iFIX perspective, there are two types of agencies

The Fiscal Information Exchange (iFIX) platform employs a hexagonal architecture, also known as the Ports and Adapters pattern, to facilitate seamless and standardized fiscal data exchange among various government entities. This architectural approach ensures that the core business logic remains isolated from external systems and technologies, enhancing flexibility and maintainability.

Key Components of iFIX Architecture

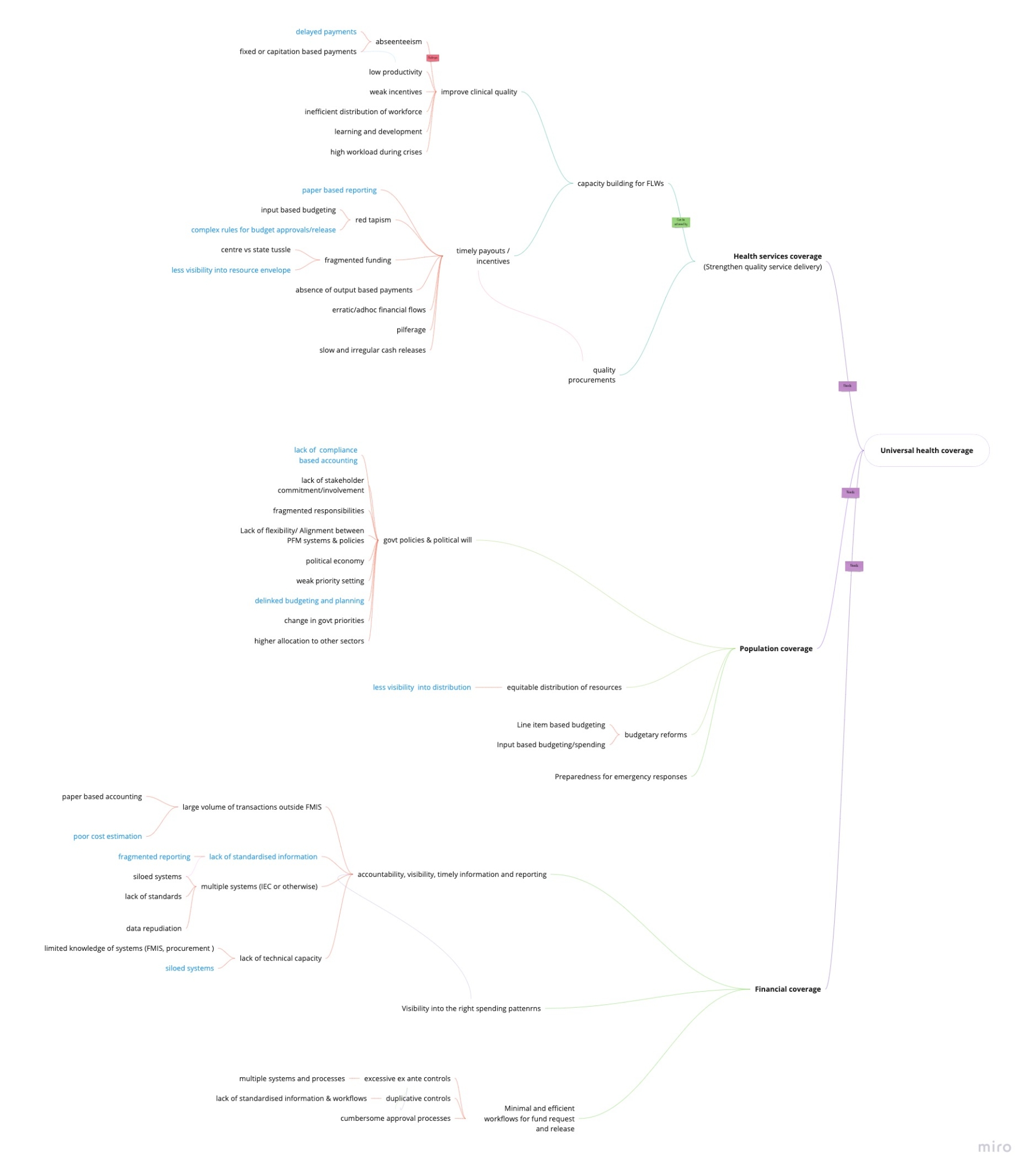

Public finance management is becoming an increasingly complex discipline owing to the compounding effects of socio-economic, technological, and institutional factors. The key challenge stems from the need to manage public funds in a transparent, efficient and accountable manner.

iFIX helps address the following challenges linked to the information on government fiscal programmes -

Unlocking fiscal and operational data locked in silos

Check out the coverage of news and events linked to the PFM platform

Punjab to build first of its kind fiscal information exchange platform

Punjab villages are now utilizing the mGramSeva app to conveniently pay their water bills. Developed on the DIGIT platform, mGramSeva is a mobile application specifically designed to handle the collection and management of revenue and expenditures.

curl --location 'http://localhost:8082/program-service/v1/program/_create' \
--header 'Content-Type: application/json' \
--data-raw '{
  "signature": null,
  "header": {
    "message_id": "123",
    "message_ts": "1708428280",
    "message_type": "program",
    "action": "create",
    "sender_id": "program@https://unified-dev.digit.org",
    "receiver_id": "program@https://unified-qa.digit.org"
  },
  "message": {
    "location_code": "pg.citya",
    "name": "ifix",
    "description": "Empowering local communities through sustainable development projects.",
    "start_date": 1672531200,
    "end_date": 1704067200,
    "children": null,
    "status": {
      "status_code": "INITIATED",
      "status_message": "ACTIVE"
    },
    "additional_details": {},
    "function_code": "in.pg.OGES",
    "administration_code": "in.pg.ac.HUDD.UID",
    "recipient_segment_code": "in.pg.rsc",
    "economic_segment_code": "in.pg.CE.IA.OC",
    "source_of_fund_code": "in.pg.CSS",
    "target_segment_code": null,
    "currency_code": "INR",
    "locale_code": "in.pg.citya"
  }
}'

Port forward the service and call the API to start the migration.

Beneficiaries: Wage seekers, transgender persons and women from Self-Help Groups are key recipients of the scheme’s benefits.

program-service:develop-92a135f-55

ifms-adapter:develop-bd05fc83-113

mukta-ifix-adapter:develop-4799181a-46

Fiscal Data Consumer - can query the fiscal data.

Both these roles are interchangeable.

Providers and consumers need to register on iFIX before they can post or query fiscal data. To register, the concerned person from the agency must provide the following information on the iFIX portal: Name of the Agency, Contact Person’s Name, Contact Person’s Phone Number, and Contact Person’s Official Email Address. An OTP is sent to the registered email address of that person.

Registered users -

logs in to the iFIX portal using the official email address

registers one or more systems as a provider, consumer or both

provides the name of the system

a unique ID is generated for each system (example: [email protected])

a secret API key is also generated for each system - use this key to post or query fiscal data

The API key can be regenerated if required - only one API key is active at a given point in time

The portal provides the ability to generate new keys for each system.
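The rule that only one API key is active per system at any time can be pictured with a small registry sketch. The class and method names are hypothetical and the storage is an in-memory dictionary; the portal's actual key format and storage are not described in this documentation.

```python
import secrets


class SystemRegistry:
    """Hypothetical registry that keeps exactly one active API key per system."""

    def __init__(self) -> None:
        self._keys: dict[str, str] = {}

    def register(self, system_id: str) -> str:
        # Registering a system issues its first key.
        return self.regenerate(system_id)

    def regenerate(self, system_id: str) -> str:
        key = secrets.token_urlsafe(32)
        # Overwriting the stored key is what invalidates the previous one.
        self._keys[system_id] = key
        return key

    def is_valid(self, system_id: str, key: str) -> bool:
        # Constant-time comparison against the single active key.
        return secrets.compare_digest(self._keys.get(system_id, ""), key)
```

After a call to `regenerate`, any request that still presents the old key fails `is_valid`, which matches the one-active-key behaviour described above.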

Fiscal data providers post the fiscal data in two ways.

A fiscal message - is directed to a specific consumer and is delivered to intended consumers. These messages are available for query by intended consumers only.

A fiscal event - iFIX stores the events for consumers to query

Fiscal Event consists of

Header

From

To

Date of Posting

Body

Fiscal Event Type e.g. Revenue, Expenditure, Debt

Fiscal Event Subtype

Revenue - Estimate, Plan, Demand, Receipt, Credit

Data providers can reverse a previous fiscal message or event. The data provider reverses the data by posting the same event with a negative amount in the line item(s). The data consumers should handle reversals appropriately.
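The reversal rule (post the same event again with negative line-item amounts) can be sketched as follows. The data classes loosely mirror the header and body attributes listed above, but the field names, the `account_code` attribute, and the use of integer amounts are assumptions for illustration rather than the iFIX schema.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LineItem:
    account_code: str  # hypothetical field name
    amount: int


@dataclass(frozen=True)
class FiscalEvent:
    sender: str        # "From" in the header
    receiver: str      # "To" in the header
    event_type: str    # e.g. "Revenue"
    subtype: str       # e.g. "Receipt"
    line_items: tuple[LineItem, ...]


def reverse(event: FiscalEvent) -> FiscalEvent:
    """Build the reversal: the same event with every line-item amount negated."""
    negated = tuple(replace(item, amount=-item.amount) for item in event.line_items)
    return replace(event, line_items=negated)
```

A consumer that sums the amounts of the original event and its reversal gets zero for each line item, which is one simple way to handle reversals on the consuming side.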

Data consumers can query fiscal data. They can query:

Messages - the unread messages delivered to them. When consumers read the unread messages, these messages are marked as read.

Events - fiscal events posted by other data providers.

Location

Administrative

Chart of Account

Head Of Accounts

MDMS Service

ID Gen

Program Service

Exchange

Program Service

Exchange indexer

Digit Exchange

Exchange devops

Digit Exchange

ifms-pi-indexer

Mukta-ifix-Adapter

Charts

Program Service

Environment

Program Service

Secrets

Program Service

SSU Details

MDMS Service

DIGIT Exchange acts as the secure and trusted bridge between independently deployed systems that need to communicate fiscal data. It ensures data integrity, non-repudiation, and secure transmission between producers (like program services) and consumers (like IFMS or auditing tools).

Message Authentication & Signing: Ensures that all outgoing and incoming messages are cryptographically signed and verified, preventing tampering and ensuring authenticity.

Event Publishing: Each successfully exchanged message is logged as an event. These events are published to Kafka and pushed to Elasticsearch to support operational dashboards and real-time monitoring.

Interoperability Backbone: DIGIT Exchange makes it possible for different organizations or departments to operate independent systems while still collaborating and sharing data securely.

Audit Trail & Observability: Tracks and stores the flow of all messages to enable full traceability and debugging capabilities across the exchange network.

The Program Service is the domain-specific core service within iFIX that manages fiscal operations — including sanctions, allocations, and disbursements — under defined financial programs.

Key Features & Responsibilities:

Program Definition: Allows administrators to define “programs” — logical boundaries for budget and fund flow such as health campaigns, infrastructure projects, or welfare schemes.

Transaction Management: Each fiscal transaction (e.g., allocation or disbursement) is linked to a program and governed by its lifecycle.

Validation & Business Rules: All incoming messages from the adapter are validated against business rules. This ensures that transactions are complete, legitimate, and follow the proper hierarchy of approval.

Interaction with DIGIT Exchange: Valid messages are forwarded to DIGIT Exchange for further routing to external financial systems (e.g., IFMS). Invalid messages are rejected with precise error codes.

State Management: Maintains records of program-wise amounts sanctioned, allocated, committed, and available for disbursement — enabling accurate fiscal control and audit readiness.

Adapters are the integration glue of the iFIX ecosystem. They decouple the internal working of iFIX from the unique data formats, protocols, and workflows of external systems.

There are two types of adapters:

Producer Adapter: Sits on the side of a department or donor system that generates fiscal data.

Consumer Adapter: Placed at the system which is the recipient of fiscal data (e.g., an IFMS or treasury system).

Core Functions & Advantages:

Data Transformation & Mapping: Converts messages between iFIX’s standardized fiscal event format and the target system’s native format (e.g., XML, JSON, SOAP). This ensures seamless compatibility regardless of the external system’s design.

System-Agnostic Integration: Adapters can be easily configured or extended to integrate iFIX with any kind of system, including legacy platforms, ERP solutions, MIS dashboards, or financial gateways.

Validation & Enrichment: Adapters can enrich messages (e.g., attaching metadata) or perform pre-validation before sending data to core services or external endpoints.

Reusability & Portability: Once an adapter is built for a particular system (like a state’s IFMS), it can be reused across other programs or departments, accelerating rollouts.

Loose Coupling: Adapters ensure that changes in external systems do not impact the core iFIX services, enabling independent evolution and easier upgrades.
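As an illustration of the data transformation role described above, the sketch below maps a flat event dictionary onto an XML payload for a target system. The element names and the flat input shape are assumptions; a real adapter would follow the target system's own schema, which is not given in this documentation.

```python
import xml.etree.ElementTree as ET


def to_target_xml(event: dict) -> str:
    """Map a flat, iFIX-style event dict onto a hypothetical XML payload."""
    root = ET.Element("FiscalEvent")
    for key, value in event.items():
        # One child element per attribute; values are written as text.
        child = ET.SubElement(root, key)
        child.text = str(value)
    return ET.tostring(root, encoding="unicode")
```

Because the mapping lives entirely inside the adapter, a change in the target system's format would only require changing this function, leaving the core services untouched.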

Generating relevant information based on common data standards

Ensuring the information generated is credible, reliable and verifiable

Accelerating fund flows by providing trusted, usable data and reducing the interdepartmental coordination time

The existing public finance system applies multiple information channel flows that are unique and can be accessed by various sources. iFIX simplifies the network and fiscal information flows through standardised formats and protocols.

As government service delivery requires multiple actors and interactions to come together across different levels, visibility of information is critical to bring down the cost of coordination. This includes real-time information on the financial health of a government agency/department - expenditure, revenue and availability of funds.

Public finance management faces significant challenges in terms of promoting accountability and transparency. The problem is more on account of siloed information structures that restrict the scope to get a broader view of the flow of funds or data across agencies and stakeholders.

The information flow is slow and limited, resulting in several gaps and breaks in the PFM processes. This is essentially attributed to the lack of information standards and exchange mechanisms, making it difficult to achieve a seamless data flow across agencies.

iFIX allows departments to share fiscal information from existing systems without having to invest in multiple integrations for different stakeholder requirements. The solution plays a pivotal role in driving efficient and performance-driven financial planning across all levels of governance. Real-time availability of financial information to stakeholders facilitates data-driven deployment of public funds and policy-making. The solution design specification sets the base for the real-time exchange of fiscal information across funding and implementing agencies.

iFIX identifies and resolves Public Financial Management issues like delays in funds flow, floating of unutilised funds, problems of low data fidelity, the administrative burden of implementation and outcome-oriented funding using the platform approach and associated policy reforms.

The platform helps achieve overall fiscal discipline, allocation of resources to priority needs and efficient and effective allocation of public services. Public financial management includes all phases of the budget cycle, including the preparation of the budget, internal control and audit, procurement, monitoring and reporting arrangements, and external audit.

The standardised fiscal event and exchange mechanism enable various government agencies e.g. various departments, local governments, autonomous bodies, national government, and development agencies to -

exchange fiscal data much like email systems exchange data with each other

ease flow of fiscal information resulting in better planning, better execution, better accounting and better auditing thus transforming the entire PFM cycle

promote transparency and improve accountability while ensuring real-time access to the financial health of the government stakeholders

iFIX offers a solution that standardises fiscal event data and the capabilities to exchange information across multiple sources. It is built as a digital public good and is open source with minimal specifications. The design incorporates the security and privacy of all data and users and ensures performance at scale.

Data standards streamline the flow of information paving the way for timely exchange and cost-effective means of managing fiscal events. The iFIX solution establishes the standards and specifications for fiscal event data that make it easier to exchange fiscal information between funding and implementing agencies. Event data is captured in real-time and at the micro level which ensures there is no intentional or unintentional data loss. Any transaction triggers a fiscal event that is accessible to integrated agencies for necessary approvals.

The standardized data exchange approach ensures that iFIX is not a replacement for the existing finance system. It is just a coordination and visibility layer between agencies within government and across governments.

Dainik Bhaskar dated 02-06-2023

RozanaSpokesman dated 02-06-2023

Jag Bani dated 02-06-2023

The MUKTA iFIX Adapter service is designed to facilitate communication between the Expense Service and the Program Service. It acts as a mediator, listening for payment creation events from the Expense Service, enriching the payment request data, and generating disbursement requests. These disbursement requests are then sent to the Program Service for further processing.

Expense Service

Expense Calculator Service

MDMS Service

Bank Account Service

The creation of a disbursement request involves listening to payment creation events on a designated topic. When a payment is created, the adapter processes the event, extracts relevant information, and forwards the enriched disbursement request to the Program Service for further processing.

In case of a failure in the payment topic, we also have the option to manually create a disbursement using the adapter by providing the payment number.

We can search for the created disbursements.

After forwarding the disbursement to the program service, it undergoes sanction enrichment. Subsequently, it is forwarded to the Digit Exchange service, establishing a connection between two servers. Once a response is received from the IFMS system, the disbursement undergoes further enrichment and is sent back to the Mukta Adapter. The adapter then updates the payment status based on the statuses received in the disbursement.
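The flow described above (enrich a payment into a disbursement, then update the payment from the statuses that come back) can be illustrated with a minimal Python sketch. This is not the adapter's actual code: the class names, field names, and status values (`INITIATED`, `SUCCESSFUL`, `FAILED`, `PARTIAL`) are assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Payment:
    payment_number: str
    tenant_id: str
    amounts: Dict[str, float]  # beneficiary id -> amount (hypothetical shape)
    status: str = "INITIATED"  # status names are assumptions


@dataclass
class Disbursement:
    payment_number: str
    tenant_id: str
    lines: List[dict] = field(default_factory=list)


def build_disbursement(payment: Payment, bank_accounts: Dict[str, str]) -> Disbursement:
    """Enrich a payment with bank account details, one line per beneficiary."""
    lines = [
        {"beneficiary": b, "amount": amt, "account": bank_accounts[b], "status": "INITIATED"}
        for b, amt in payment.amounts.items()
    ]
    return Disbursement(payment.payment_number, payment.tenant_id, lines)


def update_payment_status(payment: Payment, disbursement: Disbursement) -> str:
    """Derive the payment status from the statuses on the disbursement lines."""
    statuses = {line["status"] for line in disbursement.lines}
    if not statuses:
        return payment.status          # nothing to derive a status from
    if statuses == {"SUCCESSFUL"}:
        payment.status = "SUCCESSFUL"
    elif statuses == {"FAILED"}:
        payment.status = "FAILED"
    elif "INITIATED" not in statuses:
        payment.status = "PARTIAL"     # mix of successful and failed lines
    return payment.status


payment = Payment("PAY-1", "od.example", {"b1": 100.0, "b2": 50.0})
disbursement = build_disbursement(payment, {"b1": "ACC-1", "b2": "ACC-2"})
```

In this sketch the payment stays in its current status while any line is still pending; how the real adapter treats partially processed disbursements is not stated in this document.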

/mukta-ifix-adapter/

Authors: Manish Srivastava and Prashanth Chandramouleeswaran

Over the last two decades, the evolving landscape of public financial management (PFM) has witnessed a remarkable transformation brought about by government agencies adopting technology to perform their duties. The State Finance Departments, through their Integrated Financial Management Information Systems/ Comprehensive Financial Management Systems (IFMIS/CFMS), have set the precedent for others to follow. These digital platforms have successfully harnessed vertical efficiency gains within government departments, leading to benefits in cash management, accounting, streamlining revenue, expenditure, and payments, and improved fiscal accountability.

As these systems become pervasive across the state a new aspiration for governance emerges: information exchange between government agencies across levels. The next wave of digital transformation hinges on the seamless exchange of data between different departments and government agencies, enabled by horizontal interoperability. The Finance Department, as the pioneer in this domain, is best positioned to anchor this transformation. The exchange of data between different government agencies holds immense potential with several key benefits such as:

Improved Coordination: Seamless data exchange fosters better coordination among various agencies, culminating in a truly unified "single window" experience for citizens and businesses. This integrated approach eliminates redundancies and enhances service delivery efficiency.

Enhanced Data Quality and Reuse: Efficient data sharing enables improved beneficiary identification and targeted benefit delivery. Agencies can collaboratively leverage data to ensure that government resources are directed precisely where they are needed, optimising social impact.

Strengthened Compliance Monitoring: Seamless data exchange empowers better compliance monitoring. Real-time access to accurate data enables authorities to make informed decisions and take prompt action.

Government implementing agencies are engaged majorly in service delivery, infrastructure development, and benefits distribution. The initial surge of digital transformation within these agencies was propelled by the implementation of departmental systems, which facilitated data digitisation and the automation of departmental processes. As they approach the next phase of their digital evolution it is necessary to empower these agencies to truly deliver on the ‘One Government’ experience. This transformation can be realised sooner by fostering enhanced coordination and collaboration among these agencies, culminating in the realisation of a unified experience for citizens and businesses.

The potential for augmenting governance outcomes is significantly magnified through seamless information exchange. This is where a standardised information exchange layer assumes a pivotal role, propelling service and fiscal event information electronically across agencies and levels in a disaggregated, real-time manner. This reliable conduit ensures comprehensive and timely insights for all stakeholders throughout the PFM cycle. Figure 1 illustrates the possibilities of connecting line departments, parastatals and other agencies seamlessly, without the need for multiple point-to-point integrations. The benefits of the exchange layer across the three key activities are summarised below:

Empowering Service Delivery: Unlocking Revenue and Efficiency

The challenges in responsive and functional service delivery are multifaceted, but the pivotal challenge is that the flow of information between funding and implementing agencies is slow and limited. As government service delivery requires multiple actors and interactions to come together across different levels, the exchange of information is critical to bring down the cost of coordination and improve operational effectiveness. This includes real-time information on the financial health of a government agency/department- expenditure, revenue and availability of funds.

An information exchange layer can set the base for the electronic and real-time flow of standardised disaggregated data. For example, imagine a scenario where a government agency is looking to augment its revenue by addressing revenue leakages. The information exchange layer can allow relevant departments to share required data with each other to detect anomalies and plug in the leakages. This financial augmentation can, in turn, fuel service delivery improvements.

Accelerating Infrastructure Development: The Power of Real-time Intelligence

Infrastructure development, a cornerstone of progressive governance, can significantly benefit from the power of information exchange. Robust liability and expenditure management, accurate revenue and expenditure forecasts, and comprehensive program monitoring become attainable objectives through this transformative approach.

As an illustrative example, envision a Finance Department that forecasts expenditures with precision through a payments calendar, meticulously synced with real-time information. The approval of project estimates can trigger a synchronised chain of events, enabling optimal resource allocation and expeditious project execution. Visibility on liabilities at an early stage can also minimise sudden unplanned demand for large sums of money.

Citizen-Centric Benefits Delivery: Minimising Inclusion and Exclusion Errors

Benefits delivery, a critical dimension of government outreach, gains unprecedented precision through information exchange. An information exchange layer can bring data from multiple departments together to ascertain eligibility and minimise inclusion and exclusion errors. Incorporating such an exchange layer also has profound implications for migrant workers, for instance. Often, these individuals face challenges accessing benefits due to the transient nature of their work. With the information exchange layer in place, their data can be maintained centrally, enabling them to access benefits through PDS irrespective of their location, ensuring continuity in benefits, and safeguarding benefits against leakages while bolstering public trust in governance.

The Finance Department (FD) in states is uniquely poised to spearhead the implementation of an information exchange layer for two reasons:

Financial Steward of the State: As the custodian of the Comprehensive Financial Management System (CFMS) and the key institution in the financial landscape of the state, the FD plays a pivotal role in ensuring the optimal utilisation of public funds. This vantage point equips the FD to champion the establishment of an information exchange layer that can revolutionise the way information flows across agencies.

Linking Outlays to Outputs and Outcomes: Leveraging its comprehensive oversight, the FD can intricately map out the trajectory from budgetary allocations to tangible outcomes. This strategic insight equips the FD to provide invaluable inputs to various Line Departments, enabling them to align policy priorities, budgets, and execution seamlessly. Furthermore, this approach empowers the FD to offer ground-level perspectives before the commencement of the next budget cycle, fostering a dynamic and effective financial planning process.

Creating Value through Information Exchange: By orchestrating this transformation through a digital information exchange layer, the administrative burden of data collation, cleaning, and curation—currently borne by officials across ministries—can be substantially alleviated. This, in turn, equips these officials to channel their efforts into profound analysis and thoughtful policy recommendations.

Key Challenge to Address: While the concept of an information exchange layer holds immense promise, it is essential to address the key challenge associated with it: concerns about data visibility. Many stakeholders hesitate to adopt solutions because they worry about information exposure. We have addressed this challenge through an architectural foundation of robust authorizations, access limitations, security protocols, privacy measures, and inherent authentications, so that data can be accessed only by the stakeholders for whom it is meant.

The FD’s role of financial stewardship provides an innate potential to implement the vision of an information exchange layer. As we navigate the next set of reforms in governance, the concept of information exchange has the prospect to be the catalyst to unlock unprecedented governance potential. It harmonises diverse government activities, nurtures fiscal prudence, and transforms service delivery. The journey ahead promises an environment where governance is not just digital, but also interconnected, efficient, and truly transformative.

Authors: Manish Srivastava & Gautam Ravichander

"In 50 years, every street in London will be buried under nine feet of manure," The Times reported in 1894. At that time, Londoners commuted primarily by horse cart, and the city had 50,000 horses, each producing 15-35 pounds of manure a day. This was a health nightmare for the city administration.

In 1853, Eugenio Barsanti and Felice Matteucci built the first gas-based internal combustion engine. By 1879, Carl Benz demonstrated his one-cylinder two-stroke unit built on a gas engine. By 1904, the UK was developing a motor vehicle act requiring drivers to have a license, enforcing speed limits, and penalties for reckless driving. Today, the number of registered vehicles across the world is nearing 1.5 billion. London has 2.5 million of these and the city is not covered in manure.

General purpose technologies like internal combustion engines provide a base foundation to accelerate innovation. A well-defined specification evolves around these general-purpose technologies enabling various actors to build interoperable components which can then be combined into various products to meet a variety of needs. Over time market actors compete in building better and cheaper parts with the confidence that it can work with the existing and new products.

However, this is not a blog about horses, manure and automobiles. This is about Public Finance Management.

Public Finance Management drives government and all development programs, be it through the development of public infrastructure, delivery of public services or direct benefits transfers. It does this by making the right amount of money available from various funding sources/agencies to the implementing agencies at the right time - in a fiscally sustainable and responsible manner. In order to do so, funding agencies need information from the implementation agencies on how the money is being spent. So, each flow of money from funding agencies is correlated with the flow of information from implementing agencies in the reverse direction.

Development programs are funded by various stakeholders - national governments, sub-national governments, local governments, development bodies and various multilateral agencies. At a global scale, multilaterals and bilaterals fund a variety of development programs across countries. There are thousands of funding and implementing agencies across the world. Money and information need to flow between agencies seamlessly for accelerated development - especially if we are to meet the sustainable development goals, which have been set back by the recent COVID pandemic and ongoing war.

In a manner of speaking, today's information flow is like the flow of commuters in horse carts in 19th-century London. Existing methods often lead to issues of poor quality and delays in the flow of information. This is the manure that clogs the information highways and impedes development. What is needed today is a general-purpose innovative technology like the internal combustion engine that can transform the flow of information in the public finance management space and unleash development.

We believe that fiscal information data standards equate to general-purpose technology. Fiscal information data standards can substantially increase the velocity and quality of PFM information flow - similar to how email standards (like SMTP, POP3, IMAP) help us exchange emails across the world. Fiscal Information Data standards can streamline the flow of information between funding and implementing agencies around the world. Through these standards, we can start modernising the world of PFM to bring about a more seamless and coordinated way of driving development around the world.

Overview

The Program Service is constructed using iFIX specifications and serves as an extensive platform aimed at simplifying program creation, sanction management, fund allocation, and disbursement execution. It equips organizations with essential tools to effectively oversee available funds and guarantee transparent and accountable distribution to designated beneficiaries.

Expenditure - Estimate, Plan, Bill, Payment, Debit

Debt - in progress - will be provided later.

An array of fiscal line items

Amount

CoA

Location Code - from Location Registry

Program Code - from Program Registry

Project Code - from Project Registry

Administrative Hierarchy Code from Administrative Hierarchy Registry

Start Date of Period

End Date of Period

Attachment - Attachments consist of additional attributes like key-value pairs e.g. Account Number, Correlation ID or Documents

Signature - A fiscal message can be signed by multiple agencies. Each agency adds its signature to the Signature array, whose entries contain the following values -

Array of Signature

System

eSign - Signed Value of the Fiscal Event/Message Body using the System Key

Purpose - Acknowledgement or Approval or Rejection

Comments

Date of Signing
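The attributes listed above can be pictured as a simple data structure. The sketch below is illustrative only: the Python class and field names are assumptions derived from the attribute names in the list, not the iFIX schema itself, and dates are assumed to be epoch milliseconds.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Purposes named in the attribute list above.
ALLOWED_PURPOSES = {"Acknowledgement", "Approval", "Rejection"}


@dataclass
class FiscalLineItem:
    amount: float
    coa: str                      # chart of accounts code
    location_code: str            # from the Location Registry
    program_code: str             # from the Program Registry
    project_code: str             # from the Project Registry
    admin_hierarchy_code: str     # from the Administrative Hierarchy Registry
    period_start: int             # assumed epoch milliseconds
    period_end: int               # assumed epoch milliseconds


@dataclass
class Signature:
    system: str
    esign: str                    # signed value of the message body
    purpose: str                  # Acknowledgement, Approval or Rejection
    comments: str
    signed_on: int                # assumed epoch milliseconds


@dataclass
class FiscalEvent:
    event_type: str               # e.g. Estimate, Plan, Bill, Payment, Debit
    line_items: List[FiscalLineItem]
    attachments: Dict[str, str] = field(default_factory=dict)
    signatures: List[Signature] = field(default_factory=list)

    def total_amount(self) -> float:
        """Sum the amounts of all fiscal line items."""
        return sum(item.amount for item in self.line_items)

    def add_signature(self, signature: Signature) -> None:
        """Append a signature; several agencies may sign the same message."""
        if signature.purpose not in ALLOWED_PURPOSES:
            raise ValueError(f"unknown purpose: {signature.purpose}")
        self.signatures.append(signature)
```

A bill with two line items would then carry one `FiscalEvent` whose `total_amount()` is the sum of both lines, with each approving agency appending its own `Signature`.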

mukta-ifix-adapter-persister

Mukta-ifix-Adapter

Adapter Helm

Mukta-ifix-Adapter

Adapter Environment

Mukta-ifix-Adapter

Adapter Encryption

Mukta-ifix-Adapter

Organization Service

User Service

Program Service

Encryption Service

Head of accounts to be used for the Mukta scheme at the state level - to be provided by HUDD

Spending unit details specific to each ULB are to be provided by HUDD.

HeadOfAccounts

    "HeadOfAccounts": [
      {
        "id": "1",
        "code": "221705800358641045908",
        "name": "General Head",
        "sequence": 1,
        "schemeCode": 13145,
        "active": true,
        "effectiveFrom": 1682164954037,
        "effectiveTo": null
      }
    ]

SSUDetails

    "SSUDetails": [
      {
        "id": "1",
        "ssuCode": "OLSHUD001",
        "ddoCode": "OLSHUD001",
        "granteeAgCode": "GOHUDULBMPL0036",
        "granteeName": "ANGUL MUNICIPALITY",
        "programCode": "PG/2023-24/000310",
        "ssuId": "1621",
        "ssuOffice": "angul_op",
        "effectiveFrom": 1682164954037,
        "effectiveTo": null,
        "active": true
      }
    ]

Precise Revenue and Expenditure Forecasting: The exchange of timely and accurate data contributes to precise revenue and expenditure forecasting. This, when combined with Just-In-Time payments (JIT), promotes fiscal prudence and efficient resource allocation across all levels of government.

The IFMS adapter manages the funds summary based on heads of account and SSU codes. It creates a sanction for each combination of head of account and SSU details, based on the ULB tenant ID.

Three types of transactions can be received from the JIT VA API -

Initial Allotment - A new sanction is created only if AllotmentTxnType is Initial Allotment.

Additional Allotment - The transaction amount is added to the existing sanction.

Allotment Withdrawal - The transaction amount is deducted from the existing sanction.
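The three transaction types above can be sketched as a small function. This is an illustration under stated assumptions, not the adapter's code: the string values used for the transaction type, the `(head of account, SSU code)` key, and the error handling for a missing or duplicate sanction are all hypothetical.

```python
from typing import Dict, Tuple

SanctionKey = Tuple[str, str]  # (head of account code, SSU code) - assumed key


def apply_allotment(
    sanctions: Dict[SanctionKey, float],
    key: SanctionKey,
    txn_type: str,
    amount: float,
) -> float:
    """Apply one allotment transaction and return the resulting sanction amount."""
    if txn_type == "Initial Allotment":
        # Only an initial allotment creates a new sanction.
        if key in sanctions:
            raise ValueError("sanction already exists")  # assumption, not from the source
        sanctions[key] = amount
    elif txn_type == "Additional Allotment":
        if key not in sanctions:
            raise KeyError("no sanction to update")      # assumption, not from the source
        sanctions[key] += amount
    elif txn_type == "Allotment Withdrawal":
        if key not in sanctions:
            raise KeyError("no sanction to update")      # assumption, not from the source
        sanctions[key] -= amount
    else:
        raise ValueError(f"unknown transaction type: {txn_type}")
    return sanctions[key]


sanctions: Dict[SanctionKey, float] = {}
key = ("221705800358641045908", "OLSHUD001")
apply_allotment(sanctions, key, "Initial Allotment", 1000.0)
apply_allotment(sanctions, key, "Additional Allotment", 500.0)
apply_allotment(sanctions, key, "Allotment Withdrawal", 200.0)
```

After these three calls the sanction for the sample head of account and SSU code holds 1300.0.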

When a bill is approved, this service creates a payment using the expense service.

Consumers listen to the payment-create Kafka topic, generate payment instructions (PI) from the payment and bill details, and post each PI to the IFMS system using the JIT API.

A new PI is generated only when enough funds are available under a head of account for that tenantId.

Before a PI is posted, it is enriched with bank account details, organisation and individual details, and so on.

After creating the PI, the service deducts the PI amount from the available balance in the funds summary.

Once a PI has been created for a payment, the user cannot generate another PI for that payment unless the existing PI fails.

The service logs each PI status call and saves it in the DB.
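The rules above (funds check, balance deduction, one active PI per payment, and a status log) can be sketched as follows. The class, method names, and status values are hypothetical, and an in-memory list stands in for the database.

```python
from typing import Dict, List, Optional, Tuple


class FundsSummary:
    """In-memory stand-in for the funds summary and PI status log."""

    def __init__(self, balances: Dict[str, float]) -> None:
        self.balances = dict(balances)             # head of account -> available balance
        self.pi_status: Dict[str, str] = {}        # payment id -> latest PI status
        self.status_log: List[Tuple[str, str]] = []

    def create_pi(self, payment_id: str, head_of_account: str, amount: float) -> Optional[str]:
        """Create a PI if allowed; return its status, or None if funds are short."""
        current = self.pi_status.get(payment_id)
        if current is not None and current != "FAILED":
            raise ValueError("a PI already exists for this payment")
        if self.balances.get(head_of_account, 0.0) < amount:
            return None                            # not enough funds: no PI is generated
        self.balances[head_of_account] -= amount   # deduct from the available balance
        self.record_status(payment_id, "INITIATED")
        return "INITIATED"

    def record_status(self, payment_id: str, status: str) -> None:
        """Log every status call and remember the latest status."""
        self.status_log.append((payment_id, status))
        self.pi_status[payment_id] = status


funds = FundsSummary({"221705800358641045908": 1000.0})
funds.create_pi("PAY-1", "221705800358641045908", 400.0)
```

Whether the deducted amount is returned to the balance when a PI fails is not stated in this document, so the sketch leaves that step out.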

Program Service takes care of Program, Sanction, Allocation and Disbursement using the standardized exchange interface.

API spec YAML is here. Click below to view it in Swagger Editor.

Base Path: /program-service/

API spec YAML is here. Click below to view it in Swagger Editor.

Allocation

Authors: Ritika Singh, MSC | Prashanth Chandramouleeswaran & Ameya Ashok Naik, eGov

How far would a dollar go if it went straight to someone in need, when they need it most? With government-to-person (G2P) payments, governments increasingly disburse welfare benefits directly to individuals or households. These high-volume, low-ticket-size G2P transactions are vital for boosting financial inclusion and empowering vulnerable communities. Globally, programs like Brazil’s Bolsa Familia and Zambia’s SWL report cost savings, leak reduction, and timely payments for grant recipients through digital payments. In India, financial inclusion has been boosted through Jan Dhan bank accounts, the use of Aadhaar for identity verification, and mobile payments applications enabled by UPI, creating the possibility for government schemes to incorporate Direct Benefits Transfers (DBT).

While promising, digital G2P payments remain in their early stages, and face challenges like friction in payments, information gaps, and contextual barriers. The MUKTA scheme, in the state of Odisha in India, is an example of multi-stakeholder collaboration to reengineer digital systems and government policies and processes to operationalise digitally-enabled Smart Contracting and Just-in-Time Funding Systems (JiT-FS). This has led to enhanced reach, improved observability, and a clear understanding of performance bottlenecks, empowering stakeholders to continuously refine and optimise the system.

MUKTA is a pioneering initiative, designed to generate wage employment for vulnerable workers in urban Odisha during the COVID-19 pandemic. Rooted in community needs and responsive to local demands, MUKTA leverages existing Community Based Organizations (CBOs) to execute public works projects which are sustainable and climate resilient. These CBOs enlist wage seekers to work on these projects, generating income for the latter.

Delayed payments to CBOs and wage seekers were a key scheme implementation challenge. Beneficiaries faced lengthy wait times, with a baseline study across two pilot Urban Local Bodies (ULBs) finding that over 50% of completed tasks were not processed for payment, and the rest encountered delays exceeding one month. Wage seekers come from low-income households, and such delays undermine the scheme’s welfare objectives.

Such delays arise largely from cumbersome, paper-based compliances, billing, and verification processes. Every step – from attendance tracking and bill submission, to verification, approvals, and payment instructions – relied on manual processes. This increased the administrative burden on local government personnel, who are often already overburdened. These long-drawn processes also resulted in underutilisation of sanctioned funding, with funds parked idle in banks and limited transparency.

These challenges threatened to undermine MUKTA's goals. It was clear that a transformative intervention was required. To address these challenges, MUKTA embraced a three-phase "Smart Payment" approach:

Extensive consultations with stakeholders at each tier of government – Finance Department (FD), Housing and Urban Development (H&UD) Department, local government officials, and CBO representatives – helped identify key requirements for completing payments under the MUKTA scheme, and administrative inefficiencies that prevented timely completion.

Such schemes require multi-entity coordination: project definition, estimate creation, progress monitoring, and bill approval take place at the local government level; sanctioning overall fund tranches and executing electronic payments is done by the State government. A first step in the solution was identifying what information was needed for the State to disburse payments, and how it could be communicated most efficiently. Existing Integrated Financial Management System (IFMS) and Public Finance Management System (PFMS) data standards were leveraged to develop functional requirement specifications, leading to the development of a two-part solution: MUKTASoft, which streamlines processes and reporting by scheme implementers, and JiT-FS (Just-in-Time Funding System), which simplifies and speeds up fund disbursal.

MUKTASoft is a workfare scheme management platform, which streamlines project management and information flow among CBOs, local government bodies, H&UD, and FD. The expedited flow of standardised project information is key to reducing delays in payments. Key operational components of MUKTASoft include project finalisation, wage-seeker registration, digital attendance tracking, expense logging, and payment verification, all streamlined through user-friendly interfaces for different sets of users (CBO, ULB official, etc.)

Keeping in mind the administrative burden-related challenges identified, MUKTASoft was designed to improve overall efficiency of local and state government officials by:

Simplifying the process of project progress tracking and wage payment approval, aiming to move from over twelve steps (as identified in the baseline) to a simple three-step (maker-checker-approver) approach for each key document.

Enhancing visibility of project progress or delays in payments, ensuring that the responsible authority/ individual takes accountability for these, and enabling them to take steps to resolve these.

Enabling faster payments to wage seekers using smart payments through direct integration with the state IFMS.

Aligning project management and scheme verifications sets the stage for integrating smart payments, utilising digital technologies such as JiT-FS for pull-based release of funds once project milestones are achieved. Core PFM principles like "single source of truth" (ensuring data accuracy), "observability" (real-time tracking of performance), and "minimising administrative burden" through smart contracts have been woven into the design of both MUKTASoft and JiT-FS, strengthening transparency, accountability, and efficiency.

The implementation phase required managing multiple dependencies. One of the fundamental challenges was building trust and reliability in the solution, to replace manual ways of working, by showcasing high success rates. The deployment timeline faced disruptions, and development hurdles were common initially – as is typical when new systems are integrating for the first time. Collaborative testing uncovered edge cases affecting transaction success rates, and a robust User Testing phase helped to validate various scenarios and user needs.

Checks and balances within MUKTASoft and the JiT module were introduced and implemented to address these emerging issues. For instance, at the project finalisation stage, MUKTASoft checks for availability of funds to cover the expenses of that project. This sanction validation establishes a spending ceiling before approval of projects or payments – a key control that the State Finance Department must maintain. Similarly, when payments are directly transferred to wage-seekers' bank accounts, each transaction's success or failure must be checked, and corrective measures taken where payments have failed to go through.

Local governments, while initially hesitant, saw that using MUKTASoft for project creation, estimate approval, and work orders had tangible benefits: reduced administrative burden, fewer delays, faster payments, and enhanced accountability. With clear demonstration of the value to all stakeholders, adoption of the solution became more universal.

Reduced delays: Digitising processes from attendance tracking to payment verification dramatically streamlined workflows, facilitating disbursement of payments to wage seekers and CBOs. Pilot data indicates around 60% reduction in payment disbursement time for wage seekers. Under the new system, beneficiaries are no longer waiting months to get paid, with most bills now being processed in a matter of days.

Enhanced efficiency: Automation freed up local government officials from administrative burdens, allowing them to focus on project management and monitoring, leading to improved project execution and resource utilisation.

Transparency and data-driven decision making: The integrated data platform enabled real-time tracking of project progress, expenditure, and beneficiary coverage, enhancing transparency and accountability for all stakeholders. For administrators, insights from real-time data facilitated informed decision-making, allowing for quicker identification and resolution of bottlenecks, and strategic allocation of constrained resources.

With the pilot successful in 2 ULBs, the system is now being scaled to a total of 25 (out of 115) Urban Local Bodies (ULBs) in Odisha. MUKTA's journey offers valuable lessons for other G2P initiatives seeking to leverage technology for efficient PFM and inclusive development:

Existing infrastructure matters: Enrolment of wage seekers is simplified by using Aadhaar for identity verification. With Aadhaar in turn linked to Jan Dhan accounts, mapping of wage seekers to bank accounts is already in place. Widespread use of UPI addresses concerns around cash in / cash out. MUKTASoft and JIT-FS are a new wave of innovations, relying on building blocks provided by IndiaStack and related reforms.

Process streamlining is a key lever for any tech-enabled reform: When workflows are digitised, with automation and minimised manual intervention, the time and effort users have to spend on administrative tasks decreases, and they can focus on tasks that require human interaction or attention. At the same time, the reliability and timeliness of data improves, enabling administrators to better manage performance, and policy makers to develop data-driven plans and strategies.

Active and consistent collaboration: Strong partnerships between government, technology providers, and frontline users (including grassroots organisations) are essential for successful implementation. The solution will be used when stakeholders feel ownership over it, for which they should be involved throughout the process, with the needs they articulate being prioritised in product design.

By adopting these lessons and building upon MUKTA's success, other G2P programs can unlock the transformative potential of technology to deliver effective social safety nets and empower vulnerable communities across the globe.

The early success observed with smart payments for the MUKTA scheme underscores the transformative potential of technology in G2P transactions to reduce delays in service delivery and heighten public finance efficiency. It serves as a model for government agencies, prompting them to adopt Smart Payments as a catalyst for inclusive development.

Odisha's journey sets a model for an efficient and citizen-centric benefit delivery system, emphasising the need for adaptive strategies, collaborative partnerships, and a commitment to governance principles. Tailored to the local context, the solution can progress from basic Smart Payments, encompassing digital recording of conditional payments and compliances, to a medium level incorporating workflow management systems and APIs. The ultimate moonshot solution integrates AI/ML models, smart devices, and Internet-of-Things (IoT) to revolutionise the public finance landscape.

Empowering wage seekers: Timely wage payments and improved project execution under the Smart Payment system contributed to enhanced livelihood opportunities and overall well-being for urban communities.

Data fragmentation undermines progress at all stages: All stakeholders must accept a "single source of truth", which they contribute to by ensuring that all transactions take place through a single system of record. While some transition period may be needed to achieve this goal, even in this time, data should be updated in the system of record promptly, with appropriate verification / triangulation.

Adoption requires users to trust the new way of working: Adoption is not instant; extensive training and support are essential for overcoming initial resistance. As each set of stakeholders sees the benefits of the new system, they will be more likely to use it, and to advocate for it with their peers. This can also be leveraged in periodic re-training, as trained resources move and new resources join the department.

Adaptability is essential: Unforeseen challenges are inevitable. Embracing flexibility and a willingness to find solutions are crucial for successful implementation.

Jasminder Saini

CEGIS

Department of Social Security, Women & Child Development, Punjab

CBGA, CSEP etc (get confirmation from Ameya)

Department of Local Government, Punjab

ODI

Department of Public Works, Punjab

PD (Public Digital)

Department of Social Justice, Empowerment and Minorities

ThinkWell

Department of Governance Reforms, Punjab

mGramSeva

Pradeep Kumar

Jasminder Saini

J-PAL

Water Supply and Sanitation Department, Punjab

Saloni Bajaj

Aveek De

IIM-B

PSPCL

Debasish Chakaraborty

Ajay Bansal

PayGov

Ramkrishana Sahoo

Snehal Gothe

MuktaSoft

Nirbhay Singh

Prashanth C

Janaagraha

State Urban Development Agency, Odisha

Arindam Gupta

Dr. Subrata Biswal

MSC

Housing and Urban Development Department, Odisha

Elzan

Sameer Khurana

E&Y - PMU (HUDD)

ULBs- urban Local Bodies, Odisha (Jatni and Dhenkanal)

Subhashini

Sourav Mohanty

CBOs(SHGs/SDAs)

DTI Odisha

iFIX

Satish N

Prashanth C

BMGF

Finance Department, Punjab

Shailesh Kumar

Sameer Khurana

Janaagraha

Department of Rural Development and Panchayats, Punjab

This guide provides step-by-step instructions for installing iFIX using GitHub Actions in an AWS environment.

GitHub account (sign up if you do not have one)

kubectl installed on your system

AWS account

AWS CLI installed locally

Postman

A domain host (for example, GoDaddy) to point a domain at your server

Prepare AWS IAM User

Create an IAM user in your AWS account.

Generate ACCESS_KEY and SECRET_KEY for the IAM user.

Assign administrator access to the IAM user for necessary permissions.

Fork the following repositories, with all their branches, into your organisation account on GitHub:

(We do not need the master data repo since we are using the MDMS-v2 by default with data seeded)

Go to the forked DIGIT-DevOps repository:

Navigate to the repository settings.

Go to Secrets and variables.

Click the Actions option under Secrets and variables.

On the new page, choose New repository secret under Repository secrets and add the following keys:

Navigate to the release-githubactions branch in the forked DevOps repository.

Enable GitHub Actions.

Click on Actions, then click on "I understand my workflows, go ahead and enable them":

The following steps can be done directly in the browser or the local system if you are familiar with Git usage.

Before following any of the steps switch to the release-githubactions branch.

Steps to edit the git repository in the browser -

Steps to edit in the local system if you are familiar with Git basics:

git clone {forked DevOps repo link}

Follow the steps below and make the changes:

Navigate to egov-demo.yaml (config-as-code/environments/egov-demo.yaml).

Under egov-persister, change the gitsync link of the configs repository to the forked config repository and the branch to UNIFIED-DEV.

Under egov-indexer, change the gitsync link of the configs repository to the forked config repository and the branch to UNIFIED-DEV.

Navigate to infra-as-code/terraform/sample-aws.

Open input.yaml and enter details such as domain_name, cluster_name, bucket_name, and db_name.

Navigate to file deploy-as-code/deployer/digit_installer.go

Search for ifix-demo in the file and check for health-demo-vX.X

Change the version to v1.1, so that it reads ifix-demo-v1.1.

Generate an SSH key pair using one of the following methods:

Method a: Use an online website. (Note: This is not recommended for production setups, only for demo purposes.)

Method b: Use OpenSSL commands:

Once all details are entered, push these changes to the remote GitHub repository. Open the Actions tab in your GitHub account to view the workflow. You should see that the workflow has started; wait until the pipelines complete successfully.

Connect to the Kubernetes cluster from your local machine using the following command:

Get the CNAME of the nginx-ingress-controller

The output of this will be something like this:

Add the displayed CNAME to your domain provider (for example, GoDaddy) against your domain name.

After connecting to the Kubernetes cluster, edit the deployment of the FileStore service using the following command:

The deployment.yaml for the FileStore service will open in VS Code. Add the AWS access key and secret key provided to you, as shown below:

Close the deployment.yaml file opened in your VS Code editor and the deployment will be updated.

Set up the AWS profile locally by running the following commands:

aws configure --profile {profilename}

Fill in the key values when prompted:

AWS_ACCESS_KEY_ID: <GENERATED_ACCESS_KEY>

AWS_SECRET_ACCESS_KEY: <GENERATED_SECRET_KEY>

AWS_DEFAULT_REGION: ap-south-1

export AWS_PROFILE={profilename}

AWS_ACCESS_KEY_ID: <GENERATED_ACCESS_KEY>

AWS_SECRET_ACCESS_KEY: <GENERATED_SECRET_KEY>

AWS_DEFAULT_REGION: ap-south-1

AWS_REGION: ap-south-1
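As a quick sanity check, the four key names listed above can be verified programmatically. The following is a minimal Python sketch and not part of the iFIX tooling; `REQUIRED_KEYS` and `missing_keys` are hypothetical names introduced here. GitHub does not display secret values once they are saved, so a check like this is only useful against a local shell environment or a workflow step where the secrets have been mapped to environment variables.

```python
from typing import List, Mapping

# Key names taken from the list above.
REQUIRED_KEYS = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_DEFAULT_REGION",
    "AWS_REGION",
)


def missing_keys(env: Mapping[str, str]) -> List[str]:
    """Return the required keys that are absent or empty in env."""
    return [key for key in REQUIRED_KEYS if not env.get(key)]


# Illustrative values only; in practice pass os.environ instead.
sample_env = {
    "AWS_ACCESS_KEY_ID": "<GENERATED_ACCESS_KEY>",
    "AWS_SECRET_ACCESS_KEY": "<GENERATED_SECRET_KEY>",
    "AWS_DEFAULT_REGION": "ap-south-1",
}
print("Missing keys:", missing_keys(sample_env))
```

Passing `os.environ` instead of `sample_env` checks the current shell.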

Then commit and push to the release-githubactions branch

openssl genpkey -algorithm RSA -out private_key.pem

ssh-keygen -y -f private_key.pem > ssh_public_key

To view the keys, run the following commands or open the files in any text editor:

vi private_key.pem

vi ssh_public_key

Once the keys are generated, navigate to config-as-code/environments.

Open egov-demo-secrets.yaml

Search for PRIVATE KEY and replace everything from -----BEGIN RSA PRIVATE KEY----- to -----END RSA PRIVATE KEY----- with the generated private key (note: make sure the private key is indented in the same way as the existing entry).

Add the public key to your GitHub account (see the Git guide).
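Because the private key must keep the indentation used in egov-demo-secrets.yaml, pasting it by hand is easy to get wrong. The helper below is a small Python sketch that prefixes every line of a PEM block with a fixed number of spaces. The function name `indent_pem` and the indentation width are assumptions; check the existing entry in your file for the actual width before pasting.

```python
def indent_pem(pem_text: str, spaces: int) -> str:
    """Prefix every line of a PEM block with `spaces` spaces."""
    prefix = " " * spaces
    lines = pem_text.strip().splitlines()
    return "\n".join(prefix + line for line in lines)


# Illustrative placeholder content, not a real key.
sample_pem = (
    "-----BEGIN RSA PRIVATE KEY-----\n"
    "PLACEHOLDERKEYMATERIAL\n"
    "-----END RSA PRIVATE KEY-----\n"
)
# The width of 4 is a guess; match the existing entry in your file.
print(indent_pem(sample_pem, 4))
```

To indent the real key, read private_key.pem from disk and pass its contents in place of `sample_pem`.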

aws eks update-kubeconfig --region ap-south-1 --name $CLUSTER_NAME

kubectl get svc nginx-ingress-controller -n egov -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'

export KUBE_EDITOR='code --wait'

kubectl edit deployment egov-filestore -n egov

Enables exchange of program, on-program, sanction, on-sanction, etc.

Signature of {header}+{message} body verified using sender's signing public key

TgE1hcA2E+YPMdPGz4vveKQpR0x+pgzRTlet52qh63Kekr71vWWScXqaRFtQW64uRFZGBUhHYYZQ2y6LffwnNOOQhhssaThhqVBhXNEwX9i75SNYXi5XSJVDYzSyHrhF20HW6RE9mAVWdc80i7d+FXlh+b/U+fnj+SrZ2s6Xd0WUZvU29LgqeUpyznlWLu1mDdJxNZavsDLWmxjTnknqBjDvwSc35WhFDhXDA2lWmm8YpZ1Y6TBmvvtVS7mAOTnhFy9sdCbrLcfXk5QWIsdzlvPqlkvdwEf30OZ6ewb680Aj3hO2OT5LCv7iLyz7C7srnB9lJT5gXiw+eSnktPXlDA==

{"location_code":"pg.citya","name":"Test 1","start_date":0,"end_date":0,"client_host_url":"https://unified-dev.digit.org","function_code":"string","administration_code":"string","recipient_segment_code":"string","economic_segment_code":"string","source_of_fund_code":"string","target_segment_code":"string","currency_code":"string","locale_code":"string","status":{"status_code":"RECEIVED","status_message":"string"}}

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature
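The request body described here has three parts: a header, a message carried as a JSON string, and a signature computed over the header and message. The Python sketch below shows one way to assemble such a body. Several details are assumptions rather than documented behaviour: the exact byte layout that is signed (compact header JSON followed by the message string), the function names, and above all `placeholder_sign`, which only returns a Base64-encoded SHA-256 digest and is not a real signature. A real sender would pass in a routine that signs with its private signing key.

```python
import base64
import hashlib
import json
from typing import Callable, Dict


def placeholder_sign(payload: bytes) -> str:
    """Stand-in signer: Base64 SHA-256 digest. NOT a real signature."""
    return base64.b64encode(hashlib.sha256(payload).digest()).decode("ascii")


def build_exchange_request(
    header: Dict, message: Dict, sign: Callable[[bytes], str]
) -> Dict:
    """Assemble a body with signature, header and stringified message."""
    # The message travels as a compact JSON string, not a nested object.
    message_str = json.dumps(message, separators=(",", ":"))
    # Assumption: signed bytes are compact header JSON + message string.
    header_str = json.dumps(header, separators=(",", ":"))
    signature = sign((header_str + message_str).encode("utf-8"))
    return {"signature": signature, "header": header, "message": message_str}


# Field names follow the example request; values are illustrative.
header = {
    "message_id": "123",
    "action": "create",
    "sender_id": "program@https://spp.example.org",
    "receiver_id": "program@https://pymts.example.org",
    "is_msg_encrypted": False,
}
message = {"location_code": "pg.citya", "name": "Test 1"}

request_body = build_exchange_request(header, message, placeholder_sign)
print(json.dumps(request_body, indent=2))
```

Keeping the signer as a parameter means the envelope logic stays the same when the placeholder is swapped for a real private-key signing routine.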

{
  "errors": [
    {
      "code": "text",
      "message": "text"
    }
  ]
}

POST /digit-exchange/v1/exchange/EXCHANGE_TYPE HTTP/1.1
Host:
Content-Type: application/json
Accept: */*
Content-Length: 1107

{
  "signature": "TgE1hcA2E+YPMdPGz4vveKQpR0x+pgzRTlet52qh63Kekr71vWWScXqaRFtQW64uRFZGBUhHYYZQ2y6LffwnNOOQhhssaThhqVBhXNEwX9i75SNYXi5XSJVDYzSyHrhF20HW6RE9mAVWdc80i7d+FXlh+b/U+fnj+SrZ2s6Xd0WUZvU29LgqeUpyznlWLu1mDdJxNZavsDLWmxjTnknqBjDvwSc35WhFDhXDA2lWmm8YpZ1Y6TBmvvtVS7mAOTnhFy9sdCbrLcfXk5QWIsdzlvPqlkvdwEf30OZ6ewb680Aj3hO2OT5LCv7iLyz7C7srnB9lJT5gXiw+eSnktPXlDA==",
  "header": {
    "message_id": "123",
    "message_ts": "text",
    "action": "create",
    "sender_id": "program@https://spp.example.org",
    "sender_uri": "https://spp.example.org/{namespace}/callback/on-create",
    "receiver_id": "program@https://pymts.example.org",
    "is_msg_encrypted": false
  },
  "message": "{\"location_code\":\"pg.citya\",\"name\":\"Test 1\",\"start_date\":0,\"end_date\":0,\"client_host_url\":\"https://unified-dev.digit.org\",\"function_code\":\"string\",\"administration_code\":\"string\",\"recipient_segment_code\":\"string\",\"economic_segment_code\":\"string\",\"source_of_fund_code\":\"string\",\"target_segment_code\":\"string\",\"currency_code\":\"string\",\"locale_code\":\"string\",\"status\":{\"status_code\":\"RECEIVED\",\"status_message\":\"string\"}}"
}

Create programs in the system

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Enables exchange of program related messages

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Creates an estimate for the program.

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Users can update an already created estimate using on-estimate.

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Creates a sanction request in the system; the sanction is linked to a program.

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Updates the status of a created sanction request.

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Users can request the creation of an allocation for a sanction.

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Updates the status of a created allocation.

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Creates a new disbursement request to initiate payment.

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Updates the status of a created disbursement request.

Signature of {header}+{message} body verified using sender's signing public key

HTTP layer error details

Acknowledgement of message received after successful validation of message and signature

Creates a new demand request.